Visoric at the Festival of the Future 2025 at the Deutsches Museum

Photo: XR Stager News Room / Ulrich Buckenlei

Reality Check with the Robo Dog – Humans, Machines and Mixed Reality Put to the Test

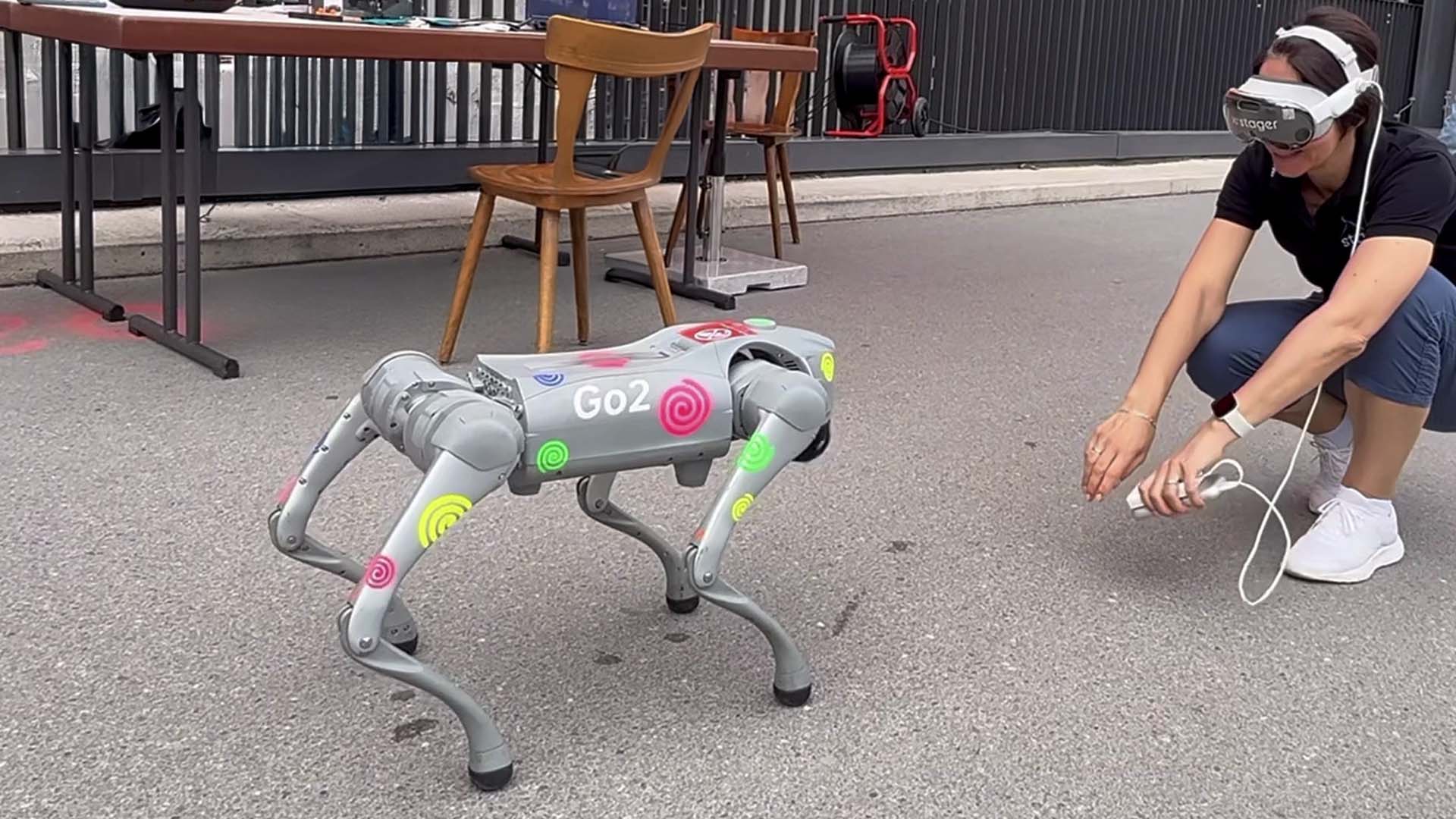

How real is the vision of intelligent human-machine interaction supported by XR headsets and autonomous robots? At the Festival of the Future at the Deutsches Museum in Munich, this question became tangible, quite literally. The Visoric team, in collaboration with Nataliya Daniltseva, presented a public test setup that combined real-world robotics with immersive technology. With a test subject wearing an Apple Vision Pro and facing a Unitree robotic dog, the scene resembled a glimpse into the future, even if the technologies were not yet fully connected.

This very gap was part of the experiment: What if? What if XR, AI and robotics truly communicated in real time? The showcase powerfully illustrated how close we already are to this reality – and what steps are needed for a true technological breakthrough to emerge from a simple reality check.

Interaction with robotic dog and Apple Vision Pro

Photo: XR Stager News Room / Ulrich Buckenlei

Gesture Control Meets Reality – Rethinking Intuitive Control

At the heart of the experiment was the question of how future autonomous systems will be controlled. The test subject moved their hands in front of the robot, and the robot appeared to respond to the gestures. In truth, however, there was no direct connection between the headset and the robot. This staged gap opened up a fascinating question: How can XR technology like the Apple Vision Pro be intelligently linked to robotic control?

- XR headset with 3D mapping: Precisely tracks space, hand movements, and gaze direction

- Robot with real-time sensors: Autonomous behavior controlled via WiFi, AI, and ROS modules

- Connection conceivable: One interface, many new application possibilities

Visitors experienced firsthand how powerful the systems already are individually – and how much potential lies in their integration. For many, the setup felt less like a demonstration and more like an invitation to think further.
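To make the "what if" concrete, the following is a minimal sketch of how recognized gestures could be translated into motion requests for a robot. It is not the setup shown at the festival, where no such link existed. The gesture labels, the `VelocityCommand` type, the speed values, and the confidence threshold are all hypothetical and chosen only for illustration. A real integration would take its input from the headset's hand tracking and send the result through the robot's own control interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VelocityCommand:
    """Planar motion request: forward speed (m/s) and turn rate (rad/s)."""
    linear_x: float
    angular_z: float


STOP = VelocityCommand(0.0, 0.0)

# Hypothetical gesture labels and speeds, assumed for this sketch only.
# A positive turn rate means a counterclockwise (left) turn.
GESTURE_TO_COMMAND = {
    "palm_forward": STOP,
    "point_ahead": VelocityCommand(0.3, 0.0),
    "swipe_left": VelocityCommand(0.0, 0.5),
    "swipe_right": VelocityCommand(0.0, -0.5),
}


def command_for(gesture: str, confidence: float,
                min_confidence: float = 0.8) -> VelocityCommand:
    """Return the command for a recognized gesture, or STOP when unsure.

    Unknown gestures and low-confidence detections both fall back to STOP,
    so the robot never moves on an ambiguous input.
    """
    if confidence < min_confidence:
        return STOP
    return GESTURE_TO_COMMAND.get(gesture, STOP)
```

Falling back to a stop command for anything uncertain is a deliberate choice here: in a public setting with visitors nearby, a missed gesture is far less costly than an unintended movement.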

Close-up of the Unitree Go2

Photo: XR Stager News Room / Ulrich Buckenlei

From Play to Simulation – Learning Processes Through Immersive Robotics

What at first glance looked like a playground for tech enthusiasts also had a serious educational dimension. The combination of XR glasses and robotics enables scenarios where learning content becomes physically tangible. Gestures become commands, gaze directions become control inputs, movements become training data.

- Vocational training and further education: Simulated machine operation without physical risk

- Industrial training environments: Intuitive interaction between humans and machines

- Research and teaching: Data-driven feedback from real interaction

Especially for complex systems such as those found in logistics, manufacturing, or autonomous fleets, this combination opens up entirely new avenues of human-robot collaboration.

Visoric test scene with XR and Unitree

Photo: XR Stager News Room / Ulrich Buckenlei

Technical Integration – Where Vision Pro and Robotics Meet

Although there was no direct connection between the Apple Vision Pro and the Unitree robot in the experiment, the technological foundation is already in place. The headset detects hands, eyes, and spatial depth. The robot accepts commands over WiFi, uses neural networks, and can be linked with ROS. Full integration is not only possible but likely.

- Technological synergy: XR perceives the space, robotics acts within it

- Shared data foundation: Sensor and position data can be linked

- Predictive systems: The robot learns to interpret human intentions

This perspective opens new horizons – not only for developers but also for companies aiming to adopt adaptive automation.
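Linking position data from both systems comes down to expressing a point seen by the headset in the robot's own coordinate frame. The sketch below shows this for the flat, two-dimensional case. It assumes that the robot's position and heading are already known in the headset's map frame. The function name and parameters are illustrative and not part of any Apple or Unitree interface.

```python
import math


def headset_to_robot(point_xy, robot_xy, robot_yaw):
    """Express a point from the headset's map frame in the robot's frame.

    point_xy  : (x, y) of the target in the headset frame, in metres
    robot_xy  : (x, y) of the robot in the headset frame, in metres
    robot_yaw : robot heading in the headset frame, in radians,
                measured counterclockwise from the x axis
    Returns (forward, left) offsets of the target as seen from the robot.
    """
    # Offset from the robot to the target, still in the headset frame.
    dx = point_xy[0] - robot_xy[0]
    dy = point_xy[1] - robot_xy[1]
    # Rotate the offset by -robot_yaw to undo the robot's own rotation.
    cos_y, sin_y = math.cos(robot_yaw), math.sin(robot_yaw)
    return (cos_y * dx + sin_y * dy, -sin_y * dx + cos_y * dy)
```

For example, with the robot at `(1.0, 0.0)` facing along the positive y axis (`robot_yaw = math.pi / 2`), a target at `(1.0, 2.0)` comes out as roughly two metres straight ahead of the robot. A full integration would work in three dimensions and would also have to estimate the robot's pose continuously, which this sketch leaves out.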

The Visoric expert team in 3D, AI, XR and Robotics

Visualization: XR Stager News Room / Ulrich Buckenlei

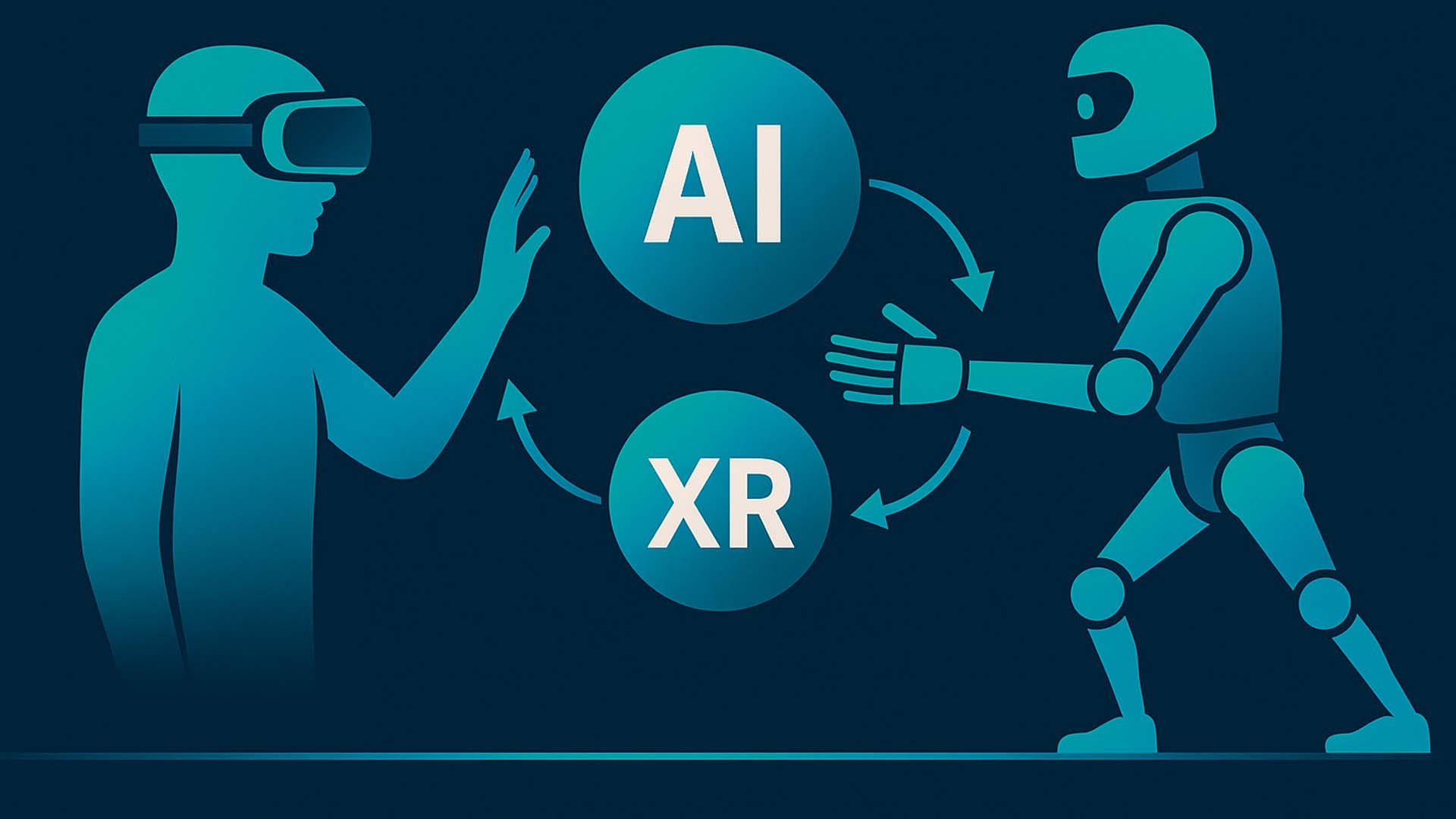

Immersive Robotics in Industry – A New Interface

When AI, spatial awareness, and robotic behavior merge, more than just an assistance system is created: an adaptive partner emerges that acts with contextual awareness. Especially in manufacturing, logistics, or training, we need systems that not only execute commands but also anticipate needs.

- Predictive maintenance: Robots detect conditions and respond dynamically

- Collaboration instead of control: Human gestures become a shared language

- New UX for machines: XR becomes the interface for robotic systems

This new interface – between machine and human – will fundamentally change how we experience technology. And it begins with the first prototype, the first test, the first question: What if?

Interactive human-machine coordination

Photo: XR Stager News Room / Ulrich Buckenlei

Video: Robo Dog Meets XR – A Scene with Symbolic Power

A scene straight out of the future: XR headset, gestures, a robotic dog – and a thought that lingers. The interaction may still be simulated, but it stands as a symbol for a technological reality that could soon arrive.

by Visoric XR Studio | Reality Check with Vision Pro and Robotic Dog

Video: © Visoric GmbH | 1E9 & Festival of the Future, Deutsches Museum Munich

Contact Our Expert Team

The Visoric team supports companies in developing and implementing intelligent XR and robotics solutions – from prototype to industrial application.

- XR interfaces for robotics: Mixed reality meets real-time control

- Adaptive training solutions: Immersive scenarios for human-machine interaction

- Consulting & development: From idea to real product – in collaboration with you

Get in touch now – and let’s shape the future of interaction together.

Contacts:

Ulrich Buckenlei (Creative Director)

Mobile: +49 152 53532871

Email: ulrich.buckenlei@visoric.com

Nataliya Daniltseva (Project Manager)

Mobile: +49 176 72805705

Email: nataliya.daniltseva@visoric.com

Address:

VISORIC GmbH

Bayerstraße 13

D-80335 München