Image: Digital vehicle model in real-time simulation space

Image: © Ulrich Buckenlei | Visoric GmbH 2025

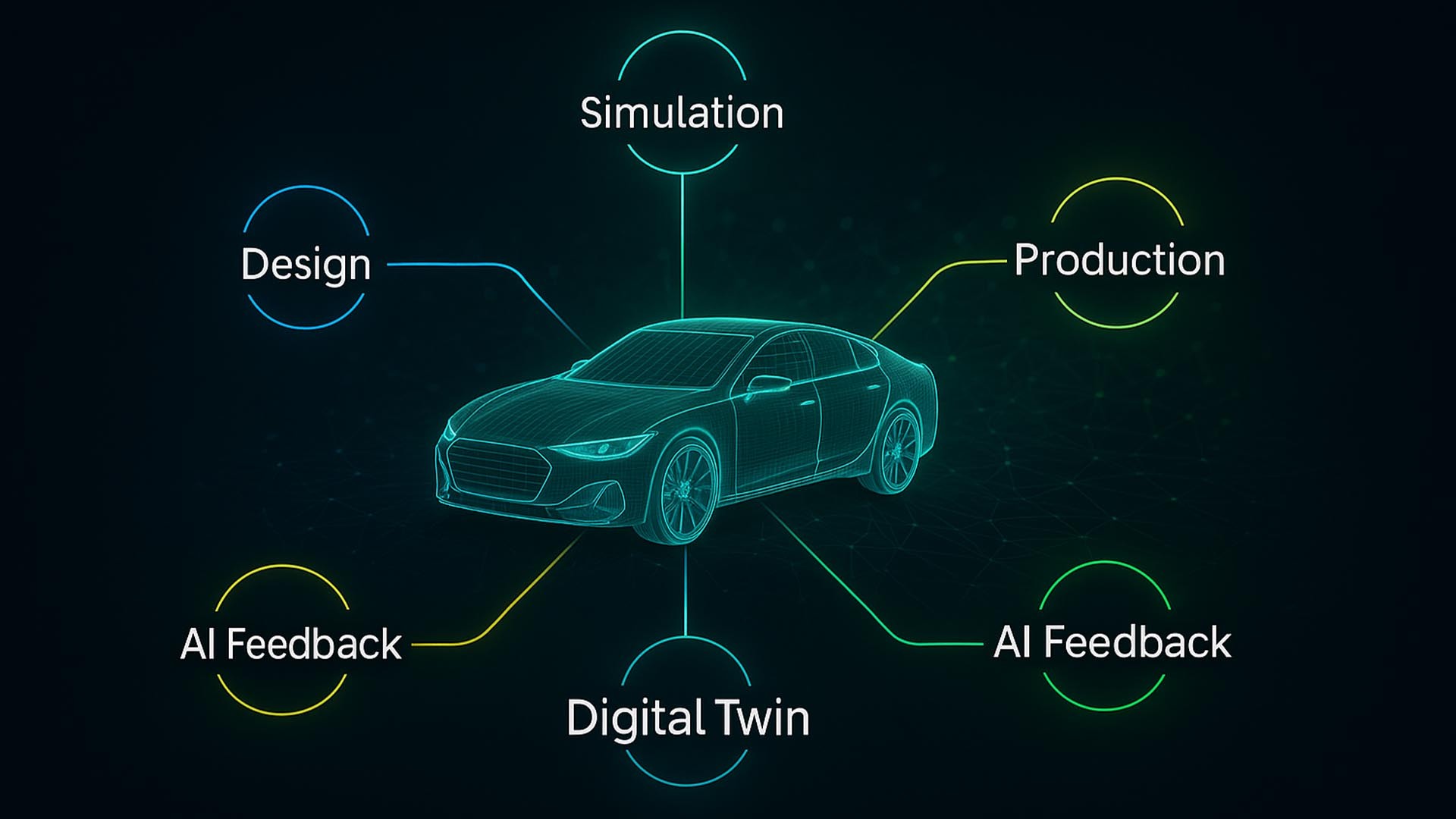

Vehicles are increasingly created in digital worlds long before the first prototype is built. 3D models, artificial intelligence, and physical simulations merge into a learning ecosystem that connects design, testing, and production in real time. What once happened sequentially now occurs in parallel – faster, more precise, and traceable. This is exactly where the new era of automotive intelligence begins.

Virtual intelligence meets mechanical precision

The development of modern vehicles no longer begins with clay and wind tunnels but with digital twins. In high-resolution 3D environments, aerodynamics, material behavior, and driving physics are simulated – continuously analyzed by AI that recognizes patterns, compares variants, and prepares decisions. Virtual test series replace entire hardware-intensive phases without sacrificing the validity of the results.

- Predictive design → Vehicles learn in virtual space

- Real-time simulation → Wind tunnel and road merge

- Data loops → Every test changes real parameters

Image: AI-assisted aerodynamic simulation in the digital development space | Visualization: NVIDIA Omniverse

This approach fundamentally changes the role of the 3D model: from static data carrier to active participant in the development process. Every virtual iteration measurably flows back into the mechanics. This creates a reliable foundation on which teams can make faster decisions, with greater confidence in the results.

The digital twin as a neural hub

The digital twin connects areas that were previously separate: engineering, UX, and manufacturing. It is a database, simulation core, and interface in one – a living reference point that aligns all participating disciplines. Companies like Mercedes-AMG and BMW use such models to test variants, evaluate ergonomics, and prepare production steps.

- Simulation + Sensorics + Control = Adaptive design intelligence

- Virtual sensors provide real-time data for AI models

- Every iteration in the 3D model reduces the need for physical prototypes

Image: The digital twin connects design, simulation, and control in real time | Visualization: Visoric GmbH 2025

In practice, this means that changes to geometry, software, or parameters are no longer evaluated in isolation. The twin propagates them throughout the entire system – including feedback from testing and production. This synchronous mode reduces friction, accelerates learning cycles, and seamlessly prepares for the next step: genuine interaction between human and machine.
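The propagation described above can be pictured as a shared parameter store that notifies every discipline subscribed to a value. The following is a minimal sketch, not a description of any vendor's twin: the `DigitalTwin` class, the `spoiler_angle_deg` parameter, and the two subscribers are hypothetical names chosen purely for illustration.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Callback = Callable[[str, float], None]


class DigitalTwin:
    """Hypothetical twin: a parameter store that notifies subscribers on change."""

    def __init__(self) -> None:
        self._params: Dict[str, float] = {}
        self._subscribers: Dict[str, List[Callback]] = defaultdict(list)

    def subscribe(self, name: str, callback: Callback) -> None:
        """Register a discipline (simulation, manufacturing, ...) for one parameter."""
        self._subscribers[name].append(callback)

    def set_param(self, name: str, value: float) -> None:
        """Store a new value and propagate it; unchanged values are ignored."""
        if self._params.get(name) == value:
            return
        self._params[name] = value
        for callback in self._subscribers[name]:
            callback(name, value)


# Illustrative wiring: two disciplines react to the same geometry change.
log: List[str] = []
twin = DigitalTwin()
twin.subscribe("spoiler_angle_deg", lambda n, v: log.append(f"simulation: rerun with {n}={v}"))
twin.subscribe("spoiler_angle_deg", lambda n, v: log.append(f"manufacturing: recheck {n}={v}"))

twin.set_param("spoiler_angle_deg", 12.0)  # both subscribers are notified
twin.set_param("spoiler_angle_deg", 12.0)  # same value again, nothing is propagated
```

A production twin would add versioning, validation, and asynchronous transport; the sketch only shows the core idea that one change reaches all participants instead of being evaluated in isolation.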

From simulation to interaction

Touch, gestures, gaze: modern HMIs make complex functions tangible. Mixed-reality cockpits become laboratories where designers and engineers can experience behavior before it exists. The key lies in connecting data with emotion – the immediate feeling that a design responds correctly.

- Immersive human-machine interfaces (HMI)

- Mixed-reality cockpits as test environments

- Data-to-emotion → Design reacts to user intent

Image: Mixed-reality interface for design and UX testing | Visualization: Visoric GmbH 2025

When real interaction meets simulated mechanics, a model becomes an experience. Teams can sense latency, transitions, and confirmations – embedding quality criteria that will later define production standards. This approach paves the way for factories where simulation and production form one coherent system.

Game engines & AI – the new automotive factory

Game engines and real-time 3D platforms have long since become industry standards. Unreal Engine, Unity, and NVIDIA Omniverse not only visualize – they simulate: physics, light, materials, collaboration – in real time and interconnected. AI tools prepare data, generate variants, and check constraints. The result is a creative yet highly precise working environment.

- Gamification in engineering

- Real-time collaboration with AI tools

- Industrial metaverse → Production meets simulation

Image: AI-supported real-time development with Unreal Engine | Visualization: Visoric GmbH 2025

Assembly sequences, logistics flows, and safety distances can be experienced before the first production line exists. Decisions become explainable, investments transparent. This is where the scientific perspective begins: how precise is the link between input and response?

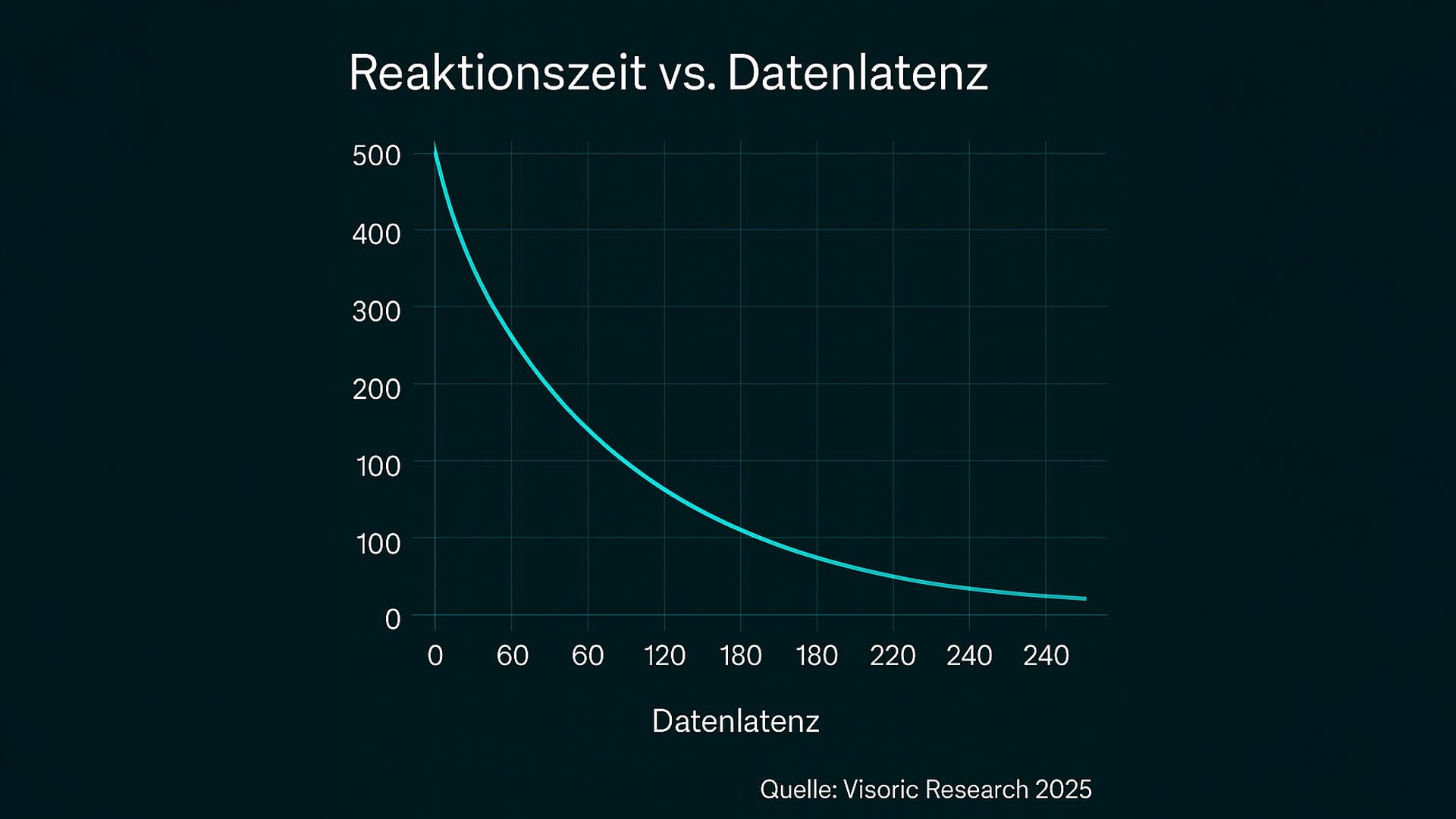

Scientific perspective – synchrony in real time

A key question is: how small is the latency between human action and visual feedback? Systems that synchronize rendering, tracking, and data access create sensory coherence – the feeling that everything reacts “as one.” This coherence is measurable and correlates with precision and trust.

Diagram: Response time vs. data latency | Source: Visoric Research 2025

The diagram illustrates that when real-time rendering and data paths are optimized, the perceived delay between input and output decreases significantly. This increases accuracy in interactions, reduces errors, and provides a robust foundation for transfer into industrial environments where every millisecond counts.
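One way to reason about this delay is as a budget: the input-to-output latency is roughly the sum of the stages an input passes through. The sketch below assumes four stages (tracking, data access, rendering, display) and a 20 ms target; both the stage names and all millisecond values are illustrative placeholders, not measurements from the diagram.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class LatencyBudget:
    """Per-stage latencies in milliseconds (placeholder values, not measured data)."""

    stages_ms: Dict[str, float]

    def total_ms(self) -> float:
        """End-to-end latency, assuming the stages run strictly one after another."""
        return sum(self.stages_ms.values())

    def bottleneck(self) -> str:
        """Name of the stage that contributes the most latency."""
        return max(self.stages_ms, key=self.stages_ms.get)

    def within(self, target_ms: float) -> bool:
        """True if the end-to-end latency meets the target."""
        return self.total_ms() <= target_ms


TARGET_MS = 20.0  # assumed target for illustration only

baseline = LatencyBudget(
    {"tracking": 4.0, "data_access": 12.0, "rendering": 8.0, "display": 4.0}
)
# Hypothetical optimization of the data path only; other stages stay unchanged.
optimized = LatencyBudget({**baseline.stages_ms, "data_access": 3.0})

print(baseline.total_ms(), baseline.bottleneck(), baseline.within(TARGET_MS))
print(optimized.total_ms(), optimized.bottleneck(), optimized.within(TARGET_MS))
```

In a real system the stage values would come from instrumentation, and stages that overlap in a pipeline would not add up linearly, so the simple sum is an upper-bound approximation.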

Industrial metaverse & cross-industry impact

The 3D intelligence of the vehicle is not an isolated solution. The same principles drive robotics, energy, and production systems: a shared 3D space where data, people, and machines work in sync. OEMs and suppliers share models, test processes, and orchestrate supply chains – live and traceable.

- Shared 3D platforms between OEMs and suppliers

- Transfer from automotive to Industry 4.0

- The future is not built – it is simulated

Image: 3D simulation networks in the industrial metaverse | Visualization: Visoric GmbH 2025

What began in automotive as aerodynamic optimization is now scaling up to the planning of entire factories. When everyone looks at the same model – and changes it in real time – decisions become faster, risks smaller, and quality more consistent. A short example demonstrates the power of this connection.

Interactive vehicle control – when 3D becomes the interface

The video example shows a vehicle UI directly based on an interactive 3D game model. Functions like spoilers, lights, or suspension modes can be toggled in real time – visible, tangible, and without detours through abstract menus. The combination of physics simulation, rendering, and interface logic creates an immediate sense of control.

- Real-time feedback between touch gesture and mechanical response

- A UI based on a 3D game model that visualizes states

- Transferable to robotics, production cells, and industrial dashboards

© Video: VIP Collector | Design: Gorden Wagener | Engineering: Mercedes-AMG

The clip illustrates how control, visualization, and physics merge into a seamless experience: every input changes the model – and the model drives the real function.
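The pattern "input changes the model, the model drives the function" can be sketched as a small state model that is the single source of truth for both the UI and the actuators. Everything here is hypothetical: the `VehicleModel` class, the control names, the suspension mode names, and the `commands` list, which merely stands in for whatever bus would carry commands to real hardware.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Hypothetical mode names; a real vehicle defines its own set.
SUSPENSION_MODES: Tuple[str, ...] = ("comfort", "sport", "race")


@dataclass
class VehicleModel:
    """State shared by the 3D view and the (stubbed) actuator layer."""

    spoiler_raised: bool = False
    lights_on: bool = False
    suspension_mode: str = SUSPENSION_MODES[0]
    commands: List[str] = field(default_factory=list)  # stand-in for an actuator bus

    def handle_input(self, control: str) -> None:
        """Apply one UI input to the model, then emit the matching command."""
        if control == "spoiler":
            self.spoiler_raised = not self.spoiler_raised
            self.commands.append(f"spoiler:{'up' if self.spoiler_raised else 'down'}")
        elif control == "lights":
            self.lights_on = not self.lights_on
            self.commands.append(f"lights:{'on' if self.lights_on else 'off'}")
        elif control == "suspension":
            # Cycle to the next mode and wrap around after the last one.
            index = (SUSPENSION_MODES.index(self.suspension_mode) + 1) % len(SUSPENSION_MODES)
            self.suspension_mode = SUSPENSION_MODES[index]
            self.commands.append(f"suspension:{self.suspension_mode}")
        else:
            raise ValueError(f"unknown control: {control}")


model = VehicleModel()
for tap in ["spoiler", "suspension", "suspension", "suspension"]:
    model.handle_input(tap)
```

Because the view renders from the same fields that generate the commands, what the user sees and what the vehicle is told to do cannot drift apart, which is the property the clip demonstrates.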

Shaping the future – from 3D intelligence to real performance

The Munich-based Visoric team supports companies in translating digital twins, real-time simulation, and intuitive interfaces into productive solutions. From the first idea to a production-ready application, clear steps, measurable results, and tangible value emerge.

- Consulting & concept: from use case to reliable roadmap

- Development & integration: AI, XR, real-time 3D, and data connectivity

- Rollout & training: secure implementation, scaling, and enablement

Together, we find the fastest path from virtual testing to real performance – precise, transparent, and inspired by excellent design.

Contacts:

Ulrich Buckenlei (Creative Director)

Mobile: +49 152 53532871

Email: ulrich.buckenlei@visoric.com

Nataliya Daniltseva (Project Manager)

Mobile: +49 176 72805705

Email: nataliya.daniltseva@visoric.com

Address:

VISORIC GmbH

Bayerstraße 13

D-80335 Munich