Image: Empathic interaction in public space. A simple gesture becomes an effective donation. Source: MISEREOR / Kolle Rebbe, 2025

At the core of contemporary brand experiences, artificial intelligence, 3D/XR, and interaction design merge. Passive displays turn into impactful experiences that appear exactly where people are emotionally engaged. Complex messages become understandable, decisions easier, and impact visible.

Through adaptive systems, computer vision, and spatial perception, interactions become meaningful. AI recognizes intentions, confirms actions, and visualizes immediate impact. A new quality of experience design emerges, where perception, meaning, and action converge.

From Message to Experience

Artificial intelligence fundamentally changes the language of brand communication. It is no longer about sending information but about creating experiences that adapt in real time. Where brochures, videos, or presentations once defined perception, today interaction in space is what counts. Systems recognize who moves where, when, and how. They analyze gaze direction, gestures, emotions, and ambient sounds to develop situational understanding. Messages reach people not only through text or sound but through meaning in context.

Smart sensors and AI translate complex content into immediate, spatially anchored experiences. A visitor doesn’t just see a product but experiences its function, effect, and value through intuitive cues, light, movement, and feedback. The display responds to them, changing as they approach and intensifying their attention at the precise moment it arises. Communication becomes a living system that does not explain but understands.

- AI recognizes context, intention, and attention

- XR anchors the message where action takes place

- Communication becomes experience, relevance becomes tangible

Image: Impact becomes visible where it is created. Concept visualization: Ulrich Buckenlei / Visoric GmbH

This new form of mediation connects perception, emotion, and logic. The fusion of visualization, real-time data, and AI creates a precision that was previously out of reach. Brands can not only convey information but embody it. They create environments that provide orientation, facilitate decisions, and build trust. Where interpretation was once needed, clarity now emerges. Instead of linear messages, people experience dynamic, interactive systems that guide without overwhelming.

This creates a new quality of communication – one that does not persuade but involves. A brand is no longer explained; it is experienced. It becomes part of the moment in which meaning emerges.

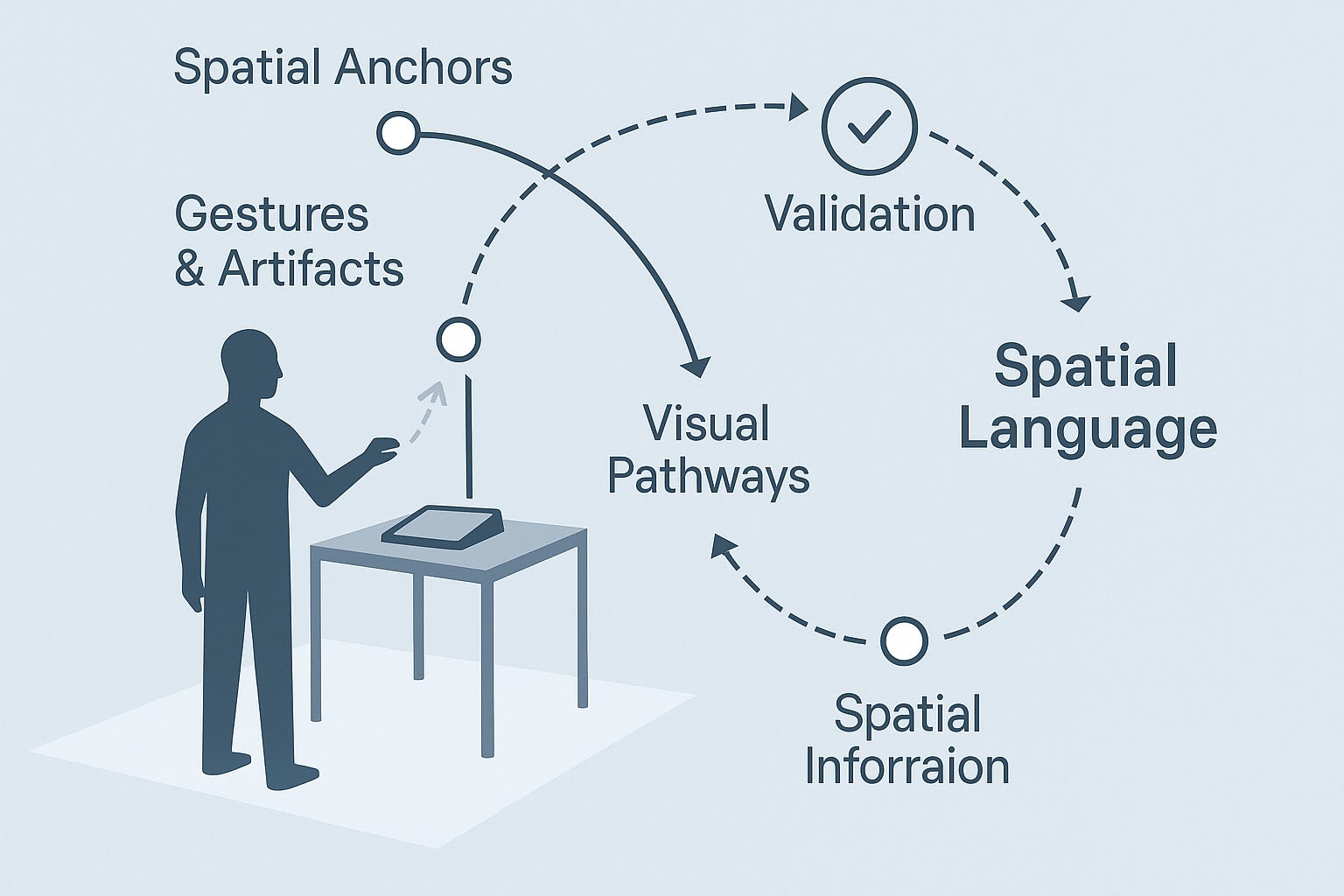

The Language of Space

People, objects, and environments form a spatial system. Mixed Reality makes this relationship visible. Content remains anchored where it is needed and moves with the action. This reduces cognitive load and increases understanding and trust.

- Spatial anchors link information with gestures and artifacts

- Visual paths indicate direction, sequence, and impact

- Validation confirms correct execution in real time

Image: Schematic visualization of spatial cues and interaction paths. Ulrich Buckenlei / Visoric GmbH

The visualization illustrates how information can be spatially anchored in mixed-reality environments. Instead of displaying content on flat screens, data, cues, and interactions are positioned as three-dimensional anchor points in space. These anchors respond to movement, gestures, and gaze direction and remain visible where they are relevant. Through this context sensitivity, a system emerges that dynamically links information with the real environment, fostering orientation, understanding, and confidence.

The illustration highlights the paradigm shift from linear to spatial-cognitive communication. Artificial intelligence analyzes user position, intent, and interaction patterns and translates them into adaptive, spatially bound information paths. This spatial semantics reduces cognitive load and increases information retention. The combination of spatial perception, real-time data, and semantic analysis enables complex relationships to be experienced intuitively – a key development for research, education, and interactive brand communication.
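To make the idea of context-sensitive anchors more concrete, here is a minimal sketch of one possible relevance check. It is an illustration under stated assumptions, not a description of any specific installation: the `Anchor` type, the `is_relevant` function, and the distance and angle thresholds are hypothetical names and values chosen for this example.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Anchor:
    """Hypothetical spatial anchor: a label pinned to a point in the room."""
    name: str
    position: tuple[float, float, float]  # metres, world coordinates


def is_relevant(anchor, user_pos, gaze_dir, max_distance=2.0, max_angle_deg=30.0):
    """Return True if the anchor is nearby and roughly in the gaze direction.

    The thresholds are illustrative assumptions, not measured values.
    """
    to_anchor = tuple(a - u for a, u in zip(anchor.position, user_pos))
    distance = math.sqrt(sum(c * c for c in to_anchor))
    if distance == 0.0:
        return True  # the user is standing exactly at the anchor
    if distance > max_distance:
        return False
    gaze_len = math.sqrt(sum(c * c for c in gaze_dir))
    if gaze_len == 0.0:
        return False  # no usable gaze direction
    # Angle between the gaze direction and the direction to the anchor.
    cos_angle = sum(t * g for t, g in zip(to_anchor, gaze_dir)) / (distance * gaze_len)
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding errors
    return math.degrees(math.acos(cos_angle)) <= max_angle_deg
```

An anchor one metre straight ahead of the user would pass this check, while the same anchor five metres away or off to the side would not. A real system would likely add smoothing over time so that anchors do not flicker as the gaze moves.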

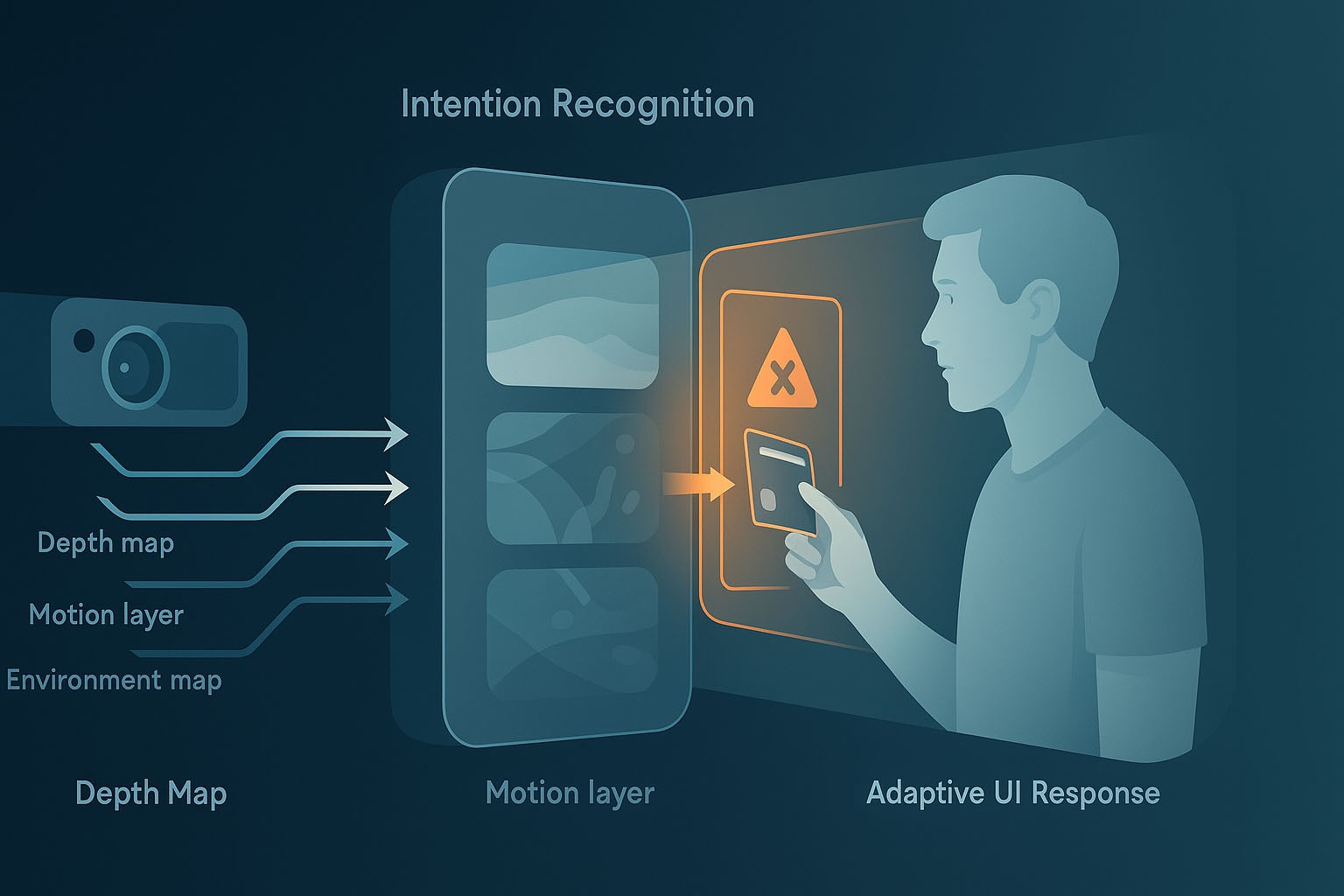

When Machines Recognize Intention

Seeing means understanding. AI systems recognize objects, positions, gestures, and states in real time. They merge video, 3D depth, and user actions into a consistent situational model. From this, adaptive cues arise that adjust to human behavior.

- Real-time recognition of gestures, card swipes, and safety zones

- Comparison of intended and actual interaction

- Adaptive support and early error prevention

Image: Schematic representation of AI-based intention recognition. Ulrich Buckenlei / Visoric GmbH

The visualization shows how modern AI systems combine visual, spatial, and semantic data streams to understand human intentions. Camera and depth sensors provide raw data on position, movement, and gestures. A neural model interprets this information as a dynamic 3D scene that captures the context of the action: not only the movement itself but also its meaning. The system detects whether a gesture is an interaction, a correction, or an erroneous attempt and immediately responds with adjusted cues or visual feedback. This creates a responsive, learning communication layer between humans and machines.

The diagram illustrates the shift from classical control to adaptive interaction. Whereas earlier interfaces reacted solely to inputs, today’s AI systems detect patterns, intentions, and deviations in behavioral context. By continuously learning from user behavior, the system refines its predictions and minimizes errors. In industrial, medical, or safety-critical applications, this significantly enhances efficiency and reliability. Scientifically, this marks a turning point: machines no longer react to commands but anticipate intentions – a decisive step toward cognitive assistance systems and empathic technology.

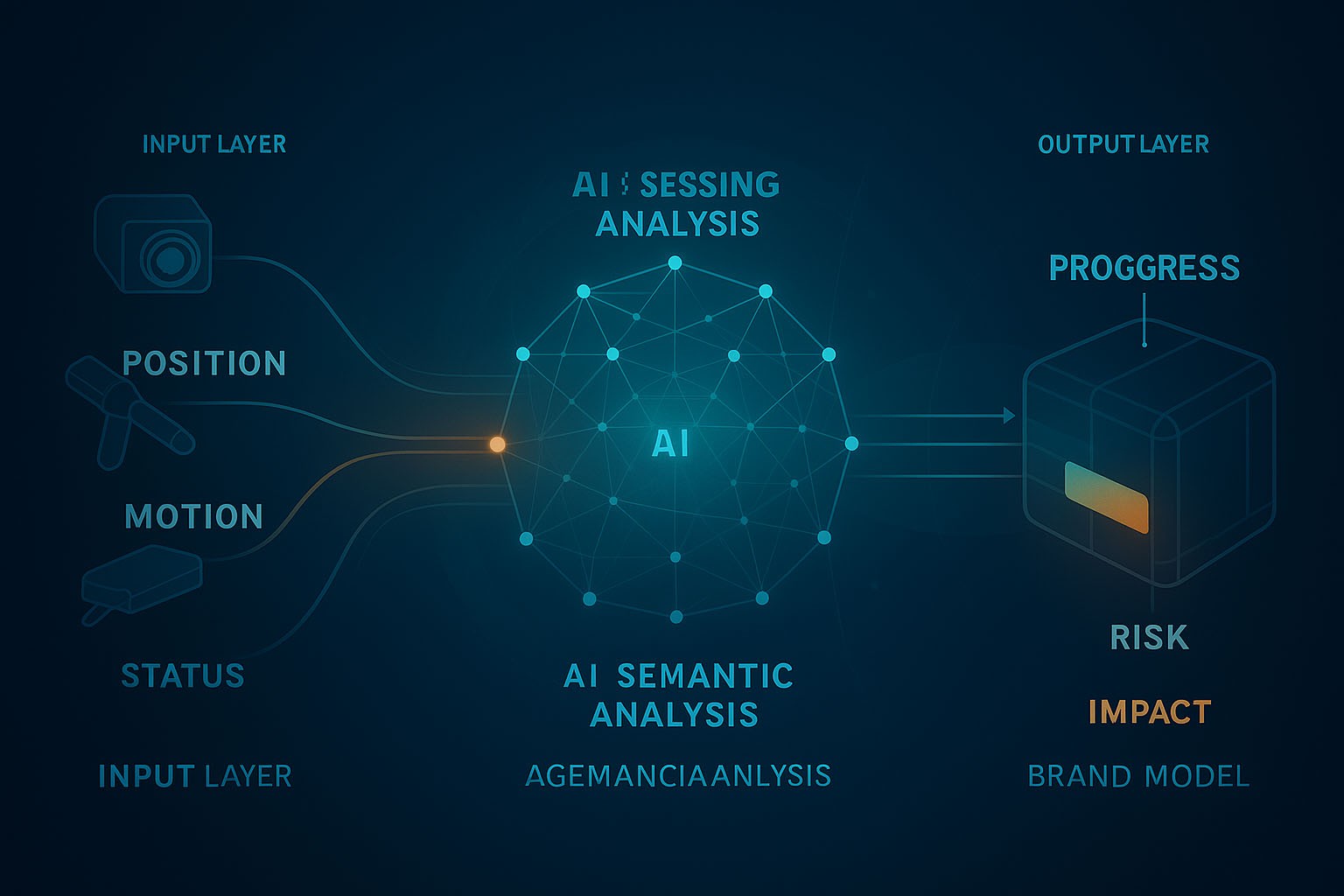

Data Flows in Brand Context

Every interaction generates data – positions, events, states. AI consolidates these signals into meaning. Mixed Reality translates data into understandable, brand-consistent visualizations.

- Processing of image, sensor, and log data in real time

- Consolidation within the spatial brand model

- Visualization of progress, risk, and impact

Image: Schematic representation of AI-driven data flows in brand context. Concept visualization: Ulrich Buckenlei / Visoric Research 2025

The visualization shows how every interaction, whether physical, digital, or immersive, continuously generates data. Sensors, cameras, and system logs provide positional, motion, and status information, which AI condenses into contextual signals. This data is analyzed in real time and aligned with brand-specific parameters to assess progress, impact, or risk. The model not only displays metrics but translates them into spatial, intuitively perceptible forms. This makes visible where interaction occurs, how it works, and how well it performs.
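As a rough illustration of how raw interaction events might be condensed into a per-zone signal, consider the sketch below. It uses a plain aggregation as a stand-in for the AI step. The `Event` fields, the zone names, and the `summarize` function are assumptions made for this example and do not come from a specific system.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """Hypothetical interaction event reported by a sensor or log."""
    zone: str       # where the interaction happened
    kind: str       # e.g. "enter" or "gesture"
    dwell_s: float  # how long the visitor stayed engaged, in seconds


def summarize(events):
    """Condense raw events into an event count and mean dwell time per zone."""
    totals = defaultdict(lambda: {"events": 0, "dwell_s": 0.0})
    for event in events:
        totals[event.zone]["events"] += 1
        totals[event.zone]["dwell_s"] += event.dwell_s
    return {
        zone: {"events": t["events"], "mean_dwell_s": t["dwell_s"] / t["events"]}
        for zone, t in totals.items()
    }
```

A summary like this could feed a spatial visualization, for instance by scaling a highlight in each zone by its mean dwell time.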

The illustration underlines the transition from pure data collection to semantically connected insight. Through AI-driven data fusion, a situational overview emerges that makes brand communication measurable, traceable, and controllable. Instead of isolated numbers, a visual, shared understanding develops among design, engineering, and management teams. This enables faster decision-making, shorter feedback loops, and early risk detection. Scientifically, this approach marks the shift from “information” to “intelligence in context” – a foundation for data-adaptive brand strategies in the age of mixed reality and generative AI.

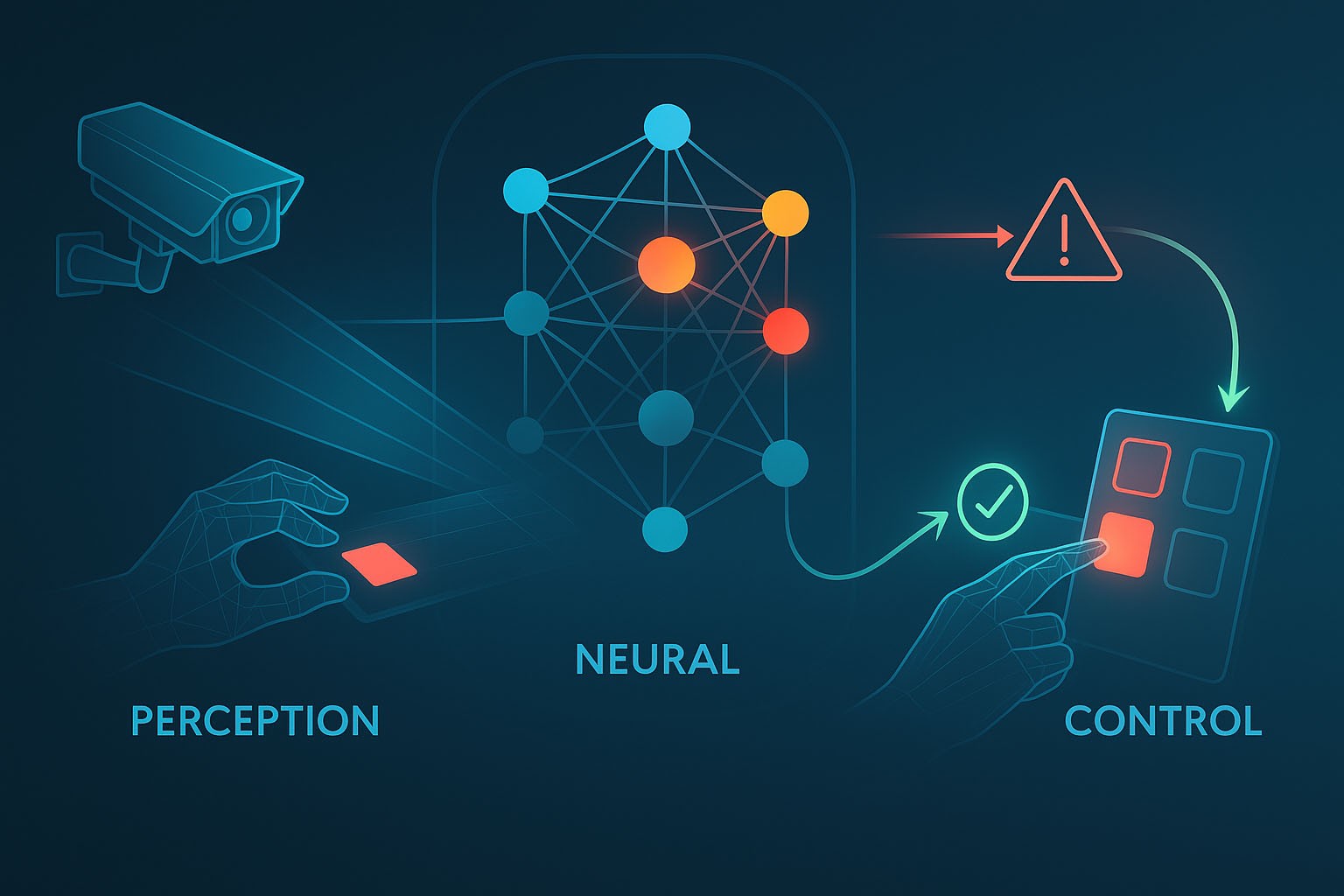

From Perception to Control

The goal is not just support but active assistance. AI detects deviations, suggests corrections, and blocks risky steps. This prevents errors, protects systems, and makes results more reliable.

- Automated checks before sensitive actions

- Preventive cues for incorrect order or inappropriate tools

- Recommendations based on proven patterns and data

Image: Schematic representation of an AI-based assistance system for preventive action control. Concept visualization: Ulrich Buckenlei / Visoric Research 2025

The visualization illustrates the transition from reactive support to proactive assistance. While conventional systems merely respond to user actions, the AI model detects patterns and deviations as they emerge. It captures sensor inputs such as gestures, process steps, or tool movements and compares them to stored ideal sequences. When a deviation or risk is identified, the system provides preventive cues or automatically locks critical steps. This creates a mechanism that understands, evaluates, and guides human behavior without unduly limiting autonomy.
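The comparison against a stored ideal sequence can be sketched in a few lines. This is a simplified, rule-based illustration and not a learned model: the step names, the `StepCheck` type, and the `check_step` function are hypothetical and chosen only for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StepCheck:
    allowed: bool  # False means the step is locked
    cue: str       # preventive hint for the user, empty if none is needed


def check_step(ideal_sequence, completed, attempted, critical_steps):
    """Compare an attempted step with the next step of the ideal sequence.

    Out-of-order steps always produce a cue; they are locked only if the
    attempted step is marked as critical.
    """
    if len(completed) >= len(ideal_sequence):
        return StepCheck(False, "Sequence already complete.")
    expected = ideal_sequence[len(completed)]
    if attempted == expected:
        return StepCheck(True, "")
    cue = f"Expected '{expected}' before '{attempted}'."
    return StepCheck(attempted not in critical_steps, cue)


# Hypothetical sequence for a donation terminal.
IDEAL = ["insert_card", "confirm_amount", "donate"]
CRITICAL = {"donate"}
```

With these assumptions, attempting `donate` right after `insert_card` would be locked and answered with a cue, while a non-critical step taken out of order would only trigger the cue. A production system would presumably replace the fixed list with learned patterns and probabilistic scoring.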

The diagram highlights the paradigm shift in human–machine interaction: AI systems no longer act as passive observers but as active partners in decision-making. Using learning models and probabilistic evaluation, they can predict risks and suggest optimizations. In industrial, medical, or safety-critical contexts, this leads to greater process reliability, lower error rates, and more efficient decision-making. Scientifically, this represents the beginning of a new interaction logic, one where machines not only perceive but share responsibility for human safety and quality.

When Impact Becomes Visible

The combination of the real world and AI-generated overlays brings a new quality of communication. Instead of abstract numbers, the action itself becomes visible. This strengthens understanding within teams and builds trust among stakeholders.

Video: Interactive donation experience in action. The gesture triggers visible impact. Source: MISEREOR / Kolle Rebbe / Editorial: Visoric GmbH

The cinematic perspective shows how a message becomes an experience. Brands gain speed and quality. People gain orientation and trust.

Experience Science. With AI, 3D, and Spatial Computing.

Practice shows how closely technology, perception, and communication are connected. AI, real-time data, and immersive visualization make the invisible visible and the complex understandable.

As a Munich-based expert team, Visoric supports this evolution at the intersection of technology, communication, and perception. We translate data, systems, and processes into spatially tangible applications for branding, service, and education.

- Development of empathic brand experiences using AI, 3D, and XR

- Integration of real-time data and computer vision into interactive installations

- Scalable solutions for campaigns, retail, events, and social impact

Image: Over 15 years of digital innovation. The Munich team for 3D, AI, and XR. Visualization: Visoric GmbH 2025

Source: Visoric Development Lab | Photo and text: Ulrich Buckenlei

In practice, attention to detail is what matters. Camera perspectives, lighting, and millisecond timing shape the experience. Only when engineering precision and design speak the same language does what Visoric calls “perceptive continuity” emerge – the seamless interplay of real perception and digital intelligence.

Such projects require interdisciplinary thinking. Designers, developers, and engineers work on systems that treat perception as a data source. New standards emerge for campaigns, retail, and education – systems that are not just operated but experienced. Visoric supports this transformation as a partner for companies shaping the next level of digital experiences.

Shaping the Future of Empathic Brand Experiences Together

Would you like to explore the potential of AI-driven, immersive experiences for your brand?

Talk to the Visoric team in Munich. Together, we develop solutions that connect technology, design, and practice – making impact visible, secure, and scalable.

- Consulting, strategy, and prototyping

- Integration of AI, XR, and real-time data into your environment

- Long-term support, scaling, and technical evolution

Now is the right time to use perception as the new interface between people, brands, and machines.

We look forward to your ideas – and to the next level of effective digital experiences.

Contacts:

Ulrich Buckenlei (Creative Director)

Mobile: +49 152 53532871

Email: ulrich.buckenlei@visoric.com

Nataliya Daniltseva (Project Manager)

Mobile: +49 176 72805705

Email: nataliya.daniltseva@visoric.com

Address:

VISORIC GmbH

Bayerstraße 13

D-80335 Munich