Ultrathin haptics make digital textures tangible: A futuristic finger model demonstrates VoxeLite – a new technology that precisely simulates touch on smooth displays.

Image: © Ulrich Buckenlei | VISORIC GmbH 2025

Digital surfaces are beginning to change. For a long time, screens were purely visual and auditory media, but current research shows how the sense of touch can also be integrated into digital interaction. The VoxeLite research project at Northwestern University is considered one of the first real steps in this direction. The technology generates tangible microstructures on completely smooth displays and makes digital content physically perceptible.

What was previously only imaginable in science fiction films – such as feeling a material, a shape or a micro-pattern through a digital surface – is now becoming tangible. VoxeLite’s extremely thin, flexible haptic interface wraps around the fingertip like a second skin and uses microscopic actuator fields to create precise, dynamic friction patterns. In experiments, test participants have already identified the resulting tactile textures with high accuracy.

This development marks a decisive moment for the next generation of interfaces. It combines scientific precision with everyday interaction and paves the way for applications far beyond traditional touchscreens – from smart industry to robotics and immersive learning and training environments.

To understand how VoxeLite creates physical textures on digital surfaces, it is worth taking a look at the fundamental principle behind the technology: the targeted manipulation of friction and microstructure on the fingertip, triggered by microscopic actuators.

Invisible interaction begins with microstructures

The principle of digital touch does not arise on the display but on the finger itself. Only when the skin perceives minimal changes in friction, structure or surface pressure does the sensation of a real texture occur. VoxeLite builds on exactly this: The technology transforms movement of the fingertip into machine-readable signals and generates tactile patterns that the brain interprets as real materials.

Before a digital surface is perceived as “rough”, “grainy” or “soft”, a highly precise process takes place in the background. Microactuators change friction within milliseconds, modulate tiny force impulses and create microstructures that adapt seamlessly to finger movement. The fingertip becomes the center of interaction – not the display.

The following illustration shows the key elements from which tactile digital textures emerge.

- Microscopic Actuators – tiny friction modulators that increase or decrease friction at precise points.

- Fingertip Resolution – the spacing of actuators is based on the human tactile resolution of 1–2 mm.

- Dynamic Friction Patterns – microstructures change in real time and follow every finger movement.
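The list above implies a resampling step: a digital texture defined at fine resolution has to be reduced to one friction command per actuator at a 1–2 mm pitch. The following Python sketch shows one way to do that by block averaging. It assumes a rectangular texture map whose values are normalized friction commands between 0 and 1, a uniform texel size and a pitch that is a whole multiple of it; the function name `texture_to_actuator_grid` is hypothetical and not part of any published VoxeLite software.

```python
from typing import List

def texture_to_actuator_grid(texture: List[List[float]], texel_mm: float,
                             pitch_mm: float) -> List[List[float]]:
    """Block-average a fine friction map onto a coarser actuator grid.

    texture  -- 2D list of normalized friction commands (0 = low, 1 = high)
    texel_mm -- edge length of one texture cell in millimetres
    pitch_mm -- spacing between neighbouring actuators in millimetres
    """
    step = round(pitch_mm / texel_mm)  # texels per actuator along each axis
    if step < 1:
        raise ValueError("actuator pitch must cover at least one texel")
    rows, cols = len(texture), len(texture[0])
    grid = []
    # Walk the texture in step x step blocks; incomplete edge blocks are dropped.
    for r0 in range(0, rows - step + 1, step):
        row = []
        for c0 in range(0, cols - step + 1, step):
            block = [texture[r][c]
                     for r in range(r0, r0 + step)
                     for c in range(c0, c0 + step)]
            row.append(sum(block) / len(block))
        grid.append(row)
    return grid

# A 2 mm x 2 mm checkerboard sampled at 0.5 mm, mapped to a 1 mm actuator pitch.
checker = [[0, 0, 1, 1],
           [0, 0, 1, 1],
           [1, 1, 0, 0],
           [1, 1, 0, 0]]
print(texture_to_actuator_grid(checker, texel_mm=0.5, pitch_mm=1.0))
# [[0.0, 1.0], [1.0, 0.0]]
```

Block averaging discards spatial detail finer than the actuator pitch; finer structure would have to be conveyed over time as the finger moves.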

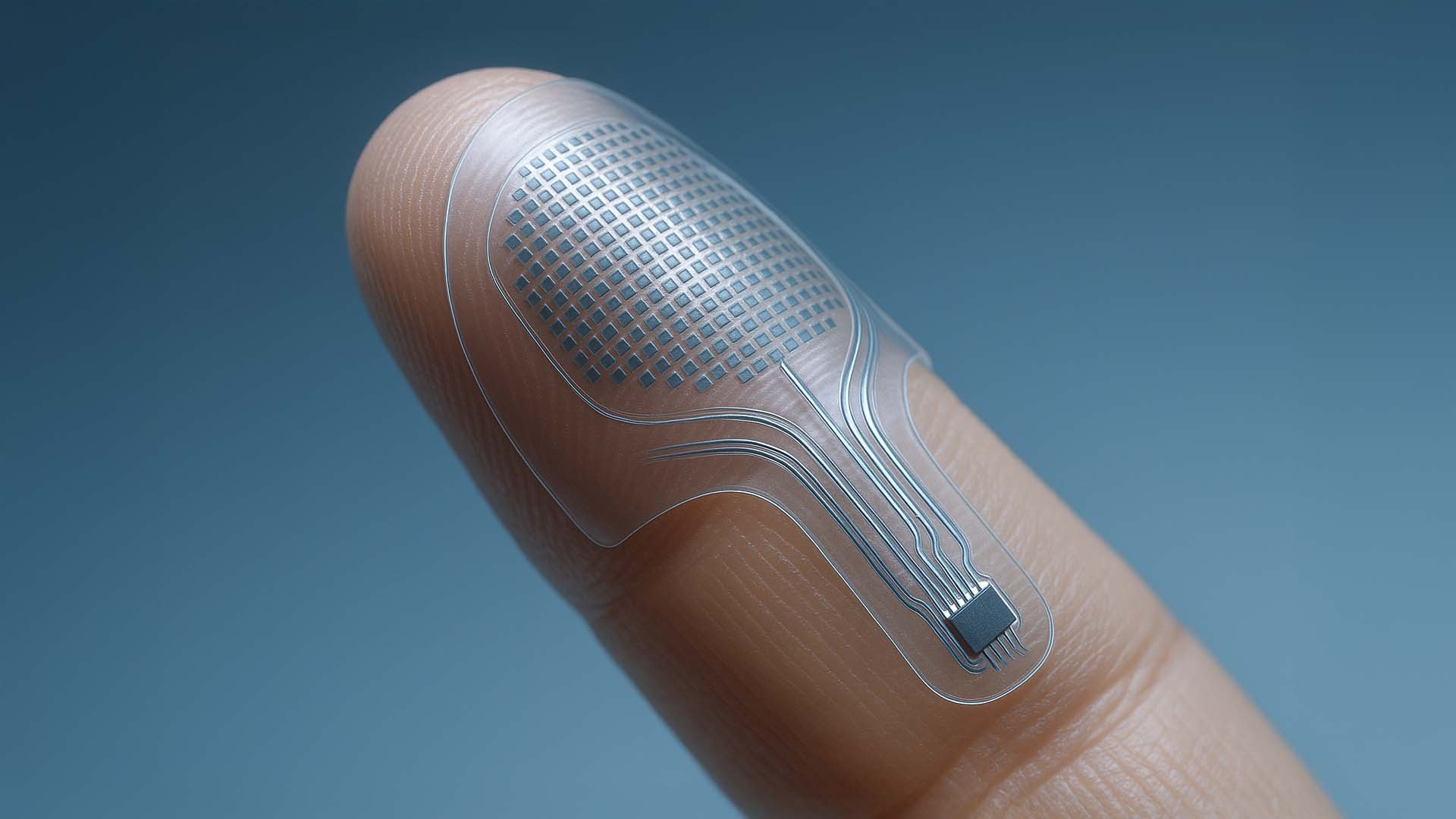

Transparent microactuator layer on the fingertip: The flexible VoxeLite interface reveals a high-density actuator field and integrated ultrathin conductors that precisely modulate friction.

Image: © Ulrich Buckenlei | VISORIC GmbH 2025

The illustration shows an ultrathin, transparent haptic layer that wraps around the fingertip like a second skin. The square microstructure forms a dense field of electrostatic actuators that covers the area where human sensitivity is highest. Fine conductive traces transmit high-frequency signals to a tiny control module – flexible, barely visible yet powerful enough to modify friction within milliseconds. Only this precise fusion of material and electronics makes it possible for flat displays to become tangible.
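Why can an electrostatic layer change friction at all? A common simplified model of electroadhesion treats the finger and electrode as a parallel-plate capacitor: the applied voltage adds an attractive force to the normal force, and Coulomb friction scales with the sum. The sketch below assumes that textbook model, F_f = μ(F_n + F_e) with F_e = ε0·εr·A·V²/(2d²). It is not the published VoxeLite device model, and the parameter values in the example are hypothetical placeholders.

```python
EPSILON_0 = 8.854e-12  # vacuum permittivity in F/m

def electrostatic_force_n(voltage_v: float, area_m2: float,
                          gap_m: float, eps_r: float) -> float:
    """Parallel-plate attraction F_e = eps0 * eps_r * A * V^2 / (2 * d^2)."""
    return EPSILON_0 * eps_r * area_m2 * voltage_v ** 2 / (2 * gap_m ** 2)

def friction_force_n(normal_force_n: float, voltage_v: float, mu: float,
                     area_m2: float, gap_m: float, eps_r: float) -> float:
    """Coulomb friction with the electrostatic force added to the normal load."""
    f_e = electrostatic_force_n(voltage_v, area_m2, gap_m, eps_r)
    return mu * (normal_force_n + f_e)

# Hypothetical node: 1 mm^2 electrode, 10 micrometre effective gap, eps_r = 3.
ratio = (electrostatic_force_n(200.0, 1e-6, 10e-6, 3.0)
         / electrostatic_force_n(100.0, 1e-6, 10e-6, 3.0))
print(round(ratio, 6))  # 4.0: doubling the voltage quadruples F_e
```

In this model the added force grows with the square of the voltage. The actual magnitude depends strongly on the effective gap and the dielectric properties of skin and coating, which the sketch does not model.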

In the next section, we explore how these microstructural signals merge into full digital textures and why temporal resolution plays a crucial role.

How micro-impulses form complete digital textures

Digital haptics begins at the level of individual microactuators, but it is the temporal sequence of their signals that creates the sensation of a real surface. The human sense of touch does not interpret changes in friction in isolation but as continuous patterns. VoxeLite uses this principle by combining hundreds of micro-impulses per second into structured “texture frames” that adapt precisely to finger movement.

When the finger moves, tiny waves of friction arise on the skin – similar to grain, fiber orientation or fine material ridges. If these impulses change quickly enough, they blend into a continuous tactile impression that feels like an actual physical surface. The technological challenge lies not only in spatial but above all in temporal resolution.
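The link between spatial and temporal resolution can be made concrete with a standard relation from texture perception: a finger sliding at speed v over a pattern with spatial period λ experiences a temporal frequency f = v / λ. The sketch below applies it to the 800 Hz frame rate mentioned in this article, under the simplifying assumptions that each frame updates friction once and that a periodic pattern needs at least two frames per cycle (the Nyquist criterion) to be represented at all. The function names are illustrative.

```python
def texture_frequency_hz(finger_speed_mm_s: float, period_mm: float) -> float:
    """Temporal frequency felt when sliding over a periodic texture: f = v / lambda."""
    return finger_speed_mm_s / period_mm

def frames_per_cycle(frame_rate_hz: float, texture_hz: float) -> float:
    """Number of friction updates that fall within one texture period."""
    return frame_rate_hz / texture_hz

def is_renderable(finger_speed_mm_s: float, period_mm: float,
                  frame_rate_hz: float = 800.0) -> bool:
    """Nyquist check: at least two updates per texture cycle are required."""
    return texture_frequency_hz(finger_speed_mm_s, period_mm) <= frame_rate_hz / 2

# A 1 mm grating explored at 100 mm/s produces 100 Hz, i.e. 8 frames per cycle.
f = texture_frequency_hz(100.0, 1.0)
print(f, frames_per_cycle(800.0, f), is_renderable(100.0, 1.0))
# 100.0 8.0 True
# The same grating at 500 mm/s would need 500 Hz, above the 400 Hz limit.
print(is_renderable(500.0, 1.0))
# False
```

Two frames per cycle is only a theoretical lower bound; a recognizable waveform shape needs more samples, so fast strokes over fine textures are where temporal resolution becomes the limiting factor.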

To visualize this process, the following infographic shows the key layers from which VoxeLite generates tactile digital textures.

- Micro Actuator Nodes – tiny points that locally and precisely modulate friction.

- Texture Frames – sequences of microstructures generated at up to 800 Hz.

- Somatosensory Interpretation – the interplay of various skin receptors that perceive these patterns as a “surface”.
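The idea of texture frames can be sketched as a fixed-rate loop: at each frame, read the finger position, add each node's offset on the fingertip and look up the friction value of the virtual texture at that point. The Python below assumes a sinusoidal grating as the virtual texture, a finger path supplied as a function of time and normalized friction commands between 0 and 1. At 800 Hz, each frame lasts 1/800 s = 1.25 ms. Names such as `render_frames` are hypothetical.

```python
import math
from typing import Callable, List, Tuple

Point = Tuple[float, float]               # (x, y) in millimetres
Texture = Callable[[float, float], float]

def sinusoidal_grating(period_mm: float) -> Texture:
    """Virtual texture: friction varies sinusoidally along x, range 0..1."""
    def friction(x: float, y: float) -> float:
        return 0.5 + 0.5 * math.sin(2 * math.pi * x / period_mm)
    return friction

def render_frames(finger_path: Callable[[float], Point],
                  node_offsets: List[Point], texture: Texture,
                  frame_rate_hz: float = 800.0,
                  duration_s: float = 0.01) -> List[List[float]]:
    """Produce one list of per-node friction commands per frame."""
    frames = []
    n_frames = int(round(duration_s * frame_rate_hz))
    for k in range(n_frames):
        t = k / frame_rate_hz                     # frame timestamp in seconds
        fx, fy = finger_path(t)                   # current fingertip position
        frames.append([texture(fx + dx, fy + dy)  # sample under each node
                       for dx, dy in node_offsets])
    return frames

# Finger sliding along x at 100 mm/s over a 1 mm grating, two nodes 0.5 mm apart.
frames = render_frames(lambda t: (100.0 * t, 0.0),
                       node_offsets=[(0.0, 0.0), (0.5, 0.0)],
                       texture=sinusoidal_grating(1.0))
print(len(frames), [round(v, 2) for v in frames[2]])
# 8 [1.0, 0.0]
```

Because the nodes move with the finger, a static virtual texture turns into a time-varying friction signal at each node; the two nodes here feel the same grating half a period apart.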

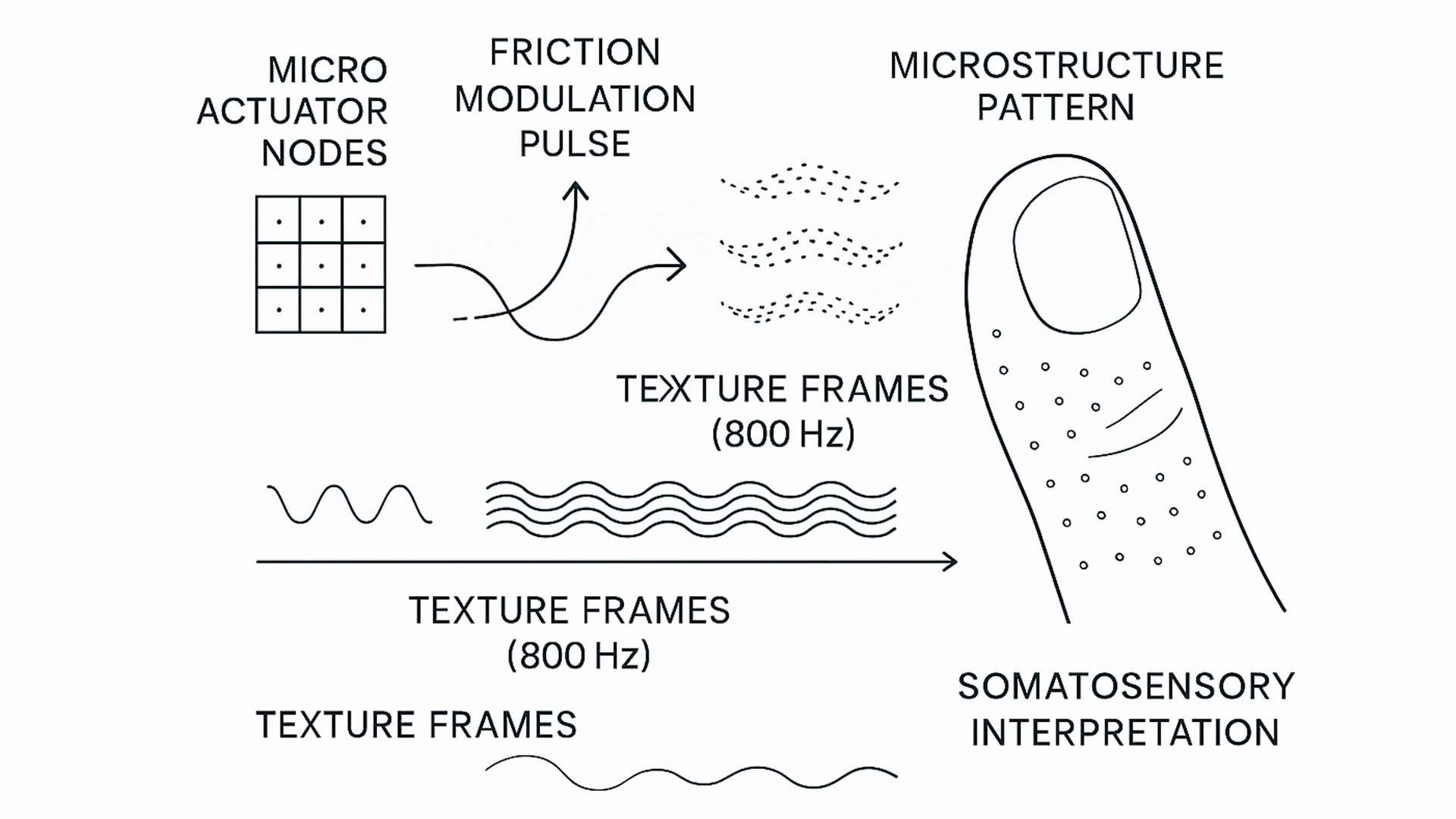

Infographic: How digital textures emerge – from individual microactuators to friction impulses and complete texture patterns interpreted by the human sense of touch as real material properties.

Image: © Ulrich Buckenlei | VISORIC GmbH 2025

The graphic shows a simplified grid of microactuators on the left, forming the foundation of all haptic effects. Each point can minimally increase or decrease friction on the skin. The wavy lines illustrate how individual impulses are modulated before merging into a pattern. In the center, a microstructure emerges: a kind of “haptic frame” reminiscent of grain, texture or directional structure.

Temporal sequencing on the horizontal axis is crucial. VoxeLite produces such frames at up to 800 hertz – fast enough that the brain perceives them not as individual impulses but as continuous material qualities. The right side of the infographic shows a stylized fingertip with dots indicating various mechanoreceptors. These receptors (such as RA1 and SA1) respond to different patterns: vibrations, fine textures or pressure gradients. Only the combination of these signals enables a smooth digital surface to feel like wood, fabric or stone.

In the next section, we go a step further and look at how VoxeLite generates not only static textures but dynamic material effects – such as directional patterns, edges or shifting surfaces that change during finger movement.

When surfaces come alive: Dynamic textures in real time

Static microstructures can already produce remarkably precise material impressions, but the full potential of VoxeLite becomes apparent only when the texture actively responds during finger movement. The sense of touch reacts not only to form but especially to changes: directional shifts, edges, transitions and microscopic force variations. This is where VoxeLite’s dynamic texture generation comes into play. The system modulates friction not as a fixed state but as a continuous pattern that is renewed hundreds of times per second.

This creates the sensation of “living surfaces”. The haptics change depending on direction, speed and pressure of the finger. A pattern can rise against the movement, run like a groove to the left or change abruptly when crossing a digital “edge”. These effects are not pre-rendered but generated in real time – enabling entirely new forms of adaptive interaction for training, design, simulation and accessibility.

- Directional Texture Pattern – tactile patterns that intensify or weaken along a specific direction.

- Edge Modulation Zone – localized friction changes that simulate a clearly defined edge.

- Dynamic Friction Shift – fluid friction variations that adapt continuously to finger movement.
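Directional patterns and edges can both be expressed as a friction command computed from the finger's current position and velocity. The sketch below assumes a straight virtual grain at a given angle (low friction along it, higher across it) and a vertical virtual edge at `edge_x` that adds a friction boost inside a narrow band. All parameter values are hypothetical, and recomputing the command every frame from the latest velocity is what would make the friction shift follow the finger.

```python
import math

def directional_friction(vx: float, vy: float, grain_angle_rad: float,
                         base: float = 0.3, gain: float = 0.4) -> float:
    """Low friction when moving along the grain, higher when moving across it."""
    speed = math.hypot(vx, vy)
    if speed == 0.0:
        return base                      # no motion, no directional cue
    ux, uy = math.cos(grain_angle_rad), math.sin(grain_angle_rad)
    alignment = abs(vx * ux + vy * uy) / speed   # 1 = along grain, 0 = across
    return base + gain * (1.0 - alignment)

def edge_boost(x: float, edge_x: float, width_mm: float = 0.5,
               boost: float = 0.5) -> float:
    """Extra friction inside a narrow band around a virtual edge at x = edge_x."""
    return boost if abs(x - edge_x) <= width_mm / 2 else 0.0

def friction_command(x: float, vx: float, vy: float,
                     grain_angle_rad: float, edge_x: float) -> float:
    """Combine both effects and clamp to the normalized range 0..1."""
    value = directional_friction(vx, vy, grain_angle_rad) + edge_boost(x, edge_x)
    return min(1.0, value)

# Grain along x (angle 0), virtual edge at x = 5 mm, finger speed 50 mm/s.
print(friction_command(0.0, 50.0, 0.0, 0.0, edge_x=5.0))  # along the grain
print(friction_command(0.0, 0.0, 50.0, 0.0, edge_x=5.0))  # across the grain
print(friction_command(5.0, 0.0, 50.0, 0.0, edge_x=5.0))  # across, on the edge
```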

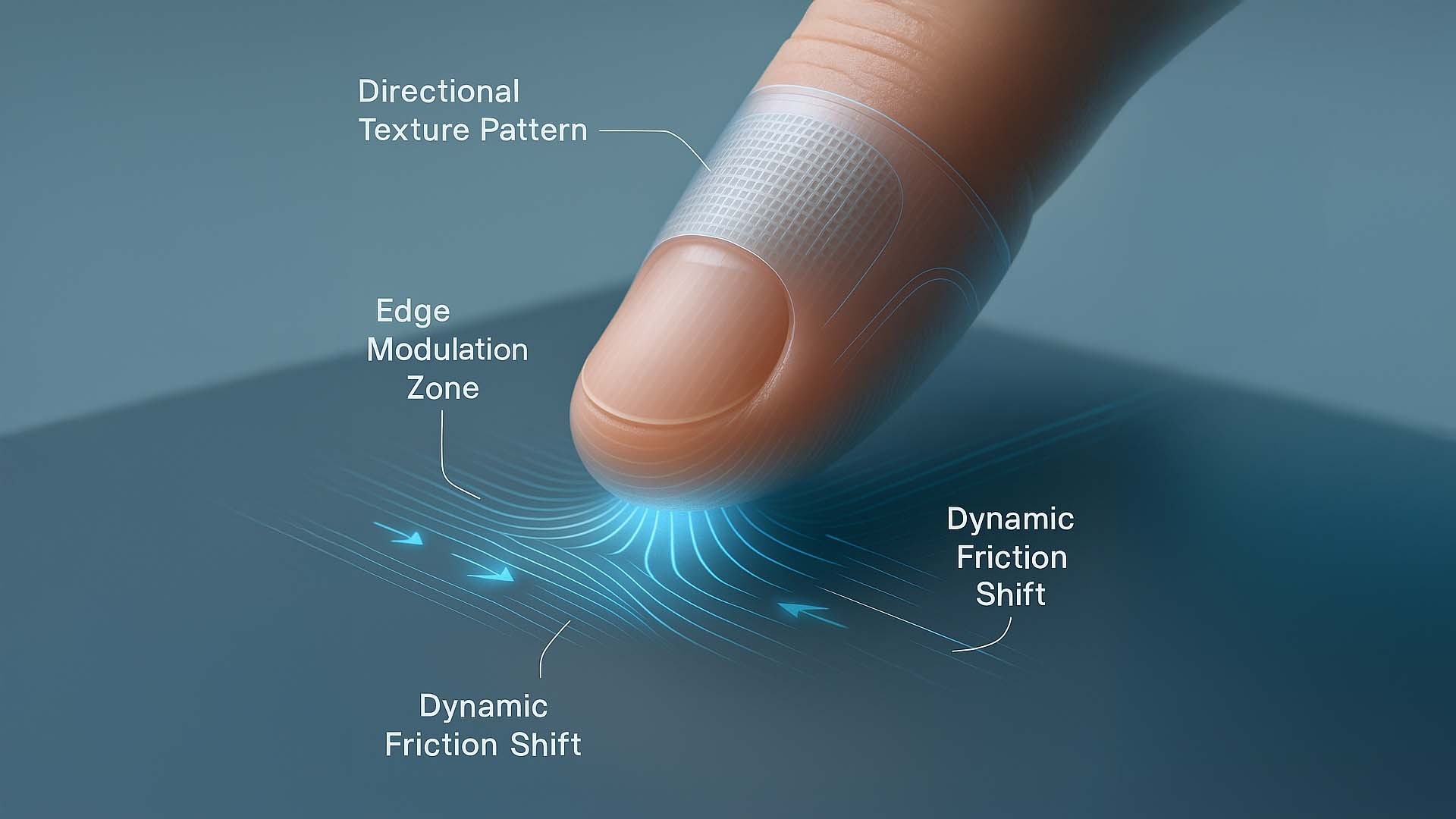

Dynamic haptics in action: The illustration shows how VoxeLite generates directional texture patterns, tangible edges and fluid friction changes during finger movement. The result is a surface that feels alive and context-aware rather than static.

Image: © Ulrich Buckenlei | VISORIC GmbH 2025

In the upper section of the illustration, the Directional Texture Pattern is visible: a fine network of micro-patterns that changes along the direction of movement. These patterns are created by modulated microactuators that selectively increase or decrease friction – similar to the sensation of moving your finger across wood grain or a ridged metal edge.

Below the finger on the left is the Edge Modulation Zone. VoxeLite generates an abrupt tactile change in the friction profile that feels like a real physical edge. The system can place this effect dynamically, for example around objects, buttons or boundaries.

On the right and bottom, wavy lines visualize the Dynamic Friction Shift. These friction variations follow the finger in real time and create a sensation of movement within the surface itself. This gives digital materials a kind of haptic fluidity for the first time – comparable to fabric that shifts when you slide your hand across it or a surface that conveys directional information.

The next section explores the interaction models these findings enable. Research projects like VoxeLite suggest that digital surfaces may one day become not only visible but intuitively tangible.

Future interactions: How tangible interfaces may emerge

The principles demonstrated in VoxeLite not only show how digital textures are formed but also open new possibilities for interactions that extend far beyond classic touch surfaces. When friction, edges and directions become digitally shapeable, displays could theoretically become tangible control panels – without any physical buttons or structures.

This perspective fundamentally changes the role of interfaces. Instead of providing purely visual feedback, future systems could convey information through the sense of touch. Buttons could feel like small bumps even though the surface remains flat. Tools such as sliders, dials or selection fields could feature tactile guide lines. Even complex forms like objects, material transitions or resistance zones could be simulated haptically, allowing users to feel structures that do not physically exist.

- Touch Surface Feedback – a surface that remains smooth yet responds with tactile cues as soon as the finger makes contact.

- Virtual Button – a digital control element that simulates a pressure zone or counterforce when pressed.

- Object Interaction – dynamic shapes or tools that adapt their haptic properties to movement and context.
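A virtual button as described above can be written as a friction map with a raised zone. The sketch follows the article's description, in which locally intensified friction makes the button perceptible. The radius, rim width and friction levels are hypothetical values, and the raised-cosine rim is one common way to avoid an abrupt step, not a documented VoxeLite design choice.

```python
import math

def virtual_button_friction(x: float, y: float, cx: float, cy: float,
                            radius_mm: float = 4.0, rim_mm: float = 1.0,
                            base: float = 0.2, peak: float = 0.8) -> float:
    """Normalized friction command for a round virtual button centred at (cx, cy).

    Inside the button the friction is raised to `peak`; across the rim it
    falls smoothly (raised-cosine profile) back to the `base` level of the
    surrounding flat surface, so the boundary reads as a contour.
    """
    d = math.hypot(x - cx, y - cy)
    inner = radius_mm - rim_mm
    if d <= inner:
        return peak
    if d >= radius_mm:
        return base
    s = (d - inner) / rim_mm   # 0 at the inner edge of the rim, 1 at the outer edge
    return base + (peak - base) * 0.5 * (1 + math.cos(math.pi * s))

# Scan outward from the centre of a button at (0, 0).
for x in (0.0, 3.5, 6.0):
    print(x, round(virtual_button_friction(x, 0.0, 0.0, 0.0), 3))
```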

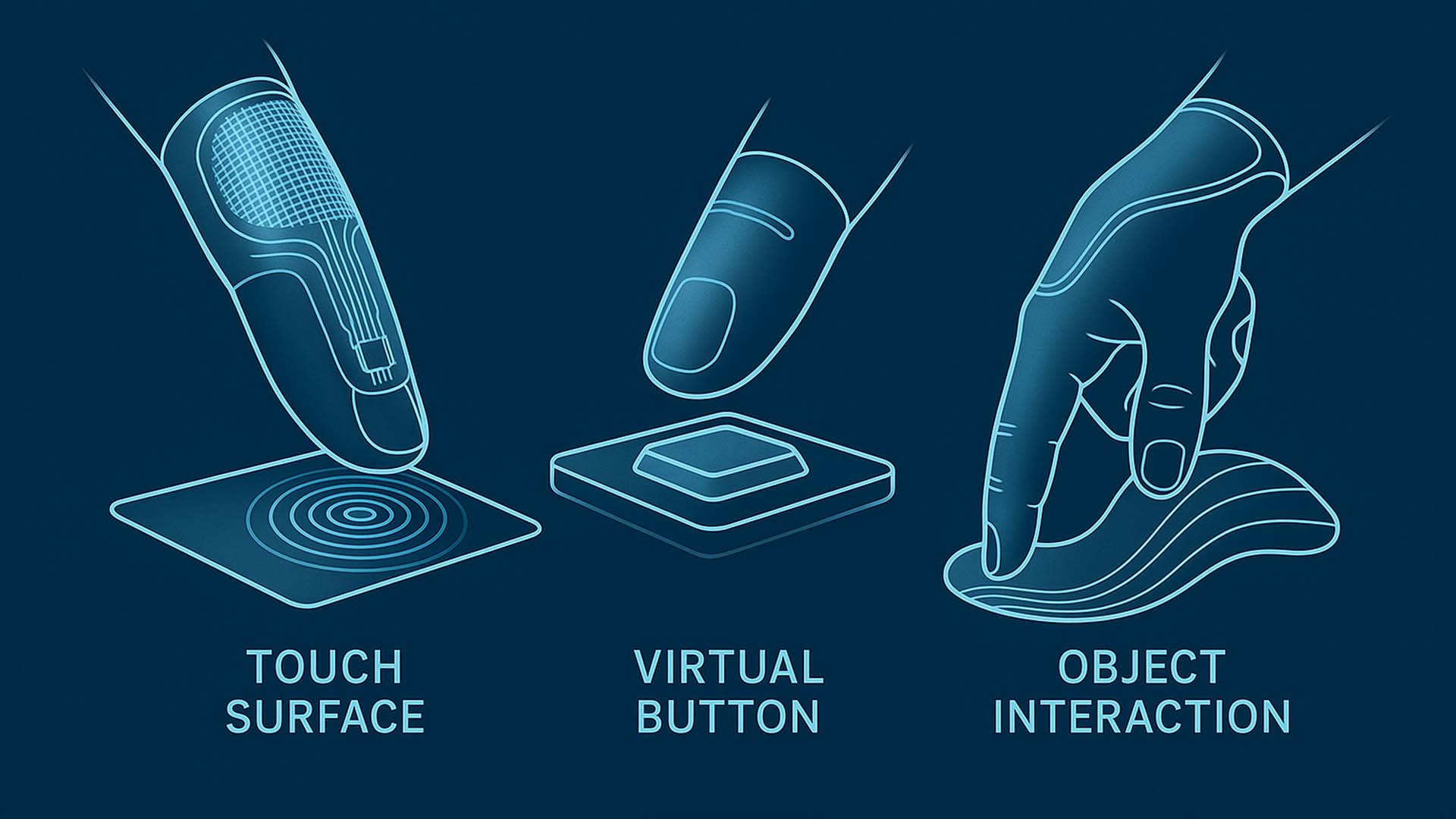

Future haptic interfaces: The graphic shows three fundamental interaction concepts derived from VoxeLite principles – tactile surfaces, virtual buttons and dynamic object shapes that adapt to movement and context.

Image: © Ulrich Buckenlei | VISORIC GmbH 2025

The infographic visualizes three exemplary application scenarios that future haptic interfaces could enable. On the left, the Touch Surface concept shows a smooth surface generating subtle circular waves as tactile feedback when the fingertip touches it. These waves symbolize modulated friction impulses that could feel like a vibrating or textured zone.

In the center is the Virtual Button concept. Although the surface remains flat, a locally intensified friction pattern creates a tactile pressure sensation. The illustration shows how a digital button could feel raised and responsive – essentially a haptic click without mechanical components.

On the right, the graphic shows Object Interaction. A finger interacts with an abstract soft shape representing dynamic, adaptive structures: virtual tools or materials that shift in real time when touched. Such effects could one day allow users to feel shapes, edges, tool traces or even 3D geometries.

The next section explores how these concepts could be extended for training, simulation, accessibility or industrial interfaces that provide not only visual but tactile orientation.

When digital touch opens new application worlds

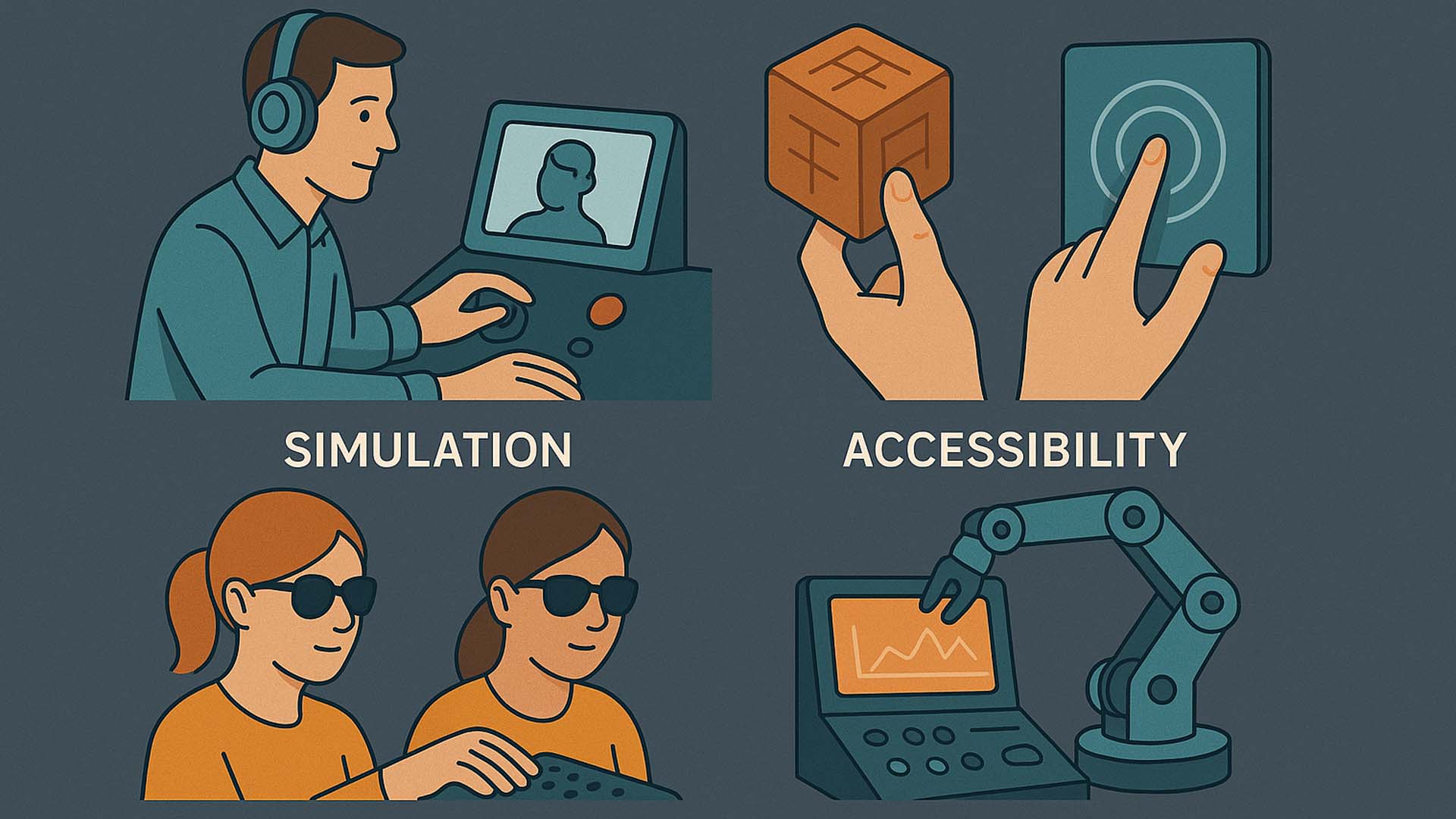

While VoxeLite currently exists primarily as a research prototype, a look at potential fields of application reveals how much potential lies in precisely perceivable digital surfaces. Whether in training, accessibility, simulation or industrial control – wherever humans need to perceive information not only visually but also physically, entirely new forms of interaction become possible. The following illustration summarizes four core scenarios in which tactile interfaces could transform daily life and work in the coming years.

Four future scenarios for haptic interfaces: Simulation, accessibility, training and industrial control. The illustration shows how tactile digital surfaces open new paths of interaction – from realistic learning environments to accessible information systems.

Image: © Ulrich Buckenlei | VISORIC GmbH 2025

The graphic is divided into four distinct areas, each representing a key scenario in which digital haptics provides significant value:

- Simulation – Whether medical education, machine operation or emergency training: real haptic feedback can make learning safer, more precise and more measurable. The illustration depicts a person using a console to perform simulated operations or workflows – supported by tactile parameters such as resistance, texture or vibration patterns. This creates a learning environment close to real conditions yet free of real-world risks.

- Accessibility – For people with visual impairments, tactile digital surfaces could open an entirely new form of information perception. The image shows two users exploring a tactile interface that conveys text, patterns or directional cues. Haptic pixels replace visual elements and make digital content immediately understandable – one of the most promising visions of the next interface generation.

- Training – Digital haptics enables realistic interaction without complex hardware or real materials. From manual skills to surgical movements, tactile simulations form an intermediate step between practice and reality. The illustration shows a haptic training system where users feel virtual objects and learn movement sequences supported by dynamic friction patterns or tangible contours.

- Industrial Interfaces – Precise tactile signals could revolutionize machine operation. The image shows a robotic system whose control panel includes haptic feedback points. Operators could perceive states or warnings not only visually but also physically – an advantage in noisy environments or when visual attention is occupied. Operation becomes more intuitive and robust because relevant information is conveyed directly through the fingertips.

What all these scenarios share is the idea that digital information will no longer be consumed solely visually or auditorily. Instead, it becomes a physical sensation. VoxeLite provides the technological foundation for this: an ultrathin interface capable of representing the smallest signals with high precision. This combination creates a new interaction paradigm – one that does not replace human senses but extends them.

The next section looks at the technological steps needed to move VoxeLite and similar technologies out of the laboratory and into real systems such as simulators, remote control stations or accessible information systems.

When research becomes technology – VoxeLite’s path into real systems

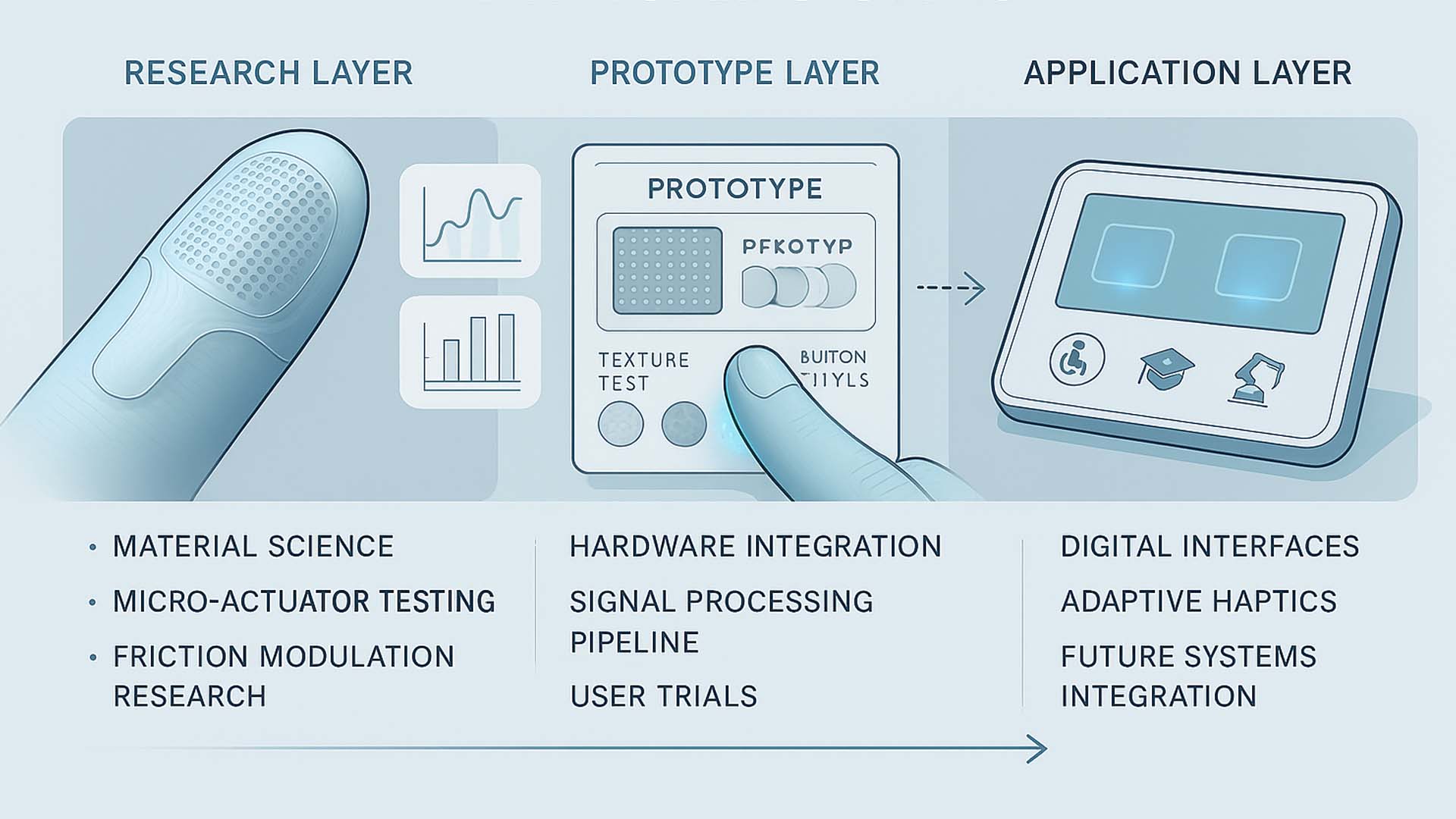

Although VoxeLite still exists in the laboratory, it is already clear which technological steps are necessary to turn an experimental haptic interface into a fully functional interaction system. Development does not proceed linearly but across three coordinated layers: fundamental research, prototyping and system integration. The following infographic shows this evolution – illustrating how individual microstructures can eventually become complete, functional interfaces.

From research to application: The graphic illustrates three developmental stages of haptic technologies – from initial friction experiments (Research Layer) to early test modules (Prototype Layer) to future interfaces where tactile signals are integrated into everyday devices.

Image: © Ulrich Buckenlei | VISORIC GmbH 2025

The illustration divides technological progress into clear layers. On the left is the Research Layer, where microscopic actuators are tested and material properties analyzed. In the center is the Prototype Layer, showing how individual elements are combined into functional test devices. On the right is the Application Layer, representing how tactile interfaces could one day be integrated into real products and work environments.

- Research Layer – This section shows how scientific foundations are established. Finger models, actuator grids and schematic friction diagrams document how precise signals are generated and measured. This is where the fundamentals are created: material tests, friction modulation and understanding which patterns humans can distinguish.

- Prototype Layer – In the middle section, early hardware modules assembled from research components become visible. They enable user studies, latency measurements and testing of signal forms. This is where it is decided whether a technology is repeatable, stable and ergonomic enough to transition into real systems.

- Application Layer – The right section symbolically depicts the future: consoles, control panels and assistive systems that use tactile feedback. Whether in accessibility, industry or training, this layer represents the goal of making digital information not only visible or audible but physically meaningful.

The interplay between these layers shows that VoxeLite is not simply a new interface but a platform technology. It can integrate into a wide range of systems and enable new forms of digital perception. Research provides the building blocks, prototypes make them tangible and applications transform them into user-centered functionality.

The next section explores how artificial intelligence could expand these technological foundations, turning fixed textures into adaptive feedback for simulation, remote control, accessibility or new forms of sensory communication. It highlights how tactile digital signals could fundamentally change the way humans interact with machines, tools and information.

When AI amplifies haptic signals – adaptive touch as a new interface

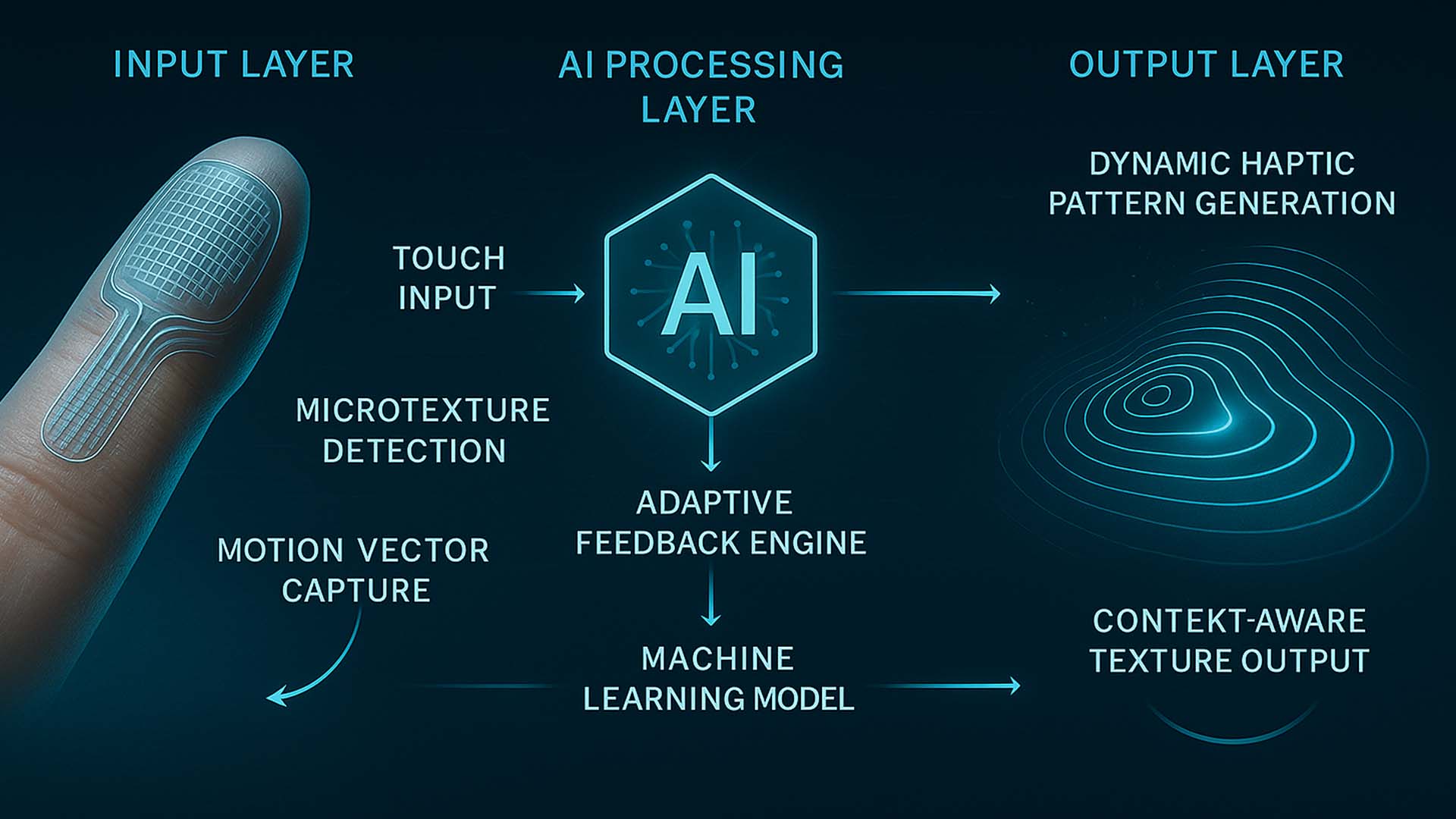

The next evolutionary stage of VoxeLite emerges where haptics meets artificial intelligence. While microstructural actuators generate tactile signals, AI can interpret, adapt and contextually enhance them. A fixed texture becomes a dynamic feedback system that responds to intent, understands movement and interprets touch semantically. The following infographic shows this process in three clearly separated layers – from human input to AI-generated output.

AI-enhanced haptic feedback loop: The graphic visualizes how touch inputs are captured, interpreted by AI and translated into dynamic haptic patterns. On the left, the fingertip with microtexture detection; in the center, AI processing; on the right, the adaptive tactile output.

Image: © Ulrich Buckenlei | VISORIC GmbH 2025

The infographic is divided into three layers that together explain how AI becomes a crucial part of future haptic interfaces. The left side represents the Input Layer, where VoxeLite captures movement, pressure and microstructures at the fingertip. In the center is the AI Processing Layer, analyzing and semantically interpreting these data. The right side shows the Output Layer, where tactile feedback is adapted to context, user intention and environmental factors.

- Input Layer – The graphic shows a finger with a transparent VoxeLite haptic film. The system detects Touch Input, registers Microtexture Detection and captures the exact direction and speed of movement via Motion Vector Capture. This layer is the system’s “sense organ”, providing raw data about user interaction.

- AI Processing Layer – At the center is a hexagonal AI symbol representing machine interpretation of touch. This layer contains algorithms for the Adaptive Feedback Engine and a Machine Learning Model that learns from past interactions. AI identifies patterns, intent and situational changes and decides which haptic pattern to produce next.

- Output Layer – On the right, curved lines represent dynamic tactile signals. The Dynamic Haptic Pattern Generation field stands for synthesizing tactile surfaces, while Context-Aware Texture Output shows how these patterns vary depending on the application – sometimes rougher, sometimes smoother, clearly contoured or subtly guided.

What connects these three layers is a continuous feedback loop: finger movement generates data, AI interprets the data and haptics respond in real time. This loop makes VoxeLite not only a tactile display but an adaptive perception system that understands touch and translates it into meaningful, dynamic feedback.
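The three-layer loop can be sketched in a few functions: the input layer turns timestamped touch samples into motion vectors, the processing layer maps speed, pressure and context to texture parameters, and the output layer returns those parameters for rendering. In this sketch a small rule table stands in for the Adaptive Feedback Engine and Machine Learning Model named in the graphic; the thresholds, context names and the `roughness` parameter are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass
class TouchSample:
    t: float         # timestamp in seconds
    x: float         # fingertip position in millimetres
    y: float
    pressure: float  # normalized contact pressure, 0..1

def motion_vector(prev: TouchSample, cur: TouchSample) -> Tuple[float, float]:
    """Input layer: finite-difference velocity in mm/s between two samples."""
    dt = cur.t - prev.t
    if dt <= 0:
        return (0.0, 0.0)
    return ((cur.x - prev.x) / dt, (cur.y - prev.y) / dt)

# Hypothetical base roughness per application context (normalized 0..1).
BASE_ROUGHNESS = {"wood": 0.6, "fabric": 0.3, "button": 0.8}

def interpret(context: str, speed_mm_s: float, pressure: float) -> Dict[str, float]:
    """Processing layer: a rule-based stand-in for a learned model."""
    roughness = BASE_ROUGHNESS.get(context, 0.5)
    # Hypothetical rule: soften the texture for fast strokes or hard presses.
    if speed_mm_s > 200.0 or pressure > 0.8:
        roughness *= 0.75
    return {"roughness": roughness}

def feedback_loop(samples: List[TouchSample], context: str) -> List[Dict[str, float]]:
    """Output layer: one set of texture parameters per pair of consecutive samples."""
    outputs = []
    for prev, cur in zip(samples, samples[1:]):
        vx, vy = motion_vector(prev, cur)
        outputs.append(interpret(context, math.hypot(vx, vy), cur.pressure))
    return outputs

samples = [TouchSample(0.00, 0.0, 0.0, 0.5),
           TouchSample(0.01, 1.0, 0.0, 0.5),   # about 100 mm/s
           TouchSample(0.02, 4.0, 0.0, 0.5)]   # about 300 mm/s
for params in feedback_loop(samples, "wood"):
    print(params)
```

Replacing `interpret` with a trained model would leave the surrounding loop unchanged, which is the point of separating the three layers.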

The next section explores how AI-supported haptics could evolve into a learning system that improves with use, for example in simulation, remote control or industrial assistance systems. It highlights how intelligent touch experiences could extend human capability and enable new forms of digital collaboration.

When digital haptics and AI merge into a learning system

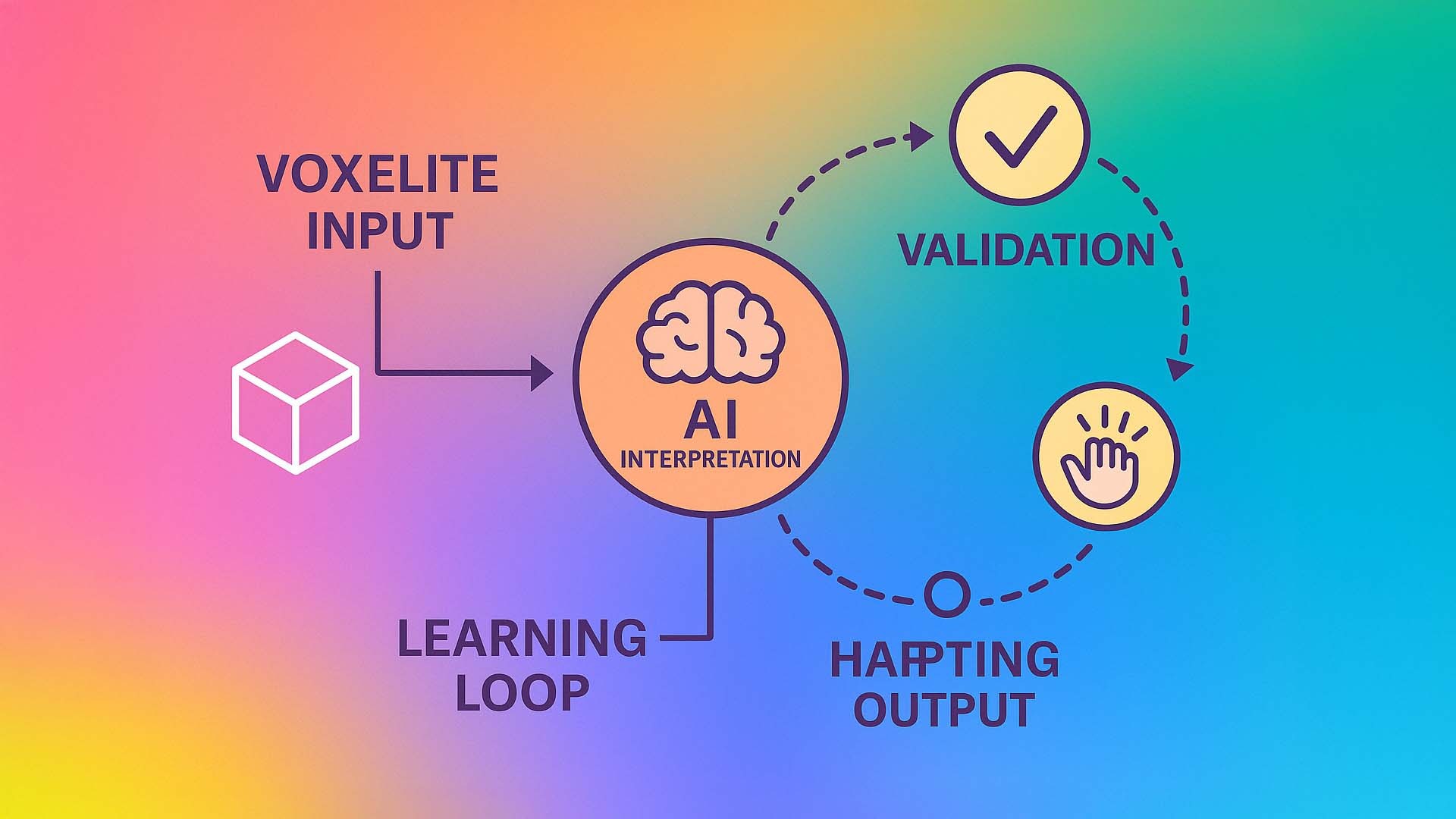

For VoxeLite to not only generate tactile textures but also intelligently adapt to users, it requires a system that not only produces haptic signals but learns from them. Only through the combination of haptic input, AI interpretation, dynamic feedback and continuous validation does a true learning cycle emerge. This principle makes tactile interfaces not only more accurate but increasingly intuitive – similar to systems that “understand” the user over time and refine their responses.

This approach is central to the next generation of tactile technologies. Interfaces that adjust their patterns and intensities based on real interaction open an entirely new spectrum of applications, from personalized UX experiences to intelligent assistance systems that respond situationally and contextually.

Haptic AI learning cycle: VoxeLite input is processed by a neural interpretation model, translated into tactile feedback and then validated by user responses. The illustration shows a closed system of input, AI processing, haptic output and continuous improvement.

Image: © Ulrich Buckenlei | VISORIC GmbH 2025

The image visualizes the four central layers that form a learning-capable haptic interface. On the left, the process begins with VoxeLite Input – the digital object or pattern to be translated into a tactile structure. A simple 3D cube symbolizes any type of digital information.

At the center is the AI Interpretation circle represented by a brain icon. Here, the system decides how the texture must be computed based on sensor data, material profiles and interaction histories: Should it be rougher, softer, more structured or dynamically responsive to finger motion? This step forms the core intelligence of the haptic system.

On the right is the Haptic Output area, represented by a stylized hand icon. Here, the computed information becomes real tactile microstructures. They function as the physical expression of digital content and vary according to context – from fine friction patterns to precise directional cues.

The upper cycle point, Validation, represented by a checkmark, completes the learning loop: users provide implicit feedback through their interactions. The system identifies which patterns work well and where adjustments are needed. This information flows back into the Learning Loop at the bottom.

This creates an adaptive system in which every touch can improve the interface. VoxeLite thus becomes not a static technology but a learning ecosystem that adapts dynamically to a wide range of applications – from gaming to industry to accessibility.
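The validation and learning steps can be illustrated with a minimal pattern-selection loop. Each time a pattern is used, an implicit validation signal (for example, whether the user completed the intended action) updates that pattern's smoothed success rate, and the loop then prefers the best-scoring pattern. This greedy selection rule is chosen for illustration and is not the neural interpretation model shown in the graphic; the class name, pattern names and the success signal are assumptions.

```python
from typing import Dict, List

class HapticLearningLoop:
    """Greedy selection of haptic patterns based on validated outcomes."""

    def __init__(self, patterns: List[str], prior: float = 0.5):
        self.trials: Dict[str, int] = {p: 0 for p in patterns}
        self.successes: Dict[str, int] = {p: 0 for p in patterns}
        self.prior = prior  # score of a pattern that has not been tried yet

    def score(self, pattern: str) -> float:
        """Smoothed success rate: (successes + prior) / (trials + 1)."""
        return (self.successes[pattern] + self.prior) / (self.trials[pattern] + 1)

    def choose(self) -> str:
        """Pick the best-scoring pattern; ties go to the first one listed."""
        return max(self.trials, key=self.score)

    def validate(self, pattern: str, success: bool) -> None:
        """Feed an implicit user response back into the learning loop."""
        self.trials[pattern] += 1
        self.successes[pattern] += int(success)

loop = HapticLearningLoop(["fine_grain", "coarse_ridges"])
print(loop.choose())                    # fine_grain (tie, first listed)
loop.validate("fine_grain", False)
loop.validate("coarse_ridges", True)
print(loop.choose())                    # coarse_ridges
```

A purely greedy rule can lock onto an early winner; a practical system would typically add some exploration, such as occasionally trying a lower-scoring pattern, which is omitted here for brevity.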

When research becomes visible: VoxeLite in motion

The following video visualizes how ultrathin haptic interfaces generate tangible digital structures based on recent research results from Northwestern University. It shows the functional logic behind VoxeLite: a flexible microactuator field that wraps around the fingertip like a second skin and modulates touch in real time. The scenes demonstrate how microscopic friction patterns emerge, how directional feedback is generated and how dynamic textures are adapted to users by AI.

Although VoxeLite itself is a research prototype, the video provides a glimpse into how digital haptics feels when science and interface design converge. The visualization is based on publicly available scientific findings but has been visually reinterpreted and staged specifically for this article.

Demonstration of the VoxeLite principle: The video visualization shows how microscopic actuators form tactile digital textures – from directional impulses to dynamic friction patterns and reconfigurable surfaces.

Video: © Ulrich Buckenlei | VISORIC GmbH 2025 – inspired by research from Northwestern University

The video shows several key elements: first, the ultrathin haptic layer that wraps around the fingertip and forms the basis for all tactile effects. This is followed by microscopic friction patterns that change depending on movement, pressure and interaction context. The visualization also shows how these patterns could be analyzed and adapted by AI – a step that could play a crucial role in creating precise and dynamic tactile digital surfaces.

This cinematic representation serves not only as a technical explanation but also highlights the potential of a technology that could one day bridge visual and tactile digital worlds. It shows how the combination of ultrathin actuator technology, physical friction modulation and intelligent signal processing forms the foundation of a new class of haptic interfaces.

The VISORIC expert team in Munich

Digital haptics makes tangible what could previously only be seen. VoxeLite shows how ultrathin technologies can make real textures perceptible, and the VISORIC expert team helps companies adopt this innovation early. Our work combines research, software development, interface design and prototyping into solutions that are scientifically grounded and practically usable.

Today, many organizations face the question of how to meaningfully integrate new technologies such as digital haptics, spatial computing or AI-supported interaction into their products and processes.

The VISORIC expert team: Ulrich Buckenlei and Nataliya Daniltseva discussing digital haptics, AI-supported interfaces and future interaction models.

Source: VISORIC GmbH | Munich 2025

This is exactly where VISORIC provides guidance with experience, strategic clarity and an interdisciplinary team that makes complex innovation projects understandable, plannable and executable.

- Strategic technology consulting → Analysis of opportunities, potentials and application fields around digital haptics, spatial computing and AI.

- Concept development and prototyping → Creation of proof-of-concepts, UI logic, demonstrators and experimental interfaces that make technical feasibility visible.

- Software development → Implementation of real-time interaction systems, AI pipelines, sensor fusion and customized visualization tools.

- Advanced interface development → Design of 3D interfaces, haptic feedback systems, digital tools and immersive control concepts.

- End-to-end project support → From research analysis to design to production-ready implementation in industry, training, service or simulation.

If you are considering developing new forms of digital interaction, integrating tactile interfaces into products or strategically leveraging the possibilities of AI and spatial computing for your organization, we would be pleased to support you. Even an initial conversation can help reveal opportunities and open the path to clear next steps.

Contact Persons:

Ulrich Buckenlei (Creative Director)

Mobile: +49 152 53532871

Email: ulrich.buckenlei@visoric.com

Nataliya Daniltseva (Project Manager)

Mobile: +49 176 72805705

Email: nataliya.daniltseva@visoric.com

Address:

VISORIC GmbH

Bayerstraße 13

D-80335 Munich