Image: Virtual control interface in a living space

Image: © Ulrich Buckenlei | Visoric GmbH 2025

Invisible interactions become tangible

The next generations of smart glasses and AR contact lenses promise to fundamentally change how we work with digital functions. Instead of operating devices, we interact with the room itself. Digital content appears exactly where we need it and adapts to our movements. This new logic of interaction makes the boundary between the physical world and its digital extension almost invisible: the hand becomes the tool, and three-dimensional space becomes the interface.

- Space as an interface: Digital functions appear directly in the user’s environment.

- Natural gestures: Movements replace buttons, swiping and controllers.

- Real-time feedback: Virtual elements react instantly to subtle micro-movements.

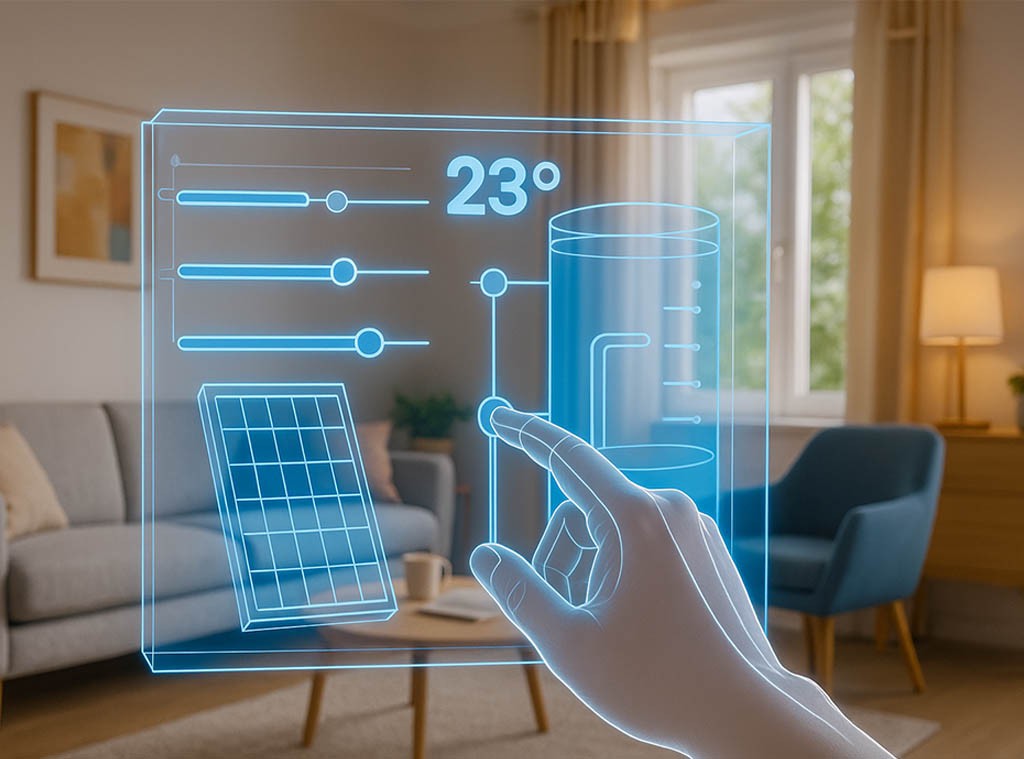

Image: AR interface for home technology through smart glasses

Image: © Ulrich Buckenlei | Visoric GmbH 2025

This approach enables an entirely new form of efficiency. Instead of opening apps, users see a virtual panel appear exactly where the function is needed: for example, a heating control interface directly in the living room, even though the physical system is in the basement. The user moves a hand through the air, and a finely animated interface responds to its position. Settings change through subtle gestures without touching any physical device.

Such concepts show how seamlessly digital information could be woven into our everyday lives. At the same time, an intuitive way of working emerges that no longer distinguishes between “digital” and “real”. The environment becomes an intelligent companion that reveals functions whenever they become relevant. In the next section, we look at the basic interaction types that make such spatial interfaces possible.

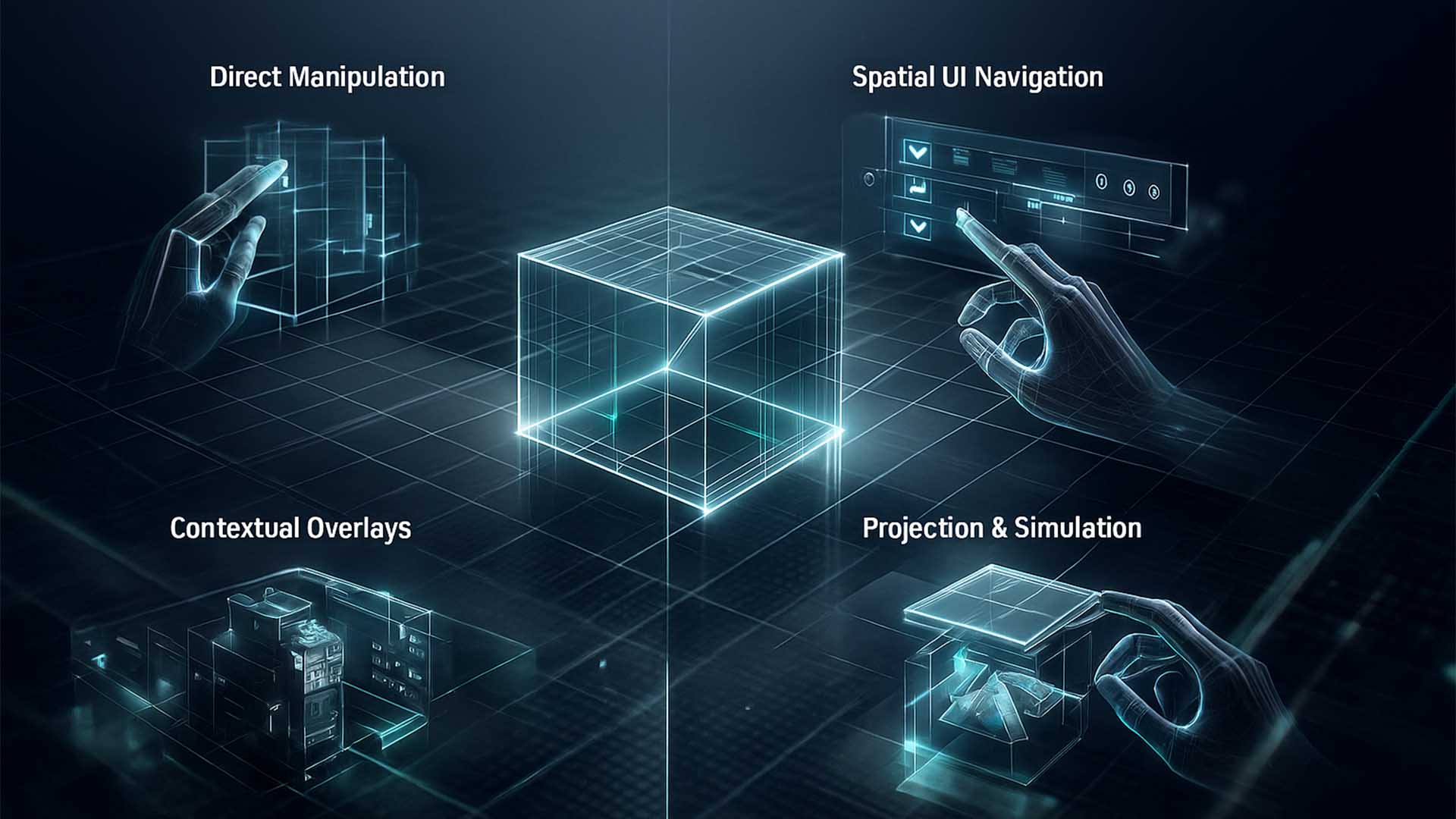

Invisible interactions become visible

Controlling digital content in space always begins with a clear question: how does a system know what we want to do before we touch anything? Modern AR interfaces rely on four foundational types of interaction that together form an intuitive and natural user logic. This logic only becomes visible when the invisible layers of the virtual and real worlds are aligned and we analyze precisely how hands, gaze direction and movement interconnect.

The diagram presents these principles in a reduced yet precise overview. It shows four interaction paths, each representing a fundamental building block of future AR experiences. Clear lines, glowing contours and a metallic aesthetic underline the technological character. The graphic makes abstract principles graspable at a glance and provides a foundation for further design and development decisions.

- Hand tracking → Movements of fingers and palms are analyzed within milliseconds

- Gesture commands → Defined gestures trigger system actions

- Spatial mapping → The room is mapped and digitally interpreted in real time

- Object anchoring → Virtual objects remain stably anchored in space

Fundamental logics of spatial interaction: The graphic illustrates four typical interaction paths that AR systems use to capture human intent.

Image: © Ulrich Buckenlei | Visoric GmbH 2025

The illustration reveals how these interaction types work together. Hand tracking and gesture control form the physical input layer, while spatial mapping and object anchoring provide spatial stability. Together, these four mechanisms create an interaction space in which digital elements can be moved as naturally as physical objects. In practice, they form the basis of a new way of working that extends beyond traditional screens and integrates directly into our environment.
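To make the gesture-command path more concrete, here is a minimal Python sketch of one common gesture, a pinch, detected from two fingertip positions. It assumes a hand tracker that already delivers thumb and index fingertip coordinates in metres every frame; the class name `PinchDetector` and the 2 cm / 4 cm thresholds are hypothetical illustration values, not parameters of any particular AR platform.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical thresholds in metres; real systems tune these per device and user.
PINCH_START_M = 0.02  # fingertips closer than 2 cm: pinch begins
PINCH_END_M = 0.04    # fingertips farther apart than 4 cm: pinch ends


@dataclass
class PinchDetector:
    """Turns per-frame fingertip positions into discrete pinch events."""

    pinching: bool = False

    def update(self, thumb_tip: Vec3, index_tip: Vec3) -> Optional[str]:
        """Return 'pinch_start', 'pinch_end' or None for one tracking frame."""
        gap = math.dist(thumb_tip, index_tip)
        if not self.pinching and gap < PINCH_START_M:
            self.pinching = True
            return "pinch_start"
        if self.pinching and gap > PINCH_END_M:
            self.pinching = False
            return "pinch_end"
        return None


# Usage: fingertips open, close, half-open (still pinching), open again.
detector = PinchDetector()
frames = [
    ((0.0, 0.0, 0.0), (0.05, 0.0, 0.0)),
    ((0.0, 0.0, 0.0), (0.015, 0.0, 0.0)),
    ((0.0, 0.0, 0.0), (0.03, 0.0, 0.0)),
    ((0.0, 0.0, 0.0), (0.05, 0.0, 0.0)),
]
events = [detector.update(thumb, index) for thumb, index in frames]
print(events)  # [None, 'pinch_start', None, 'pinch_end']
```

Using two thresholds (hysteresis) rather than one keeps small tracking jitter around a single boundary from toggling the gesture on and off every frame.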

Dimensional navigation in space

Navigation in future augmented-reality environments will no longer rely on flat menus or two-dimensional surfaces. Instead, interaction moves within volumetric structures that feel like tangible information spaces. Users no longer open content through clicks, but through targeted gestures, spatial movement and intuitive proximity to digital objects. Interaction becomes more physical, more natural and faster because spatial position itself becomes a control parameter.

In everyday use, this leads to entirely new workflows. Content automatically arranges itself around the real environment, floats at different depth layers and reacts to gaze direction and body position. Working this way feels less like using a computer and more like an extension of perception. As digital and physical layers merge, a kind of mental workspace emerges that simplifies decisions and accelerates information processing.

- Spatial depth as an interface → Information appears at different distances

- Movement as control → Position, proximity and gaze influence content

- Adaptive information architecture → Space organizes itself dynamically based on the task

Multidimensional navigation: Digital HUD layers arranged in spatial depth, connected by color-coded lines indicating focus, feedback and selection.

Image: © Ulrich Buckenlei | Visoric GmbH 2025

The visualization shows how digital information can be structured across multiple depth layers. A clear focus layer in the foreground responds to precise gestures and enables direct interaction. Behind it, semi-transparent information zones present supplementary data without overloading the visual field. Colored lines highlight logical connections between layers and show how decisions or gestures shift content dynamically. The scene illustrates what spatial AR interaction feels like: vivid, intuitive and closely synchronized with the real-world environment.
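One simple way to decide which content belongs in the focus layer is to compare each panel's direction with the user's gaze. The following Python sketch assumes a y-up coordinate system, a known head position and gaze direction, and hypothetical cone angles of 10° and 35°; the function names and layer labels are illustrative and not taken from the visualization or any specific SDK.

```python
import math
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical cone half-angles around the gaze direction, in degrees.
FOCUS_ANGLE_DEG = 10.0
CONTEXT_ANGLE_DEG = 35.0


def angle_to_gaze(head: Vec3, gaze_dir: Vec3, target: Vec3) -> float:
    """Angle in degrees between the gaze direction and the direction to target."""
    to_target = tuple(t - h for t, h in zip(target, head))
    dot = sum(g * v for g, v in zip(gaze_dir, to_target))
    norm = math.hypot(*gaze_dir) * math.hypot(*to_target)
    if norm == 0.0:
        return 0.0  # degenerate case: target at the eye, treat as straight ahead
    cos_angle = max(-1.0, min(1.0, dot / norm))  # clamp rounding errors
    return math.degrees(math.acos(cos_angle))


def assign_layers(head: Vec3, gaze_dir: Vec3, panels: Dict[str, Vec3]) -> Dict[str, str]:
    """Map each panel name to 'focus', 'context' or 'background'."""
    layers = {}
    for name, position in panels.items():
        angle = angle_to_gaze(head, gaze_dir, position)
        if angle <= FOCUS_ANGLE_DEG:
            layers[name] = "focus"
        elif angle <= CONTEXT_ANGLE_DEG:
            layers[name] = "context"
        else:
            layers[name] = "background"
    return layers


# Usage: the user looks straight ahead along -z.
panels = {
    "heating": (0.0, 0.0, -2.0),   # directly ahead
    "weather": (1.0, 0.0, -2.0),   # about 27 degrees to the side
    "calendar": (2.0, 0.0, 0.0),   # 90 degrees to the right
}
print(assign_layers((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), panels))
# {'heating': 'focus', 'weather': 'context', 'calendar': 'background'}
```

Panels inside the narrow cone become the interactive focus layer, those in the wider cone become semi-transparent context, and everything else recedes into the background.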

Adaptive interfaces for different environments

The next generation of virtual workspaces not only recognizes hands, gestures and intentions but also understands the context of the surrounding environment. Whether office, home office or industrial hall, each space has unique acoustics, lighting conditions and movement patterns. Adaptive interfaces incorporate these signals in real time and place controls where they are least disruptive and easiest to reach.

At the same time, these interface layers adjust dynamically to the task. As people move, switch modes or encounter newly relevant information, the position, size and level of detail of virtual elements adapt accordingly. The result is a way of working in which digital content no longer feels like an add-on but like an intelligent extension of the physical environment.

- Context awareness → Interfaces react to space, light and movement

- Dynamic placement → Controls move to where they are intuitively accessible

- Situational detail depth → Information density adapts to focus and task
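Dynamic placement can be illustrated with a small heuristic: put a panel within comfortable reach, slightly below eye level and toward the active hand, then nudge it upward if it would overlap a real object. This Python sketch assumes a y-up coordinate system in metres, real objects approximated as bounding spheres, and hypothetical distances (`REACH_M`, `DROP_M`, `SIDE_M`, `PANEL_RADIUS_M`); a production system would also weigh light, task and user preferences, as described above.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Sphere = Tuple[Vec3, float]  # (centre, radius) of a real object

# Hypothetical ergonomic defaults in metres.
REACH_M = 0.45         # distance in front of the head
DROP_M = 0.15          # below eye level
SIDE_M = 0.20          # toward the active hand
PANEL_RADIUS_M = 0.12  # bounding radius of the panel
STEP_M = 0.05          # upward nudge per attempt
MAX_STEPS = 10


def place_panel(head: Vec3, forward: Vec3, right_handed: bool,
                obstacles: List[Sphere]) -> Vec3:
    """Return a panel position near the active hand that avoids real objects."""
    # Use only the horizontal part of the view direction (y is up) so the
    # panel does not chase every small head tilt.
    fx, fz = forward[0], forward[2]
    length = math.hypot(fx, fz) or 1.0
    fx, fz = fx / length, fz / length
    # Horizontal "right" vector for a y-up, right-handed coordinate system.
    rx, rz = -fz, fx
    side = SIDE_M if right_handed else -SIDE_M
    x = head[0] + fx * REACH_M + rx * side
    y = head[1] - DROP_M
    z = head[2] + fz * REACH_M + rz * side
    # Nudge upward until the panel clears every obstacle; after MAX_STEPS
    # nudges the final position is returned without a further check.
    for _ in range(MAX_STEPS):
        if all(math.dist((x, y, z), c) >= r + PANEL_RADIUS_M for c, r in obstacles):
            break
        y += STEP_M
    return (x, y, z)


# Usage: a right-handed user looking along -z, once in free space and once
# with a small real object exactly where the panel would appear.
head = (0.0, 1.6, 0.0)
print(place_panel(head, (0.0, 0.0, -1.0), True, []))
print(place_panel(head, (0.0, 0.0, -1.0), True, [((0.2, 1.45, -0.45), 0.05)]))
```

In the second call the panel is lifted in four steps until it clears the object, keeping the control reachable without covering the real item.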

Adaptive virtual controls in three-dimensional space

Image: © Ulrich Buckenlei | Visoric GmbH 2025

The visualization shows a floating interface adapting to both the room and the user’s hand. Semi-transparent buttons and sliders align cleanly within the depth of the environment without obscuring real objects. The contours of the hand are highlighted as glowing lines, making visible how real movement and virtual layers interlock precisely. This illustrates how adaptive interfaces make the transition between physical space and digital control logic nearly invisible.

The following section looks at how AI makes such context-sensitive interactions reliable, even when real hand movements are imprecise or incomplete.

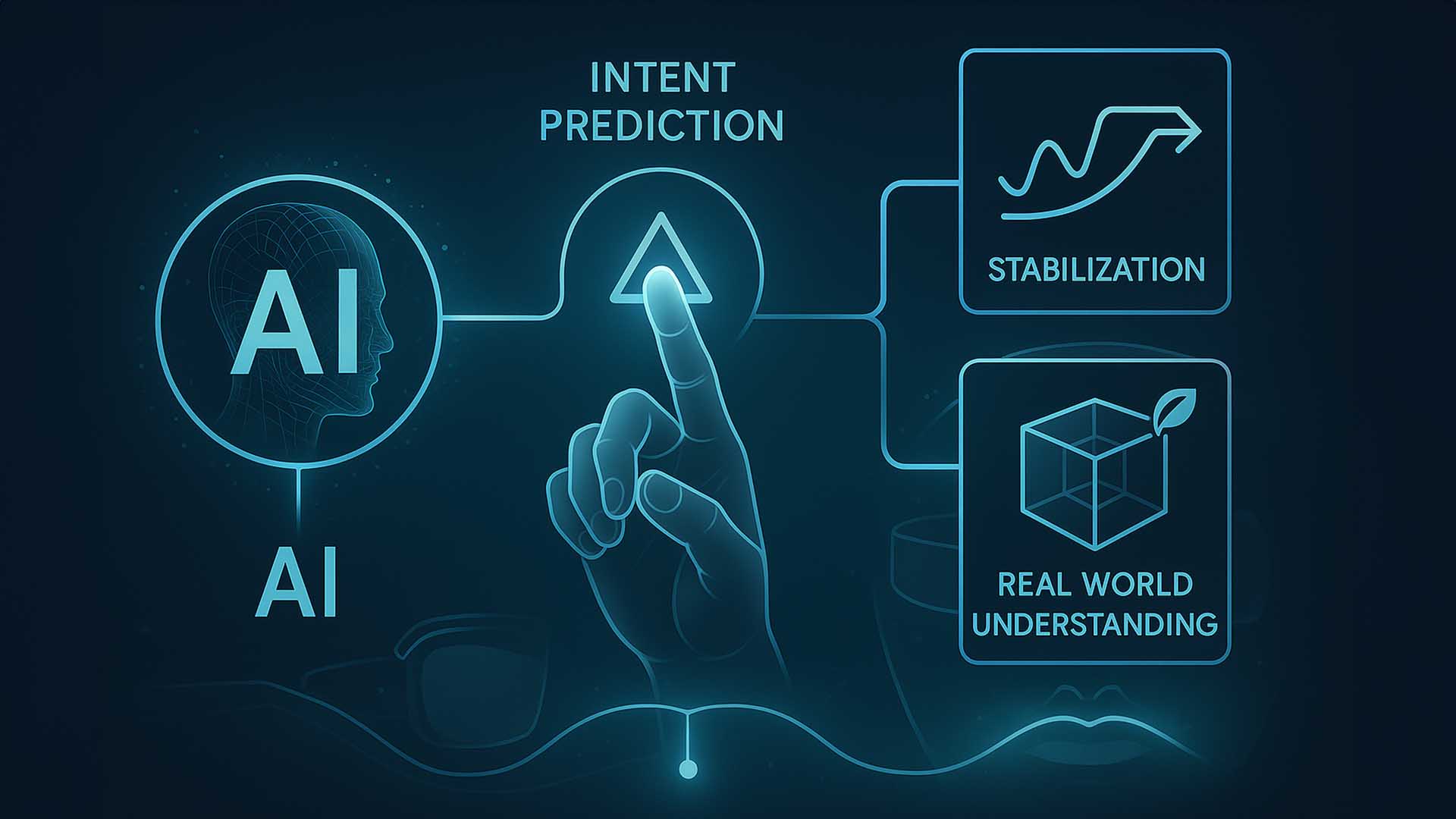

How AI enables invisible interaction

Invisible touch in virtual space does not rely on good sensors alone. True intelligence emerges when AI translates incomplete, fast or complex hand movements into precise control signals. Modern systems detect patterns in micro-gestures, fill gaps in missing data and anticipate user intentions. Interaction therefore feels less like a technical process and more like a natural flow of movement. The following visualization illustrates how multiple AI layers work together to turn real hand movements into a precisely calculated, spatially stable and context-aware interaction.

- Hand movement recognition → AI interprets gestures and intentions in real time

- Spatial analysis → Systems continuously calculate depth, objects and interaction zones

- Adaptive control → Virtual elements dynamically adjust to context and action
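The gap-filling step can be illustrated with a deliberately simple, non-learned baseline. Where the article describes AI models, this Python sketch substitutes constant-velocity extrapolation: when the tracker drops a frame (represented as `None`), the missing fingertip position is predicted from the last two known positions. The function name `fill_gaps` and the `max_gap` limit are hypothetical choices for illustration.

```python
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def fill_gaps(samples: List[Optional[Vec3]], max_gap: int = 3) -> List[Optional[Vec3]]:
    """Fill dropped tracking frames (None) by constant-velocity extrapolation.

    Gaps longer than max_gap frames stay None: predicting further ahead is
    more likely to produce a wrong position than a useful one.
    """
    out: List[Optional[Vec3]] = []
    gap = 0
    for sample in samples:
        if sample is not None:
            out.append(sample)
            gap = 0
            continue
        gap += 1
        have_history = len(out) >= 2 and out[-1] is not None and out[-2] is not None
        if gap <= max_gap and have_history:
            last, before = out[-1], out[-2]
            # Next position = last position + last per-frame displacement.
            out.append(tuple(a + (a - b) for a, b in zip(last, before)))
        else:
            out.append(None)
    return out


# Usage: a fingertip moving 10 mm per frame along x (positions in millimetres),
# with two dropped frames in the middle.
track = [(0, 0, 0), (10, 0, 0), None, None, (40, 0, 0)]
print(fill_gaps(track))
# [(0, 0, 0), (10, 0, 0), (20, 0, 0), (30, 0, 0), (40, 0, 0)]
```

Learned models can draw on much richer context (hand pose, gesture history, scene geometry), but the goal is similar: estimate plausible positions for short dropouts instead of letting the interface freeze or jump.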

Visualization of AI layers: Sensor input, gesture interpretation, spatial mapping and adaptive output work together to make virtual interaction precise and natural.

Image: © Ulrich Buckenlei | Visoric GmbH 2025

The illustration shows the four core layers that enable modern invisible touch interactions. On the left, the process begins with capturing real hand movement. In the second layer, AI interprets this data, recognizing intentions, patterns and micro-gestures. The third layer connects the gesture with its spatial context and calculates where virtual objects are located and how they should respond. The fourth layer generates the appropriate output. As a result, the virtual space feels stable, logical and intuitive even when the real movement is imprecise, too fast or incomplete. AI becomes the invisible engine that makes natural interaction in digital 3D space possible.
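The four layers can be read as a pipeline of small stages. The Python sketch below wires them together under strong simplifying assumptions: layer 1 is a hypothetical `HandFrame` with two fingertip positions, layer 2 uses a fixed pinch-distance rule where a real system would use trained models, layer 3 picks the nearest scene object within reach, and layer 4 returns a text description instead of rendering anything. All names and thresholds are illustrative.

```python
import math
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class HandFrame:
    """Layer 1 (sensor input): fingertip positions from a hypothetical tracker."""
    thumb_tip: Vec3
    index_tip: Vec3


@dataclass
class Intent:
    """Output of layer 2: what the user appears to want, and where."""
    kind: str
    point: Vec3


def interpret(frame: HandFrame, pinch_m: float = 0.02) -> Optional[Intent]:
    """Layer 2 (gesture interpretation): a fixed rule standing in for a model."""
    if math.dist(frame.thumb_tip, frame.index_tip) < pinch_m:
        mid = tuple((a + b) / 2 for a, b in zip(frame.thumb_tip, frame.index_tip))
        return Intent("select", mid)
    return None


def resolve_target(intent: Intent, scene: Dict[str, Vec3], reach_m: float = 0.1) -> Optional[str]:
    """Layer 3 (spatial mapping): nearest scene object within reach of the gesture."""
    best, best_dist = None, reach_m
    for name, position in scene.items():
        d = math.dist(intent.point, position)
        if d <= best_dist:
            best, best_dist = name, d
    return best


def render(target: Optional[str]) -> str:
    """Layer 4 (adaptive output): here only a description of the feedback."""
    return f"highlight {target}" if target else "idle"


def process(frame: HandFrame, scene: Dict[str, Vec3]) -> str:
    intent = interpret(frame)
    target = resolve_target(intent, scene) if intent is not None else None
    return render(target)


scene = {"thermostat": (0.0, 1.2, -0.5), "lamp": (1.0, 1.5, -2.0)}
print(process(HandFrame((0.005, 1.2, -0.5), (-0.005, 1.2, -0.5)), scene))  # highlight thermostat
print(process(HandFrame((0.05, 1.2, -0.5), (-0.05, 1.2, -0.5)), scene))    # idle
```

Keeping the stages separate means any one of them, for example the rule-based interpreter, could be replaced by a learned model without touching the others.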

Invisible interaction in everyday life: How virtual space blends seamlessly with reality

The sixth section takes a broader perspective. After examining gesture control, interaction models, spatial precision and AI processing in detail, this chapter shows how all these technologies merge into a unified experiential space. The key insight is that invisible interaction is no longer a future vision. It emerges wherever digital overlays, intuitive gestures and AI assistants converge to enhance everyday life.

The visual used here illustrates how users will navigate virtual elements through purely intuitive micro-gestures. The real room remains visible while digital layers seamlessly overlay it. The hand acts as a natural interface and is accompanied by a semi-transparent overlay conveying precision and context. The depicted interaction shows how closely digital and physical information can merge.

- Seamless fusion → Virtual information lies directly over the real environment

- Intuitive control → Micro-gestures serve as natural input

- Everyday usability → Information, parameters and systems become immediately accessible

Invisible interaction: A real hand controls a digital 3D interface floating in space.

Image: © Ulrich Buckenlei | Visoric GmbH 2025

The image shows a hand interacting with a floating holographic 3D cube containing an AI core. The semi-transparent lines of the structure convey both depth and precision. The background remains a real living room, darkened to draw focus to the digital overlay. The scene demonstrates how an intuitive finger movement is enough to control parameterized objects, settings or entire systems. It becomes clear how invisible touch will function in everyday spaces and how natural this interaction can feel.

Video – Interaction in three-dimensional space in motion

The following video excerpt shows an early yet impressive functional model of spatial interaction. The recording demonstrates how digital structures can be grabbed, rotated, scaled and reorganized in real time using only natural hand movements. Every gesture takes immediate effect in the virtual space and makes the process intuitive and precise.

What stands out in this demonstration is how tangible the digital elements feel: they do not merely float in front of the user but respond to the same motor reflexes familiar from the physical world. The result is an interface that is perceived not as a tool but as a natural extension of one’s hands.

Spatial Creation Prototype – Fully gesture-driven manipulation of digital structures

Video: Prototype by Oleg Frolov · Commentary by Ulrich Buckenlei · Fair use for analytical purposes

The video clearly shows how the digital structure reacts in real time to every rotation, shift or scale adjustment. Individual modules are deformed, moved or recomposed without any visible controls. Everything happens directly in three-dimensional space, where complex models, interfaces or industrial processes may be created in the future.
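Grabbing an object with both hands and then rotating, shifting or scaling it is commonly computed from how the two grab points move relative to each other. This Python sketch shows that bimanual technique in its simplest form; it is not taken from the prototype in the video. It assumes y-up coordinates, rotation only about the vertical axis, hands that start apart, and a hypothetical function name `two_hand_transform`.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def two_hand_transform(l0: Vec3, r0: Vec3, l1: Vec3, r1: Vec3) -> Tuple[Vec3, float, float]:
    """Return (translation, scale, yaw_deg) between the grab start and now.

    l0, r0: left and right grab points when the grab began (must differ).
    l1, r1: the same grab points in the current frame.
    """
    # Translation: movement of the midpoint between both hands.
    mid0 = tuple((a + b) / 2 for a, b in zip(l0, r0))
    mid1 = tuple((a + b) / 2 for a, b in zip(l1, r1))
    translation = tuple(b - a for a, b in zip(mid0, mid1))
    # Scale: ratio of the current to the initial distance between the hands.
    scale = math.dist(l1, r1) / math.dist(l0, r0)
    # Yaw: change in heading of the hand-to-hand vector in the horizontal
    # x-z plane (y is up), measured from +x toward +z.
    yaw0 = math.atan2(r0[2] - l0[2], r0[0] - l0[0])
    yaw1 = math.atan2(r1[2] - l1[2], r1[0] - l1[0])
    yaw_deg = (math.degrees(yaw1 - yaw0) + 180.0) % 360.0 - 180.0  # wrap to [-180, 180)
    return translation, scale, yaw_deg


# Usage: the hands move apart to twice their initial distance.
start_l, start_r = (-0.1, 1.2, -0.5), (0.1, 1.2, -0.5)
move, scale, yaw = two_hand_transform(start_l, start_r, (-0.2, 1.2, -0.5), (0.2, 1.2, -0.5))
print(move, scale, yaw)
```

Applying the returned translation, scale and yaw to the grabbed object every frame lets it follow both hands directly, without any visible controls.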

This demonstration illustrates how creativity will change once interaction becomes fully spatial. Creation will no longer take place on a screen but within the room itself. And the combination of precise gestures and adaptive AI forms the foundation of a new generation of digital tools.

The Visoric Expert Team in Munich

The concepts presented here are developed in an environment where research, creativity and technological precision consistently converge. Our team in Munich has been working for many years to advance the possibilities of spatial interaction and make them usable for companies. We investigate how people will interact with digital worlds in the future and how intelligent systems can support these interactions seamlessly.

Our expertise includes AI-based interaction models, spatial computing workflows, volumetric user interfaces and immersive design processes. In close collaboration with research partners and leading global companies, we create solutions that combine technical depth with practical applicability. The goal is always to prepare complex future technologies so that they create real value today.

- Strategy and concept → Development of future-proof spatial interaction models

- Design and content → Precise 3D visualizations and interactive spatial workflows

- Technical implementation → AI-supported interactions, motion intelligence and XR systems

The Visoric Expert Team: Ulrich Buckenlei and Nataliya Daniltseva

Source: Visoric GmbH | Munich 2025

If you are considering developing your own concepts for spatial interaction, AI-supported workflows or immersive product development, we are happy to support you. Often a first conversation is enough to reveal new potential and define concrete next steps. Whether for prototypes, innovation projects or long-term digital strategies, our team is ready.

Contact Persons:

Ulrich Buckenlei (Creative Director)

Mobile: +49 152 53532871

Email: ulrich.buckenlei@visoric.com

Nataliya Daniltseva (Project Manager)

Mobile: +49 176 72805705

Email: nataliya.daniltseva@visoric.com

Address:

VISORIC GmbH

Bayerstraße 13

D-80335 Munich