AI in the Cockpit – from static to adaptive

Visualization: Ulrich Buckenlei I Visoric GmbH

The cockpit of the future anticipates what the driver needs. Artificial intelligence changes not only how displays look but also how they react to the driver and the situation. Rigid control concepts built on buttons, rotary knobs, and fixed menu structures are evolving into adaptive, learning interfaces that respond to voice, gestures, eye movements, and context.

AI as a Game Changer in Cockpit Design

For decades, user interfaces in cars followed fixed design patterns. With AI, control elements can be arranged dynamically: systems adjust their arrangement, priority, and display mode in real time to the context and the driver's needs, turning the interface from a static instrument into an assistant that anticipates.

- Adaptive Layouts → Adapt to driving mode, weather, and surroundings.

- Predictive Interfaces → Suggest functions before they are needed.

- Ergonomics through AI → Take individual preferences and habits into account.

Visualization: Ulrich Buckenlei I Visoric GmbH

With AI, cockpits become context-sensitive – content follows situation and need.

This transformation lays the foundation for a new form of human-machine interaction: the vehicle understands the driver – not only what they touch but also what they need.

Intelligent Interaction: Understanding Context Instead of Searching Menus

AI detects not only the driving state but also the driver's behavior and attention. Eye tracking, sensor data, and driving style analysis determine which content is prioritized. Irrelevant displays recede and safety-relevant signals move to the foreground, so distraction decreases and clarity increases.

- Eye Tracking → Information weighting based on gaze.

- Attention Model → Dynamic prioritization depending on the situation.

- Minimal Distraction → Smart reduction when the driving task is demanding.

Visualization: Ulrich Buckenlei I Visoric GmbH

The system knows when information helps – and when it distracts.
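The prioritization described in this section can be sketched in a few lines. This is only an illustration under stated assumptions: the names `DisplayItem` and `prioritize`, the 0 to 1 scales for priority and driving load, and the scoring weights are hypothetical and are not taken from any production cockpit system.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DisplayItem:
    name: str
    base_priority: float           # hypothetical scale: 0.0 (optional) to 1.0 (essential)
    safety_relevant: bool = False


def prioritize(items, driving_load: float, gazed_item: Optional[str] = None,
               max_visible: int = 3):
    """Return the names of the items to show, most important first.

    driving_load is assumed to range from 0.0 (relaxed) to 1.0 (demanding)
    and to come from a sensor and driving-style model that is out of scope here.
    """
    def score(item: DisplayItem) -> float:
        if item.safety_relevant:
            # Safety signals always outrank everything else, more so under load.
            value = item.base_priority + 1.0 + driving_load
        else:
            # Non-safety content fades as the driving task gets harder.
            value = item.base_priority * (1.0 - driving_load)
        if item.name == gazed_item:
            value += 0.3           # small boost for what the driver is looking at
        return value

    # Under high load, fewer items are shown at all.
    budget = max(1, round(max_visible * (1.0 - 0.5 * driving_load)))
    ranked = sorted(items, key=score, reverse=True)
    return [item.name for item in ranked[:budget]]
```

With a relaxed driving load, the item the driver is looking at moves up the list. With a demanding load, the list shrinks and the safety-relevant item stays on top.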

With this understanding, interfaces can be tested and optimized more precisely without building physical prototypes right away. The next step is virtual testing.

Virtual Test Environments: Simulation Instead of Production Prototype

VR and MR headsets enable realistic cockpit simulations – with changing lighting conditions, weather scenarios, and traffic situations. Teams check readability, interaction paths, and safety before the first component is manufactured. Iterations become faster, risks decrease.

- VR/MR Cockpits → Early, immersive tests in context.

- Scenario Variety → Day/night, city/highway, rain/fog.

- Time-to-Decision → Shorter cycles, more informed decisions.

Visualization: Ulrich Buckenlei I Visoric GmbH

Virtual tests reveal early on what must later work intuitively in the vehicle.
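The scenario variety listed above (day/night, city/highway, rain/fog) amounts to a small test matrix. The sketch below enumerates it; the function name `build_scenarios` and the dictionary keys are hypothetical, and a real simulation setup would likely carry many more parameters.

```python
from itertools import product

# The three scenario dimensions named in the text.
LIGHTING = ("day", "night")
ROAD = ("city", "highway")
WEATHER = ("rain", "fog")


def build_scenarios():
    """Return every combination of lighting, road type, and weather."""
    return [
        {"lighting": lighting, "road": road, "weather": weather}
        for lighting, road, weather in product(LIGHTING, ROAD, WEATHER)
    ]
```

Enumerating the combinations explicitly makes it easy to check that each cockpit variant has been reviewed in every condition before anything is manufactured.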

Virtual testing paves the way for highly realistic visualizations – making the interface’s behavior tangible before production.

Highly Realistic 3D Visualizations: Seeing Behavior Before It Exists

Photorealistic 3D renderings and animations show not only how the interface looks but also how it reacts: motion states, transitions, feedback. This makes design options tangible, discussions fact-based – and accelerates collaboration between UX, engineering, and management.

- Render & Motion → Visualizes states, transitions, micro-interactions.

- Design Alignment → Common language for UX, tech, and business.

- Decision Readiness → Fewer assumptions, more evidence.

Visualization: Ulrich Buckenlei I Visoric GmbH

Visualizations make UX details visible – before hardware exists.

But interfaces only become truly adaptive when real usage feeds back: digital twins link virtual cockpits with real sensor data.

Digital Twins: Learning from Real Use

Digital twins connect the virtual cockpit with real-world data. AI learns which displays have the highest relevance in which situation – and continuously optimizes the logic. Insights flow back into the vehicle via updates: the interface matures in use.

- Virtual Cockpit + Real Data → Relevance instead of overload.

- Continuous Improvement → Updates based on actual usage.

- Safety Gains → Better timing, clearer cues, less distraction.

Visualization: NVIDIA

The digital twin turns the cockpit into a learning system.
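One simple way to picture how a system could learn which displays are relevant in which situation is to count, per driving context, how often a display was actually used when it was shown. The sketch below does exactly that. It is a deliberately basic stand-in, not the method of any specific digital-twin platform, and the names `RelevanceModel`, `record`, and `ranking` are hypothetical.

```python
from collections import defaultdict


class RelevanceModel:
    """Estimates display relevance per context from logged usage."""

    def __init__(self):
        self._shown = defaultdict(int)   # (context, display) -> times shown
        self._used = defaultdict(int)    # (context, display) -> times actually used

    def record(self, context: str, display: str, used: bool) -> None:
        """Log one observation from real-world usage data."""
        key = (context, display)
        self._shown[key] += 1
        if used:
            self._used[key] += 1

    def relevance(self, context: str, display: str) -> float:
        """Smoothed usage rate; pairs never observed start at 0.5."""
        key = (context, display)
        return (self._used.get(key, 0) + 1) / (self._shown.get(key, 0) + 2)

    def ranking(self, context: str, displays):
        """Order displays for a context, most relevant first."""
        return sorted(displays, key=lambda d: self.relevance(context, d), reverse=True)
```

The smoothing term keeps a display that has only been observed once or twice from jumping straight to the top or bottom of the ranking, which matters when updates are rolled out on the basis of limited usage data.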

This cycle makes development faster and more precise, and its results can be experienced directly in moving images.

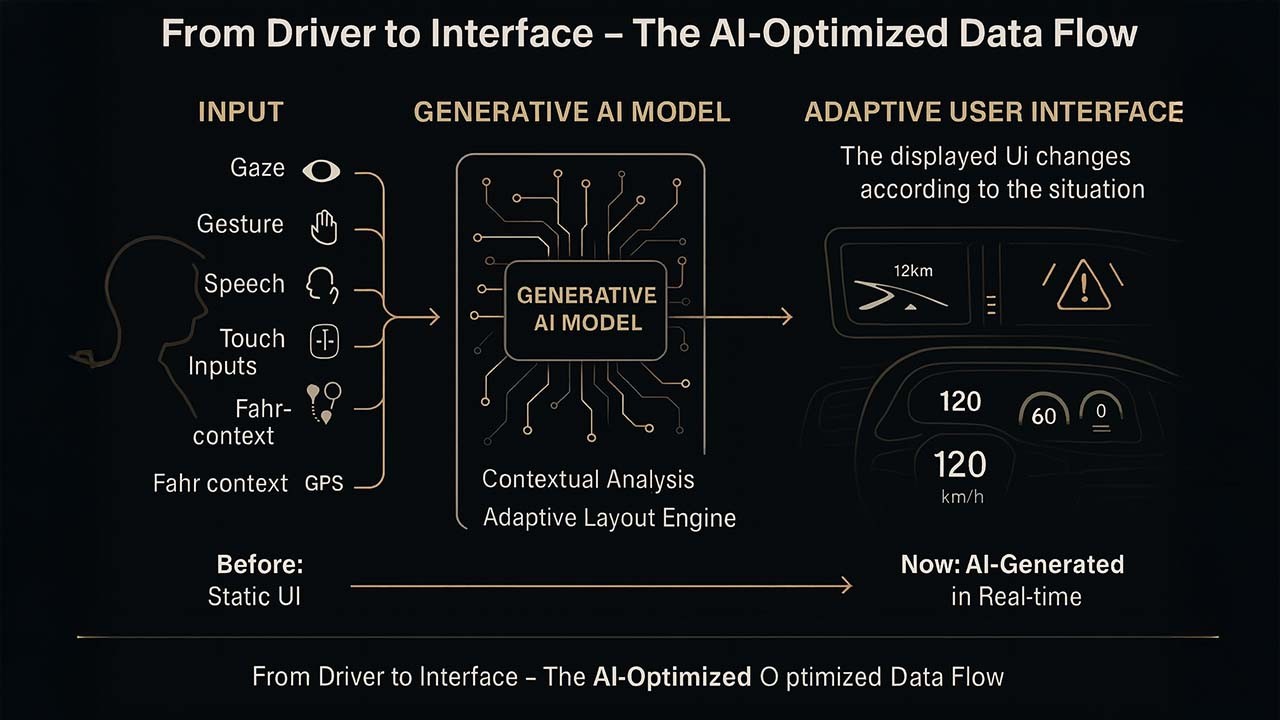

From Driver to Interface – the AI-Optimized Data Flow

The graphic shows how modern vehicles capture driver inputs (gaze direction, gestures, voice, and touch commands) together with driving context data in real time and process them with a generative AI model. The result is an adaptive user interface that adjusts to the situation, enhancing safety, comfort, and usability.

- Multimodal Inputs → Combination of gaze, voice, gesture, and touch control plus driving context.

- Generative AI Model → Context analysis and real-time adaptive layout creation.

- Adaptive UI → Dynamic prioritization and display depending on the situation (e.g., navigation, warnings, efficiency).

Infographic: Ulrich Buckenlei I Visoric GmbH

This way, drivers receive exactly the information that is relevant at that moment – in the right level of detail and format, without additional cognitive load.
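The data flow in the infographic (multimodal inputs plus driving context, a model, an adaptive UI) can be outlined as a small pipeline. In this sketch the generative AI model is replaced by a few hand-written rules so that the example stays runnable. All type names, field names, and the speed threshold are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DriverInput:
    # Each field names the widget the driver addressed through that channel, if any.
    gaze_target: Optional[str] = None
    voice_request: Optional[str] = None
    gesture_target: Optional[str] = None
    touch_target: Optional[str] = None


@dataclass
class DrivingContext:
    speed_kmh: float = 0.0
    active_warnings: List[str] = field(default_factory=list)


@dataclass
class LayoutPlan:
    widgets: List[str]   # ordered, most prominent first
    detail: str          # "full" or "reduced"


def plan_layout(inp: DriverInput, ctx: DrivingContext) -> LayoutPlan:
    """Rule-based stand-in for the model stage of the data flow."""
    widgets = list(ctx.active_warnings)          # warnings always come first
    # Explicit requests outrank implicit ones: voice, touch, gesture, then gaze.
    for requested in (inp.voice_request, inp.touch_target,
                      inp.gesture_target, inp.gaze_target):
        if requested and requested not in widgets:
            widgets.append(requested)
    if "navigation" not in widgets:
        widgets.append("navigation")             # assumed default content
    # Assumed rule: reduce detail at high speed or while a warning is active.
    reduced = ctx.speed_kmh > 100 or bool(ctx.active_warnings)
    return LayoutPlan(widgets=widgets, detail="reduced" if reduced else "full")
```

Keeping inputs, context, and the resulting layout plan as separate types mirrors the three stages in the graphic and would let the rule-based function be swapped for a learned model without changing the surrounding code.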

Video: The Adaptive Cockpit in Action

“AI Design Showdown: Vercel vs Figma Make”

Video source: Original video by Blueshift

A look at the live behavior of an AI-powered cockpit.

The video shows how content is prioritized in real time, how gaze, voice, and touch work together – and how the interface adapts to changing driving conditions.

Contact Our Expert Team

The Visoric Team supports companies in implementing intelligent interfaces, immersive exhibits, and physical-digital real-time systems – from concept development to production-ready integration.

- Technical feasibility studies: tailored, realistic, solution-oriented

- Concept & prototyping: from data source to finished surface

- Integration & scaling: for showrooms, trade fairs, development, or sales

Get in touch now, and let's shape the future of interaction together.

Contacts:

Ulrich Buckenlei (Creative Director)

Mobile: +49 152 53532871

Email: ulrich.buckenlei@visoric.com

Nataliya Daniltseva (Project Manager)

Mobile: +49 176 72805705

Email: nataliya.daniltseva@visoric.com

Address:

VISORIC GmbH

Bayerstraße 13

D-80335 Munich