Spatial interaction in an industrial environment: A hand controls a volumetric 3D interface that reacts to movement in real time.

Image: © Ulrich Buckenlei | VISORIC GmbH 2025

Invisible interaction begins with movement

The moment a space understands what a person intends to do before they touch anything, the logic of work changes fundamentally. Systems that interpret movements in space as machine-readable signals reduce friction, accelerate processes, and create new possibilities for safety, quality, and efficiency. For companies, this means fewer rigid user interfaces and more direct interaction with machines, equipment, and information – precisely where decisions are made.

Before interfaces become visible, before a command is triggered, a process begins that is based on subtle movements: a finger lifting, a hand rotating, a slight shift in distance. These micro-gestures form the foundation of a new interaction model that works without traditional screens, buttons, or handheld devices. Modern AI systems are designed to interpret these movements not only technically but semantically: why the hand moves in this way, what purpose the gesture serves, and what intention lies behind it. A simple movement becomes a structured signal – and the room becomes an intelligent partner.

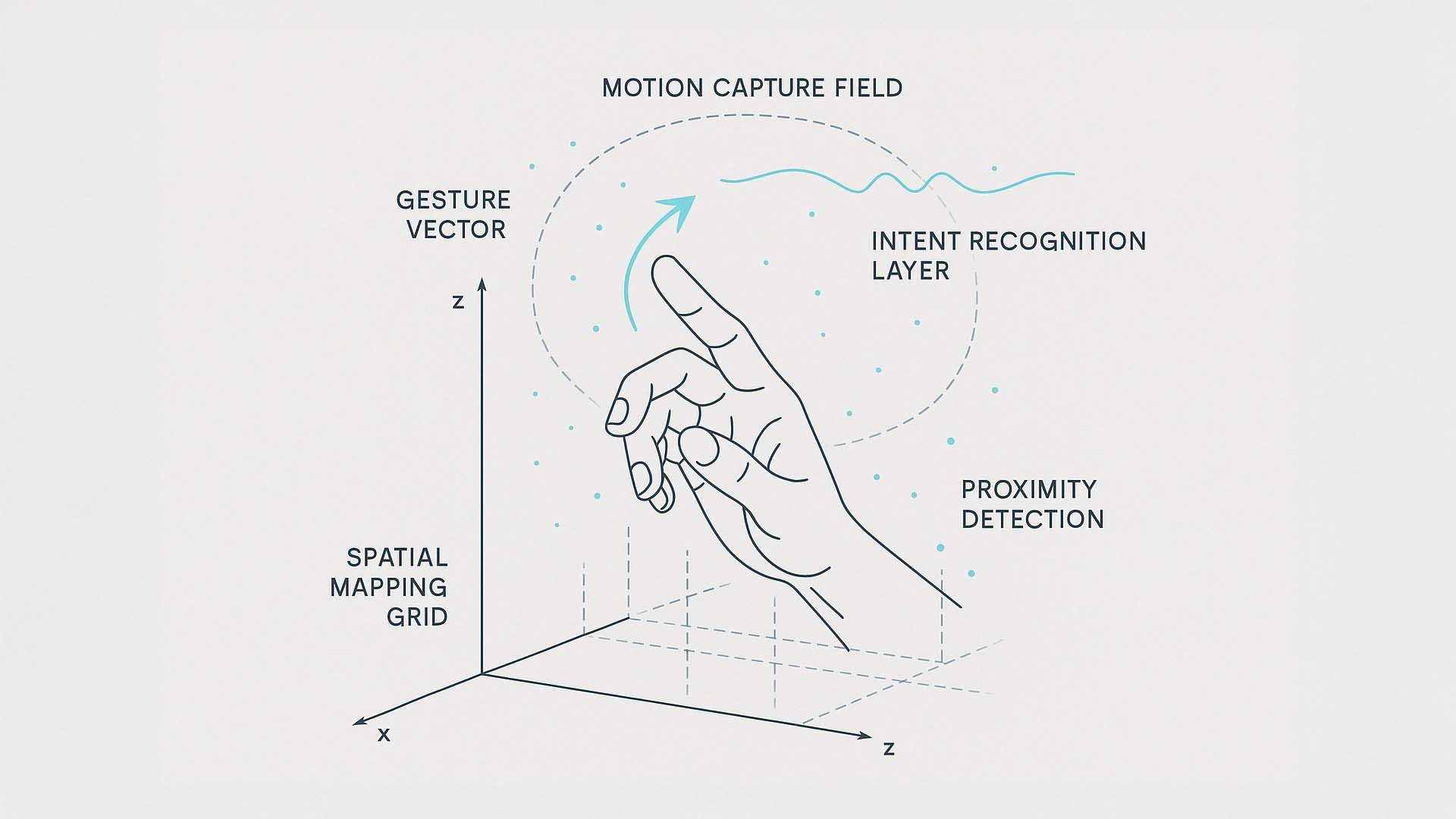

This principle forms the basis of many new technologies in spatial computing and motion intelligence. To illustrate this process, the following graphic shows the key elements from which invisible interaction emerges.

- Movement as language – micro-gestures become semantic signals that systems can directly understand.

- The room as a sensor – the environment detects position, depth, and dynamics of users.

- Real-time interpretation – AI connects movement with intention and triggers appropriate actions.

Technical illustration of invisible interaction: The hand movement is analyzed within a spatial coordinate system. Terms like Gesture Vector, Motion Capture Field, and Intent Recognition Layer show how AI transforms movements into understandable signals.

Image: © Ulrich Buckenlei | VISORIC GmbH 2025

The drawing visualizes how an AI-based system analyzes and interprets human gestures in three-dimensional space. The Gesture Vector indicates the direction and dynamics of the gesture; the Motion Capture Field defines the volume in which movements are captured. In the Intent Recognition Layer, AI translates this data into meaning by recognizing patterns and intentions. Proximity Detection determines how strongly an element reacts as the hand approaches, while the Spatial Mapping Grid handles spatial positioning. Only the interplay of these layers enables the space to understand behavior and react to it in real time.
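The Gesture Vector and Proximity Detection ideas can be made concrete with a little geometry. The following Python sketch is illustrative only and assumes two timestamped hand positions in metres from some tracking system; the names `Sample`, `gesture_vector`, and `proximity_response` and the `near`/`far` thresholds are hypothetical and not taken from any VISORIC or vendor API.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    """One tracked hand position (hypothetical room coordinates in metres)."""
    t: float  # timestamp in seconds
    x: float
    y: float
    z: float


def gesture_vector(a: Sample, b: Sample) -> tuple[tuple[float, float, float], float]:
    """Return the unit direction and the speed (m/s) of the movement from a to b."""
    dt = b.t - a.t
    if dt <= 0:
        raise ValueError("samples must be in chronological order")
    dx, dy, dz = b.x - a.x, b.y - a.y, b.z - a.z
    dist = math.sqrt(dx * dx + dy * dy + dz * dz)
    if dist == 0:
        return (0.0, 0.0, 0.0), 0.0  # no movement, so no direction
    return (dx / dist, dy / dist, dz / dist), dist / dt


def proximity_response(distance: float, near: float = 0.05, far: float = 0.5) -> float:
    """Map hand-to-element distance (m) to a reaction strength in [0, 1].

    Full reaction inside `near`, none beyond `far`, linear falloff in between.
    """
    if distance <= near:
        return 1.0
    if distance >= far:
        return 0.0
    return (far - distance) / (far - near)


direction, speed = gesture_vector(Sample(0.0, 0.0, 0.0, 0.0), Sample(0.1, 0.03, 0.04, 0.0))
print(direction, speed)  # roughly (0.6, 0.8, 0.0) and 0.5 m/s
print(proximity_response(0.275))  # halfway between near and far, roughly 0.5
```

A linear falloff is the simplest choice; a real interface might use a smoother curve so that elements do not react abruptly at the edge of the field.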

In the next section, we take a step closer to practical application and examine how these foundations evolve into concrete interaction models where gesture, depth, spatial flow, and AI interpretation merge into one continuous movement stream.

How movement, depth, and spatial logic merge into one interaction model

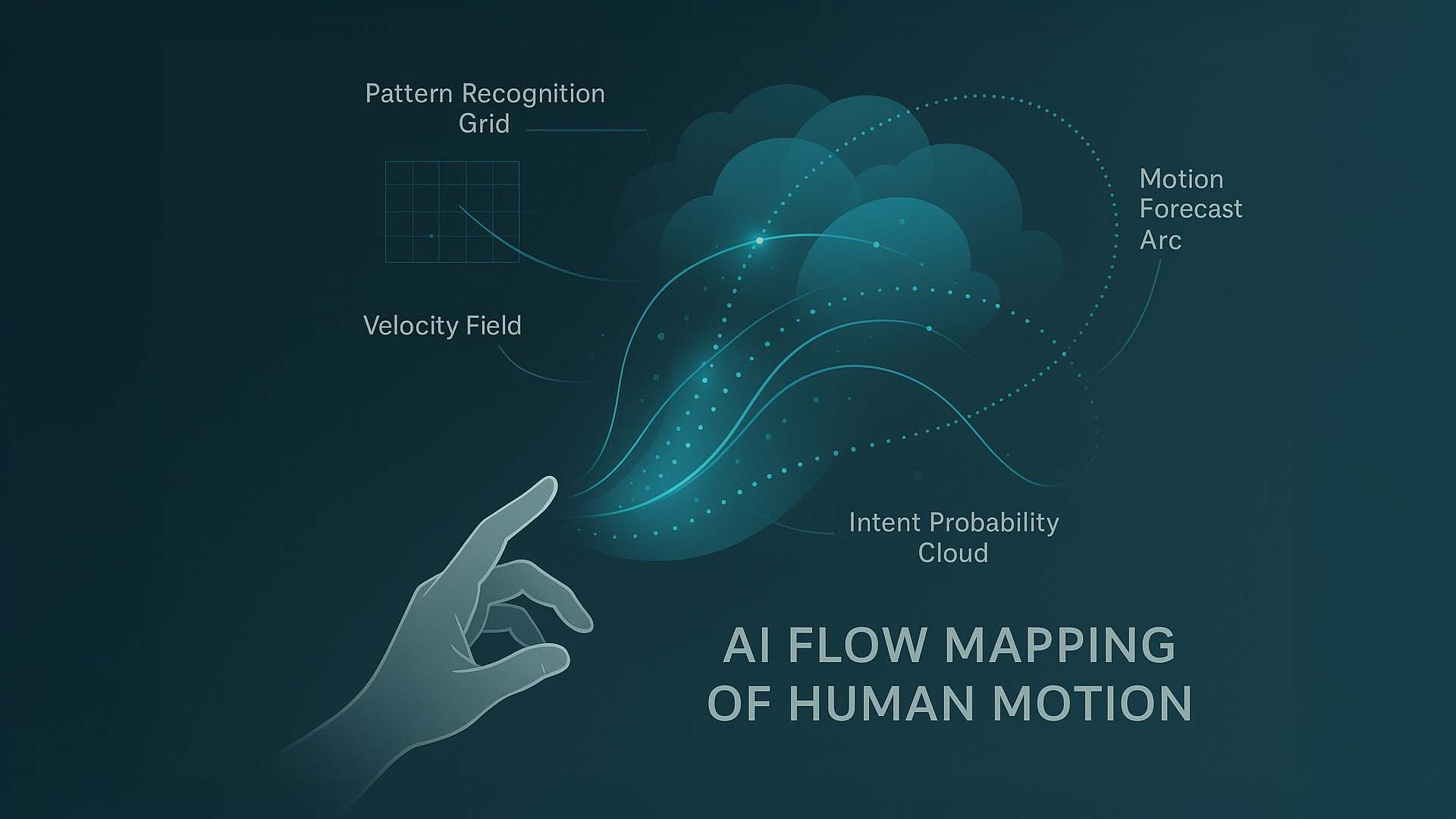

Movements in space do not consist of a single component. Only when gesture, speed, depth, position, and spatial trajectory are captured and interpreted together does a movement stream emerge that AI systems can read. In modern spatial-computing environments, the technology detects not only where a hand is moving but also how fast, at what depth, in what pattern, and with what probability a specific action is intended. From this combination emerges an interaction model that feels less like operating a system and more like a natural flow of movement.

To make this logic visible, the following visualization shows how several layers interact simultaneously. It combines physical gesture, data fields, pattern analysis, and movement prediction into a single dynamic AI flow. The image reveals how AI turns an apparently simple finger movement into a multidimensional control signal.

- Multidimensional capture – gesture, depth, speed, and trajectory interact.

- Data-based interpretation – AI recognizes patterns and probabilities.

- Flowing movement stream – multiple layers merge into a single interaction model.

AI Flow Mapping of Human Motion: The visualization shows how AI simultaneously breaks down motion data into patterns, speed, spatial depth, and predictions. Terms such as “Pattern Recognition Grid,” “Velocity Field,” “Intent Probability Cloud,” and “Motion Forecast Arc” illustrate how a single gesture becomes a flowing movement stream.

Image: © Ulrich Buckenlei | VISORIC GmbH 2025

The image shows a hand at the bottom left, its finger movement acting as the starting point. Above the hand unfolds a technical-aesthetic data stream of light traces, points, and semi-transparent layers. Each layer represents one component of AI analysis:

Pattern Recognition Grid – a grid field that recognizes the movement pattern and compares it with known interaction sequences.

Velocity Field – a data field that measures the speed and acceleration of the movement and derives meaning from them.

Intent Probability Cloud – a semi-transparent “probability cloud” in which AI calculates which action the hand is most likely trying to trigger.

Motion Forecast Arc – a predicted trajectory showing how the hand is likely to continue moving over the next few milliseconds.
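To illustrate the Velocity Field, Intent Probability Cloud, and Motion Forecast Arc, here is a minimal Python sketch under stated assumptions: positions arrive at a fixed sampling interval, velocity and acceleration are estimated with simple finite differences, the forecast assumes constant acceleration over a short horizon, and the per-action scores come from some upstream recognizer that is not shown. A softmax turns those scores into probabilities that sum to 1. All function names and action labels are hypothetical.

```python
import math

Vec = tuple[float, ...]


def velocity_and_acceleration(p0: Vec, p1: Vec, p2: Vec, dt: float) -> tuple[Vec, Vec]:
    """Velocity field: backward finite differences over three samples dt seconds apart."""
    v_prev = tuple((b - a) / dt for a, b in zip(p0, p1))
    v = tuple((b - a) / dt for a, b in zip(p1, p2))
    acc = tuple((cur - prev) / dt for prev, cur in zip(v_prev, v))
    return v, acc


def forecast_arc(p: Vec, v: Vec, acc: Vec, horizons: list[float]) -> list[Vec]:
    """Motion forecast: constant-acceleration extrapolation p + v*h + 0.5*a*h^2 per horizon h (s)."""
    return [
        tuple(pc + vc * h + 0.5 * ac * h * h for pc, vc, ac in zip(p, v, acc))
        for h in horizons
    ]


def intent_probabilities(scores: dict[str, float]) -> dict[str, float]:
    """Intent probability cloud: softmax over per-action scores (sums to 1)."""
    top = max(scores.values())  # subtract the maximum for numerical stability
    exps = {action: math.exp(s - top) for action, s in scores.items()}
    total = sum(exps.values())
    return {action: e / total for action, e in exps.items()}


# Usage: three samples 10 ms apart, then a 10 ms and 20 ms look-ahead.
p0, p1, p2 = (0.0, 0.0, 0.0), (0.01, 0.0, 0.0), (0.03, 0.0, 0.0)
v, acc = velocity_and_acceleration(p0, p1, p2, dt=0.01)
print(forecast_arc(p2, v, acc, horizons=[0.01, 0.02]))
print(intent_probabilities({"select": 2.0, "rotate": 0.5, "dismiss": -1.0}))
```

Finite differences amplify sensor noise, so a practical system would typically smooth the positions first (for example with a Kalman filter or a One Euro filter) before differentiating.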

The visualization demonstrates that modern interaction systems do not react to a single movement in isolation but to its entire context. From many data points emerges a continuous movement stream that remains intuitive for humans yet becomes precisely readable for machines. In the next section, we examine how this interaction logic unlocks new design possibilities in real environments – such as offices, production halls, or training systems.

How interaction logic transforms real spaces

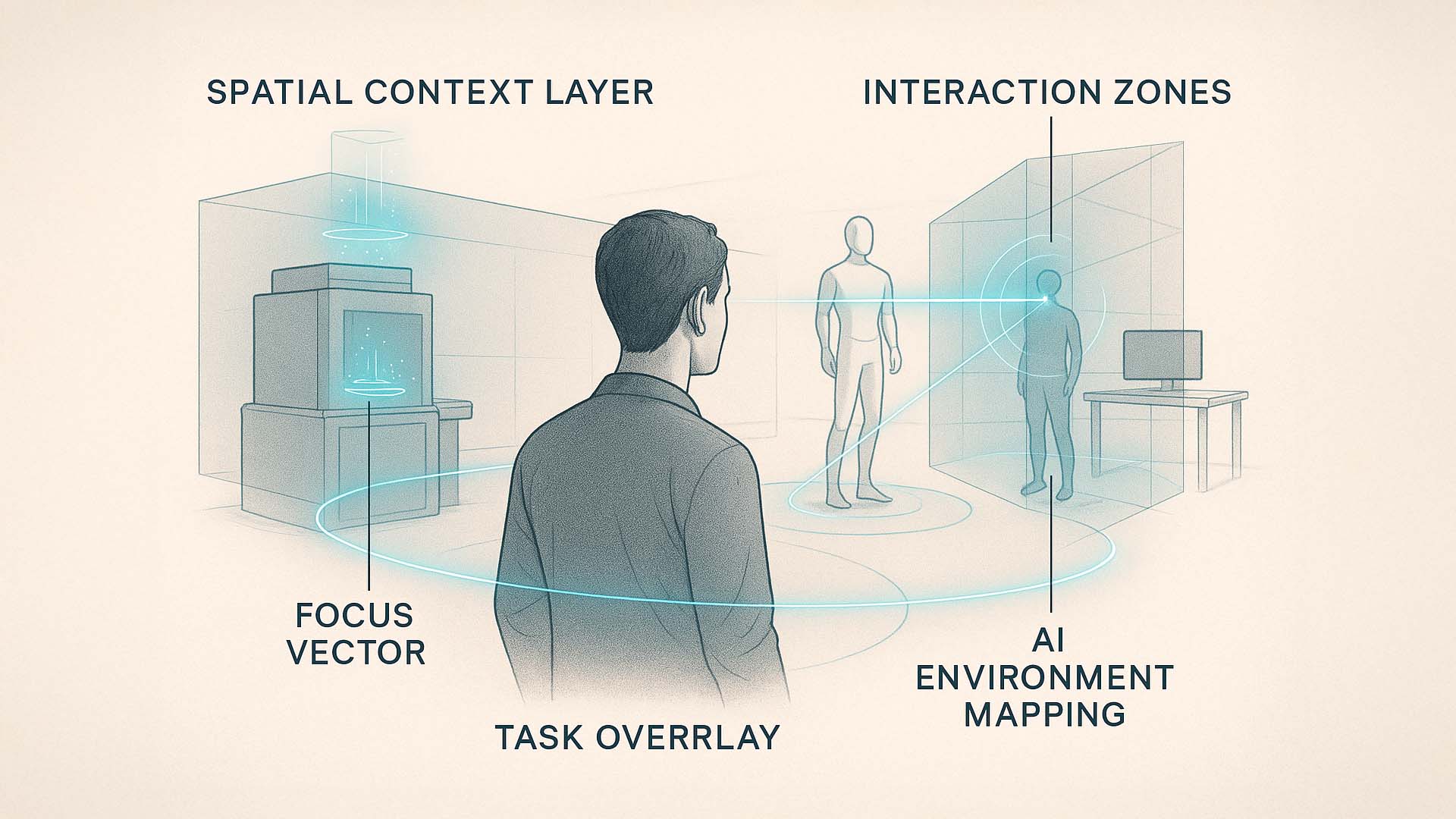

When movement, depth, spatial zones, and AI interpretation interact, a new type of workspace emerges: spaces that understand what people want to do before a traditional interface appears. The following examples show how such interaction logic is already being used in offices, production areas, and training environments today. Instead of rigid controls, context-dependent information layers emerge that dynamically adapt to location, task, and user movement.

Modern systems interpret gaze direction, position, distance, and movement patterns in real time. This creates a workspace that puts information where it is needed instead of forcing people to search for tools or displays. This development leads to more efficient decisions, a more natural operating logic, and an environment that actively supports the user.

- Space as information architecture – content appears exactly where the task originates.

- Context-dependent overlays – machines, objects, and workstations generate dynamic overlays.

- Movement-based prioritization – the space recognizes relevance through proximity, gaze, and action.

Context-Aware Spatial Workspace: The illustration shows how AI interprets real space and creates interaction zones. Elements like “Spatial Context Layer,” “Interaction Zones,” “Task Overlay,” “Focus Vector,” and “AI Environment Mapping” make visible how spaces begin to understand behavior.

Image: © Ulrich Buckenlei | VISORIC GmbH 2025

The image shows a user in a real environment – a mixture of workspace and interaction zone. Around them, several technical layers illustrate how AI analyzes the situation. The Spatial Context Layer forms a semi-transparent spatial plane showing which areas of the space are currently relevant. The Interaction Zones mark areas where movements or gestures have special meaning. Above an object appears a Task Overlay, a small data field displaying context-relevant information such as operating instructions, status, or safety notes.

The Focus Vector connects the user’s gaze or orientation with a specific object, showing how AI identifies what the user is paying attention to. Additionally, AI Environment Mapping visualizes the system’s ability to scan and understand the environment and derive predictions from it. This creates a work environment that does not merely react but anticipates.
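The Focus Vector can be approximated by comparing the user's gaze or head direction with the direction to each known object and picking the closest match. This Python sketch assumes that object positions are already known from environment mapping and uses a hypothetical 10° attention cone; `focused_object` and the object names are illustrative, not an existing API.

```python
import math
from typing import Optional

Vec3 = tuple[float, float, float]


def _norm(v: Vec3) -> float:
    return math.sqrt(sum(c * c for c in v))


def focused_object(head: Vec3, gaze: Vec3, objects: dict[str, Vec3],
                   max_angle_deg: float = 10.0) -> Optional[str]:
    """Return the object whose direction from the head is closest to the gaze
    direction, provided it lies within the attention cone; otherwise None."""
    g = _norm(gaze)
    if g == 0:
        return None
    best, best_angle = None, math.radians(max_angle_deg)
    for name, pos in objects.items():
        d = tuple(p - h for p, h in zip(pos, head))  # head-to-object vector
        n = _norm(d)
        if n == 0:
            continue
        cos_a = sum(a * b for a, b in zip(gaze, d)) / (g * n)
        angle = math.acos(max(-1.0, min(1.0, cos_a)))  # clamp against rounding
        if angle <= best_angle:
            best, best_angle = name, angle
    return best


print(focused_object((0.0, 0.0, 0.0), (1.0, 0.0, 0.0),
                     {"valve": (2.0, 0.1, 0.0), "panel": (0.0, 2.0, 0.0)}))
```

Measuring the angle rather than the raw distance to the gaze ray keeps the check independent of how far away an object is.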

This scene exemplifies how spaces become active information partners. Instead of passively waiting for input, they support users through contextual understanding – visualized by glowing lines and dynamic layers in the room. Interaction becomes more fluid, natural, and efficient. In the next section, we look at how adaptive AI layers make such systems even more precise and predictive, enabling them to support complex workflows proactively.

How adaptive AI layers make interactions more precise and predictive

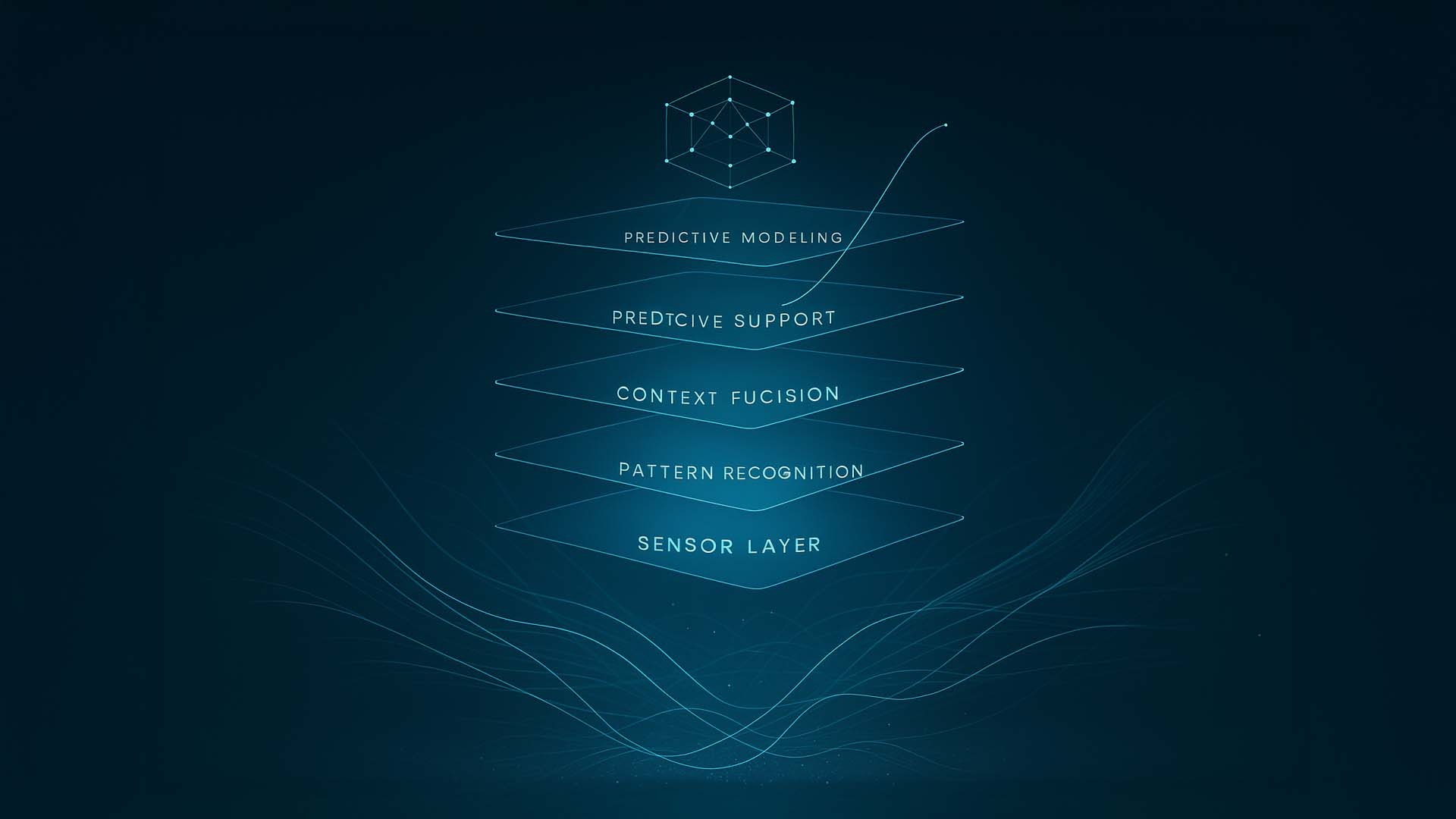

Before a spatial interaction system can respond at all, many invisible steps must run in the background. Every gesture, movement, and spatial signal is processed by a multi-layered AI system that recognizes patterns, interprets context, and anticipates future actions. This layering is the true core of next-generation spatial computing and determines whether interactions merely function or feel genuinely natural.

The newly created visualization makes this otherwise invisible process easy to follow. It shows how multiple AI layers are stacked and how raw sensor data becomes meaningful, intelligent, and predictive interaction behavior. Each layer has its own function – and together they create a kind of “digital nervous system” that not only detects behavior but understands it.

- Sensor Layer → The lowest layer collects raw data: position points, movement curves, depth, and speed. This is the foundational material for all further interpretation.

- Pattern Recognition → This layer identifies recurring patterns in movement: typical gestures, sequences, directional changes, and micro-reactions. Chaotic data becomes recognizable structure.

- Context Fusion → The third layer fuses movement data with spatial environment, task, and situation. The AI understands where the interaction is happening, what context is relevant, and what meanings arise.

- Predictive Modeling → Here prediction begins. The AI calculates what movements are likely to follow, which goals the user is pursuing, and which options make sense. Behavior becomes expectation.

- Predictive Support → The top layer turns this evaluation into concrete support. Interfaces adjust their position, important options move closer, and reactions are accelerated or prepared in advance.
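The five-layer stack can be read as a pipeline in which each layer consumes the output of the one below. The Python sketch below is a deliberately tiny, hypothetical illustration: the "sensor data" is just a series of hand-to-panel distances, the pattern layer knows three rule-based patterns, context fusion uses a zone and task label, prediction estimates time to contact, and support decides whether to prepare an interface early. None of the names, labels, or thresholds come from the article.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Context:
    zone: str  # hypothetical zone label from environment mapping
    task: str  # hypothetical label of the current work step


def sensor_layer(distances: list[float], dt: float) -> dict:
    """Sensor layer: raw hand-to-panel distances (m) and their rate of change (m/s)."""
    rates = [(b - a) / dt for a, b in zip(distances, distances[1:])]
    return {"distance": distances[-1], "rates": rates}


def pattern_layer(sensor: dict, eps: float = 0.01) -> str:
    """Pattern recognition: classify the recent movement with simple rules."""
    rates = sensor["rates"]
    if not rates:
        return "idle"
    if all(r < -eps for r in rates):
        return "approaching"
    if all(r > eps for r in rates):
        return "retreating"
    return "idle"


def context_layer(pattern: str, ctx: Context) -> Optional[str]:
    """Context fusion: combine the pattern with zone and task into a goal hypothesis."""
    if pattern == "approaching" and ctx.zone == "panel":
        return f"open_{ctx.task}_controls"
    return None


def predictive_layer(sensor: dict, goal: Optional[str]) -> Optional[float]:
    """Predictive modeling: time to contact (s), assuming the latest speed stays constant."""
    if goal is None:
        return None
    speed = -sensor["rates"][-1]  # positive while the hand is closing in
    return sensor["distance"] / speed if speed > 0 else None


def support_layer(goal: Optional[str], ttc: Optional[float], lead_time: float = 0.5) -> str:
    """Predictive support: prepare the interface if contact is expected soon."""
    if goal is not None and ttc is not None and ttc <= lead_time:
        return f"preload:{goal}"
    return "no_action"


def run_stack(distances: list[float], dt: float, ctx: Context) -> str:
    sensor = sensor_layer(distances, dt)
    pattern = pattern_layer(sensor)
    goal = context_layer(pattern, ctx)
    ttc = predictive_layer(sensor, goal)
    return support_layer(goal, ttc)


print(run_stack([0.5, 0.4, 0.3], dt=0.1, ctx=Context(zone="panel", task="inspection")))
```

Keeping each layer a separate function makes it possible to swap a single layer, for example replacing the rule-based pattern layer with a trained classifier, without touching the others.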

Adaptive AI Layer Stack: The visualization shows five AI layers – from sensor data capture to predictive decision support.

Visualization: © Ulrich Buckenlei | Visoric GmbH, 2025

The image depicts the layers as floating semi-transparent planes – each clearly separated yet part of a connected process. At the bottom, flowing motion lines form the raw data the AI reads. Above them lie layers of interpretation: pattern recognition, context fusion, and predictive models. At the top floats the Predictive Support layer, illustrating how the system can stay one step ahead – not dominating, but intelligently assisting.

This illustrates how modern spatial systems do not just react but act. The more the AI understands, the less the user must consciously control. Systems begin to anticipate needs, structure workflows in advance, and simplify complex tasks through intelligent pre-selection. This creates a work mode in which interactions become not only faster but also mentally easier.

In the next section, we examine how these predictive AI models support not just individual gestures but entire workflows – from industrial processes to training environments where the system recognizes errors before they occur.

How AI supports entire workflows predictively

While adaptive AI layers can precisely interpret individual movements and gestures, their greatest potential unfolds at the level of complete workflows. Modern systems no longer analyze only snapshots; they understand processes in context: which steps follow each other, where risks arise, when support is needed, and how tasks can be accelerated without compromising safety or quality.

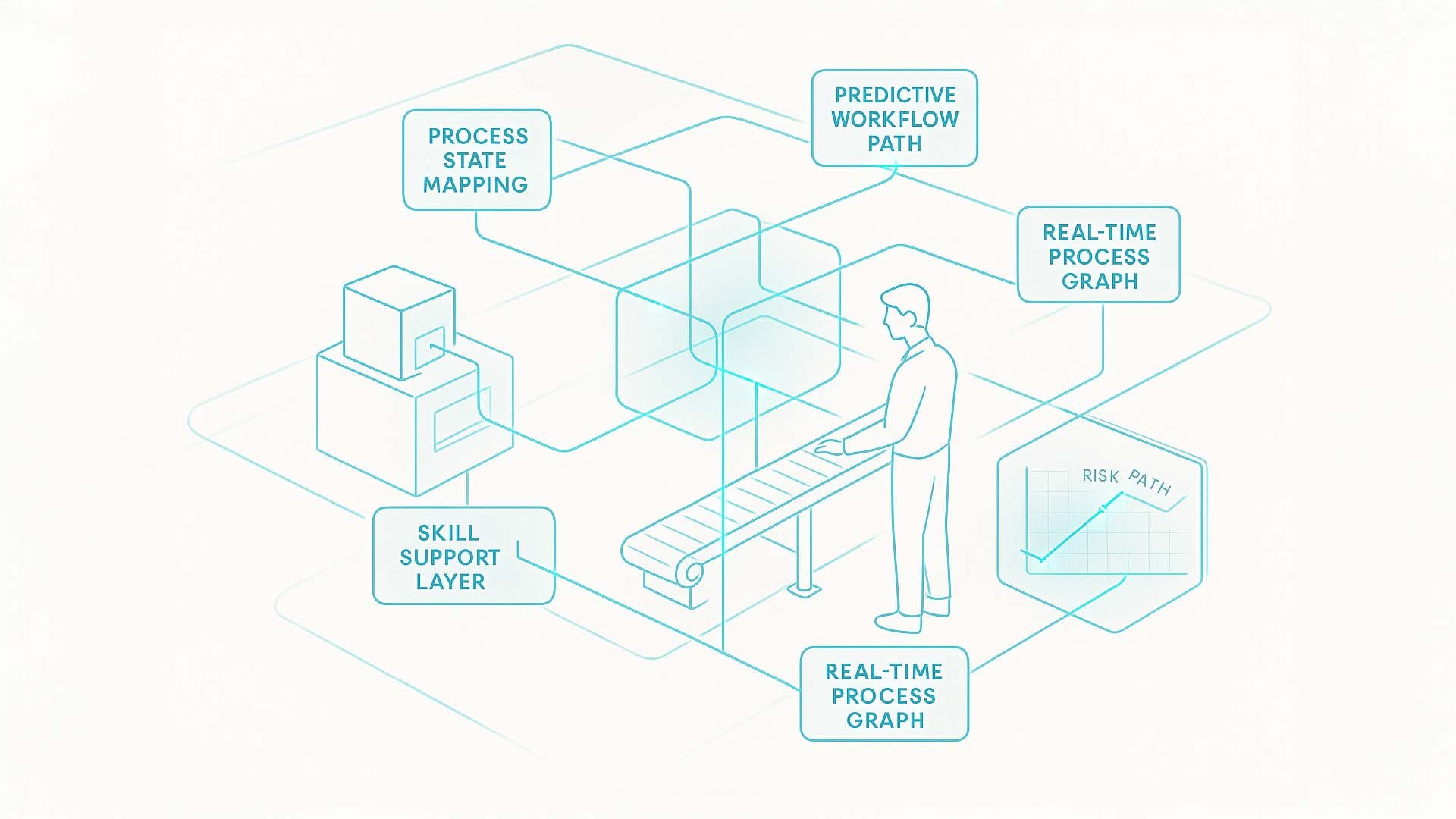

The new technical illustration shows exactly this expanded view. It represents a simplified production or training environment and reveals how AI understands, connects, and predictively controls spatial workflows. The human, the machine, the material flow – all become part of an integrated information system that recognizes patterns, forecasts developments, and intervenes as the situation requires before errors occur.

- Process State Mapping → The AI continuously detects the current state of the system: which machine is running, which step is active, which parameters are changing.

- Predictive Workflow Path → Based on the detected state, the AI derives which process steps follow next – including alternative paths.

- Anomaly Forecast → The AI analyzes deviations in behavior and can identify potential errors before they occur.

- Skill Support Layer → An assistance layer that provides hints, recommendations, or stabilizing information depending on a person’s experience and skill level.

- Real-Time Process Graph → A visual representation of the entire process model, reacting in real time and updating with every movement.
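One simple way to approximate an Anomaly Forecast is to combine a deviation check on the latest reading with a trend extrapolation that estimates when a process parameter will cross a limit. The Python sketch below swaps in two textbook techniques, a z-score test and a least-squares linear trend, purely for illustration; the readings, the limit, and the function names are hypothetical, and the trend check only handles limits above the current value.

```python
import statistics
from typing import Optional


def is_deviation(history: list[float], value: float, k: float = 3.0) -> bool:
    """Flag a reading that lies more than k standard deviations from the history mean."""
    mean = statistics.mean(history)
    sd = statistics.stdev(history)  # requires at least two samples
    if sd == 0:
        return value != mean
    return abs(value - mean) / sd > k


def steps_until_limit(values: list[float], limit: float) -> Optional[float]:
    """Fit a least-squares line to equally spaced readings and estimate how many
    steps ahead the trend reaches `limit`; None if it is not heading there."""
    n = len(values)
    if n < 2:
        return None
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(range(n), values))
    slope = sxy / sxx
    current = mean_y + slope * ((n - 1) - mean_x)  # fitted value at the latest step
    if current >= limit:
        return 0.0  # the limit is already reached
    if slope <= 0:
        return None  # not trending toward the limit
    return (limit - current) / slope


# Usage with hypothetical temperature readings (one per minute) and a 70-degree limit.
readings = [60.0, 61.0, 62.0, 63.0, 64.0]
print(steps_until_limit(readings, limit=70.0))
print(is_deviation([10.0, 10.1, 9.9, 10.0, 10.2, 9.8], 12.0))
```

The z-score check reacts to sudden jumps, while the trend check gives an early warning for slow drifts; the two complement each other, which is why they are shown together.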

Predictive Process Intelligence: The illustration shows how AI analyzes, forecasts, and optimizes entire workflows – from process states to real-time error detection.

Visualization: © Ulrich Buckenlei | Visoric GmbH, 2025

The illustration shows an abstracted production scene: a person stands at a conveyor belt, with machine modules to the left and right feeding data into the AI layers. Fine lines and transparent information frames float over and between objects, illustrating how AI links different sources. Process State Mapping and the Real-Time Process Graph symbolize how the system understands the current state, while Predictive Workflow Path and Anomaly Forecast represent the next level: the ability to predict developments based on data flows.

The scene shows how AI is no longer merely reactive but proactive. It recognizes patterns before they become visible. It flags risks before they become critical. And it supports people before overload occurs. This results in interactions that are not only safer but significantly more intelligent – a crucial step forward in the evolution of industrial and creative processes.

In the next section, we look at how these predictive systems create concrete advantages in complex work environments – more efficient decisions, fewer errors, and a new way of collaborating between humans, spaces, and AI.

When AI thinks with the workspace

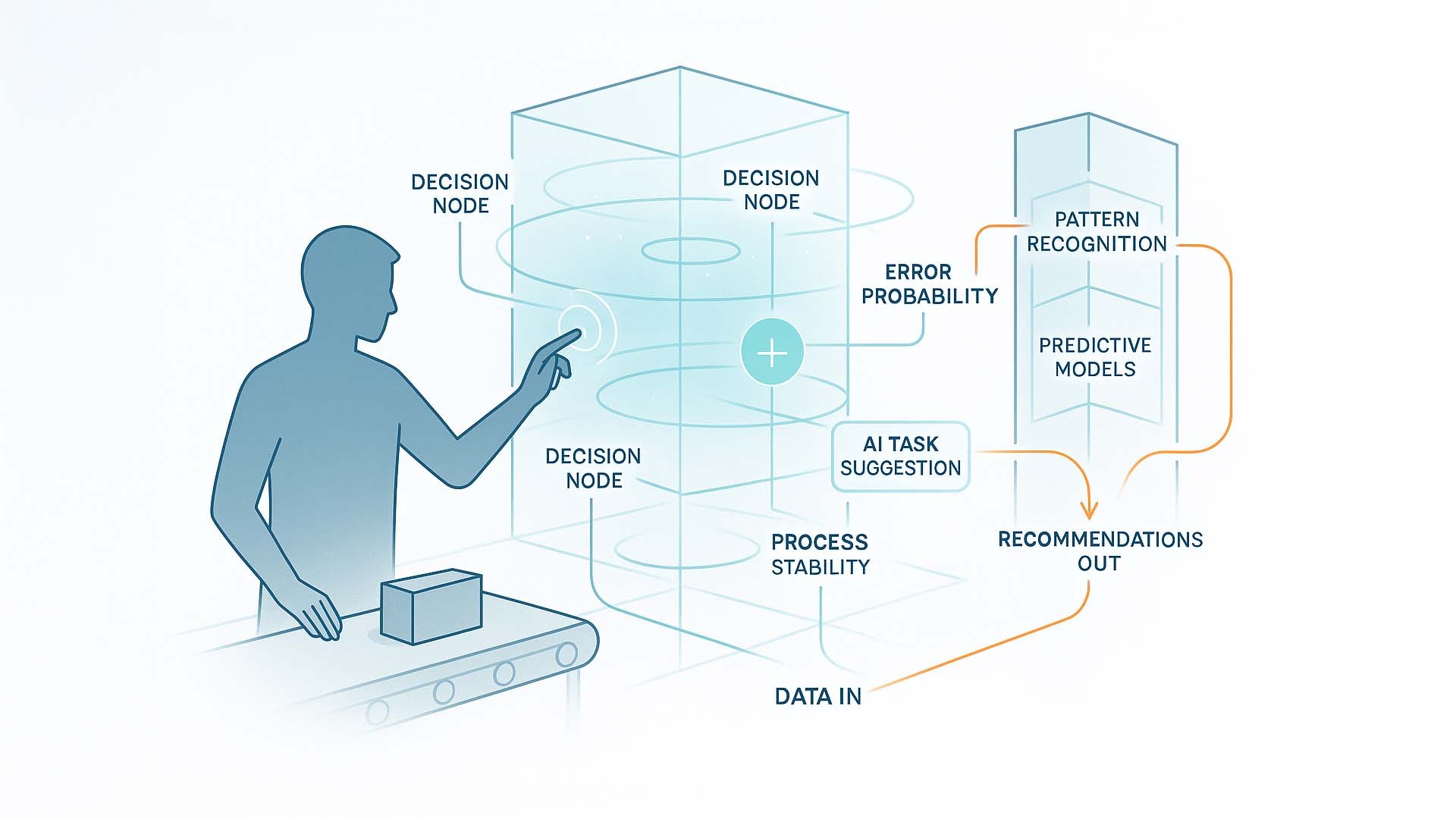

Digital interactions only become truly powerful when artificial intelligence not only reacts to gestures but actively understands context, recognizes patterns, and anticipates action steps. The newly created visual shows exactly this transition: from pure motion control to a system that connects human behavior, spatial processes, and machine data into a single intelligent decision flow.

In the illustrated scene, a person works at a conveyor belt. Above the work area floats a volumetric interface defining multiple decision points. These so-called Decision Nodes act as spatial markers where the system understands that a specific action is about to occur – such as inspecting an object or triggering a process step.

- Decision Nodes → Points where the system recognizes human action paths

- Error Probability → Estimates the error risk of the current task

- AI Task Suggestion → AI-generated suggestions for the next optimal step

- Process Stability → Real-time evaluation of the overall process stability

- Data In → Sensor data, movement patterns, and context information are fed in

- Pattern Recognition → Identifies patterns in behavior, object condition, or process flow

- Predictive Models → AI predicts likely developments and implications

- Recommendations Out → The system outputs concrete action recommendations

The interplay of spatial interaction and AI: The workspace becomes the human’s cognitive partner.

Visualization: © Ulrich Buckenlei | VISORIC GmbH 2025

The graphic shows how the workspace itself becomes an intelligent decision surface. Each movement of the hand changes the system status, each object generates data points, and each variation in the workflow creates new patterns interpreted by Pattern Recognition. The Predictive Models compute in real time what consequences a decision might have – from quality deviations to potential process errors.

The Error Probability mechanism is particularly central. This circular layer in the interface indicates the likelihood that the current step will lead to a deviation. When a threshold is reached, AI Task Suggestion activates automatically: the system proposes what should be done next to keep the process stable.
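The threshold mechanism behind Error Probability and AI Task Suggestion can be sketched with a logistic model. In this Python sketch the features (`deviation_mm`, `speed_ratio`), the weights, the 0.7 threshold, and the suggestion text are all hypothetical placeholders; a real system would learn its model from process data rather than use hand-set numbers.

```python
import math
from typing import Optional

# Hypothetical placeholder weights; a real system would fit these to process data.
WEIGHTS = {"bias": -3.0, "deviation_mm": 0.8, "speed_ratio": 1.5}


def error_probability(deviation_mm: float, speed_ratio: float) -> float:
    """Logistic estimate of the risk that the current step leads to a deviation.

    deviation_mm: measured positional deviation of the part (hypothetical feature)
    speed_ratio:  worker speed relative to the usual pace (hypothetical feature)
    """
    z = (WEIGHTS["bias"]
         + WEIGHTS["deviation_mm"] * deviation_mm
         + WEIGHTS["speed_ratio"] * speed_ratio)
    return 1.0 / (1.0 + math.exp(-z))


def maybe_suggest(probability: float, threshold: float = 0.7,
                  suggestion: str = "Re-check part alignment before continuing") -> Optional[str]:
    """Return a task suggestion only once the risk reaches the threshold."""
    return suggestion if probability >= threshold else None


for dev, ratio in [(0.0, 1.0), (3.0, 1.2)]:
    p = error_probability(dev, ratio)
    print(f"deviation={dev} mm, speed_ratio={ratio}: p={p:.2f}, suggestion={maybe_suggest(p)}")
```

In practice a small hysteresis band (a lower threshold for switching the suggestion off again) would keep the suggestion from flickering when the estimate hovers around the threshold.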

The working environment thus becomes an intelligent partner rather than a passive tool. The person still decides, but with the support of AI that detects risks before they arise and optimizes workflows proactively. The potential result is a measurable advantage in complex production, service, or training scenarios: fewer errors, faster decisions, and far more intuitive collaboration between humans and digital space.

In the next section, we explore how these systems generate immediate efficiency gains in highly dynamic environments – such as factory floors, maintenance areas, or training simulations – and how AI-based recommendations reshape teamwork between humans and technology.

Efficiency in motion: How AI supports teams in dynamic workspaces

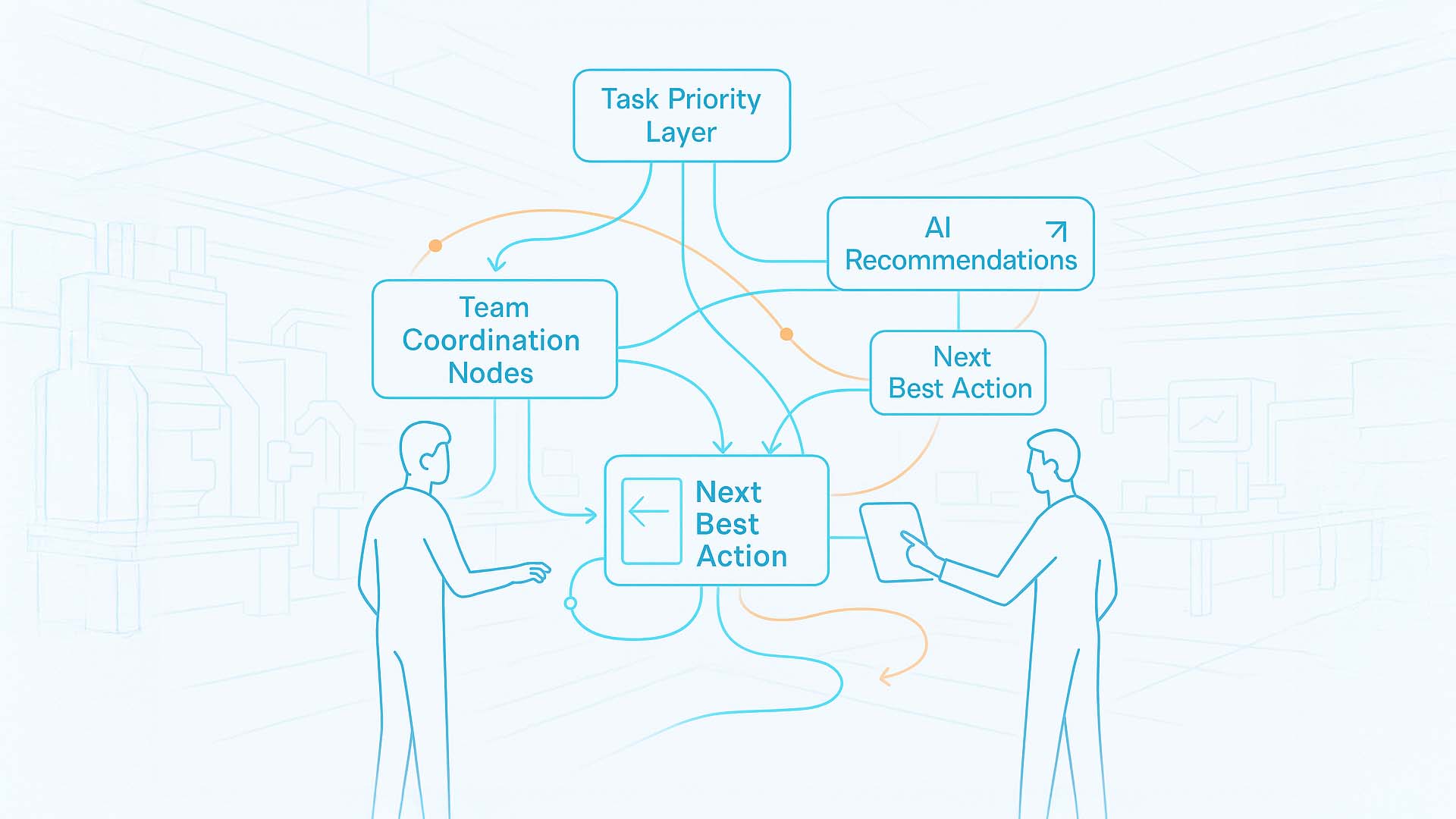

In highly dynamic environments – from production lines to maintenance departments to training situations – success often depends not on a single action but on the interplay of many small decisions. This is where AI unfolds its full potential: it recognizes patterns, coordinates activities between people, and helps teams work together faster and more precisely. The newly created visual shows this approach in a clearly structured, easy-to-understand way: the workspace becomes a shared decision surface where humans, machines, and AI layers interact.

Team-oriented AI support: The illustration shows how AI prioritizes, coordinates, and generates action recommendations for teams in real time.

Visualization: © Ulrich Buckenlei | VISORIC GmbH 2025

The image shows two schematic users – left and right – interacting with a shared spatial information system. Between them runs a network of lines, nodes, and UI elements visualizing the flow of information. The terms in the graphic represent key functional areas of modern AI assistance systems.

- Task Priority Layer → This layer analyzes in real time which tasks are currently most important and displays priorities before they need to be communicated verbally.

- Team Coordination Nodes → Node points showing how activities of multiple people are linked. They visualize which person takes the next step and how the workflow shifts.

- AI Recommendations → Dynamic suggestions adapted to context and situation. They present possible action paths that lead to higher efficiency or lower error rates.

- Next Best Action → The concrete action recommendation that results from analyzing environment, behavior, and process. This recommendation may differ depending on the situation – and is displayed directly in space.

- Workflow Dynamics → Curved lines and movement patterns showing how processes change when a team member makes a decision. AI visualizes how the process logic evolves.

- Real-Time Efficiency Graph → A small but central element. This graph shows the immediate impact of decisions on overall performance – a measurable indicator of team effectiveness.

The illustration shows: efficiency does not arise from faster individual actions, but from intelligent coordination in space. Through Team Coordination Nodes, both people intuitively know which steps they should take together. The AI Recommendations and Task Priority Layer adapt to changes in seconds, preventing delays or duplicate work. The resulting Next Best Action becomes visible for all participants – exactly where it is needed.
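The Task Priority Layer and Next Best Action can be illustrated with a simple greedy assignment: score each task, then hand the highest-priority tasks to free team members who have the required skill. This Python sketch is hypothetical and not the system shown in the illustration; the `Task` type, the urgency and impact weights, and the skill labels are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    urgency: float  # 0..1, hypothetical estimate
    impact: float   # 0..1, hypothetical estimate
    skill: str      # skill required to perform the task


def priority(task: Task, w_urgency: float = 0.6, w_impact: float = 0.4) -> float:
    """Task priority layer: weighted score of urgency and impact."""
    return w_urgency * task.urgency + w_impact * task.impact


def next_best_actions(tasks: list[Task], members: dict[str, set[str]]) -> dict[str, str]:
    """Assign each free member at most one task, highest priority first (greedy)."""
    free = dict(members)  # shallow copy so the caller's dict is not modified
    plan: dict[str, str] = {}
    for task in sorted(tasks, key=priority, reverse=True):
        candidates = [name for name, skills in free.items() if task.skill in skills]
        if candidates:
            member = candidates[0]
            plan[member] = task.name
            del free[member]
    return plan


tasks = [
    Task("clear jam", urgency=0.9, impact=0.8, skill="mechanic"),
    Task("restock", urgency=0.3, impact=0.4, skill="operator"),
    Task("calibrate", urgency=0.7, impact=0.9, skill="mechanic"),
]
team = {"Anna": {"mechanic"}, "Ben": {"operator", "mechanic"}}
print(next_best_actions(tasks, team))
```

Greedy assignment is easy to explain and cheap enough to rerun on every change, but it is not guaranteed to be optimal; in the example, "restock" stays unassigned because Ben was already given "calibrate". An assignment solver such as the Hungarian algorithm would be the next step if overall optimality matters.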

The result: fewer misunderstandings, fewer errors, higher process quality, and a much faster workflow. AI does not replace human teams but becomes their shared source of predictive decision support.

In the next section, we show a real demonstration video that makes the concepts described above visible in action. It shows how AI-based environments capture human movement in real time, interpret it, and convert it into spatial interaction. What has been theoretical becomes immediately tangible.

Digital movement becomes the interface – the video

In the previous section, we explored how AI understands and predicts workspaces, teams, and workflows in real time. But the foundation of all these developments begins elsewhere: in the ability to interpret human movement as a high-resolution, machine-readable signal. The following video shows one of the most impressive demonstrations of this technology today – a live system that recognizes volumetric movement, analyzes it, and processes it as a dynamic data stream.

What becomes visible here is not an effect or animation. It is the technical foundation of the next interaction generation: environments that understand human behavior before traditional interfaces come into play. This machine-readable movement is what enables adaptive safety systems, spatial robotics, and AI-native workspaces.

Demonstration of real-time volumetric movement: motion data as machine-readable structure.

Technology: augmenta.tech · Processing: TouchDesigner · Filming: studio.lablab · Voiceover: Ulrich Buckenlei · Fair Use for analytical purposes

This video forms the bridge between research, industrial practice, and the AI-supported interaction models described above. It not only shows how movement is analyzed – it shows how the entire concept of interaction is changing. Once behavior becomes machine-readable, a completely new class of digital systems emerges: fast, predictive, spatial, and autonomous.

In the final chapter, we look at how companies can already use these developments today – and how the VISORIC expert team in Munich supports organizations in taking their first concrete steps into this new generation of spatial interaction.

The VISORIC expert team in Munich

The developments described in this article – from spatial interaction to AI-supported assistance systems to predictive workflows – do not emerge in a vacuum. They grow where research, design, software development, and spatial visualization are consistently brought together. This is exactly the approach of the VISORIC expert team in Munich.

Our focus is to prepare complex future technologies in a way that makes them immediately usable for companies. Whether building spatial interaction models, integrating AI processes into industrial workflows, or designing intuitive 3D interfaces, the key is always combining technical depth with practical applicability.

- Strategic development → Analysis of processes, spaces, and potential for AI-supported interaction

- Spatial interface design → Development of clear volumetric UI logic for production, training, and service

- Technical implementation → Integration of AI layers, real-time motion intelligence, and spatial computing workflows

- Prototyping & scaling → From early demonstrators to enterprise-ready solutions

The VISORIC expert team: Ulrich Buckenlei and Nataliya Daniltseva discussing AI-supported interaction, spatial computing, and future workflows.

Source: VISORIC GmbH | Munich 2025

The team photo stands for our working philosophy: we combine precision in detail with a view of the bigger picture. Many projects start with first visual sketches or technical models and gradually evolve into robust immersive systems that create real value. A central factor is that we never view technologies in isolation but always in their spatial, organizational, and human context.

If you are considering developing your own systems for spatial interaction, AI-supported analysis processes, or immersive product development, we are happy to support you. Even an initial conversation can be enough to identify opportunities and outline concrete next steps.

Contact Persons:

Ulrich Buckenlei (Creative Director)

Mobile: +49 152 53532871

Email: ulrich.buckenlei@visoric.com

Nataliya Daniltseva (Project Manager)

Mobile: +49 176 72805705

Email: nataliya.daniltseva@visoric.com

Address:

VISORIC GmbH

Bayerstraße 13

D-80335 Munich